Authors: Chaojun Xiao, Pengle Zhang, Xu Han, Guangxuan Xiao, Yankai Lin, Zhengyan Zhang, Zhiyuan Liu, Song Han, Maosong Sun

Published on: February 07, 2024

Impact Score: 8.22

arXiv ID: arXiv:2402.04617

Summary

- What is new: Introduces InfLLM, a training-free method that allows large language models to process streaming long sequences without losing the ability to understand long-distance dependencies.

- Why this is important: Existing large language models are trained on sequences of limited length, so they struggle with much longer inputs, which are out-of-domain for them and contain distracting content.

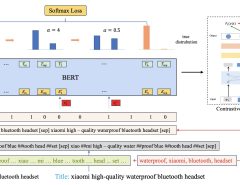

- What the research proposes: The proposed InfLLM method utilizes additional memory units to store distant contexts and employs an efficient mechanism for attention computation, enabling better processing of long sequences.

- Results: Without additional training, InfLLM enables pre-trained large language models to perform better on long sequences, and it still captures long-distance dependencies at sequence lengths of up to 1,024K tokens.
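
To make the memory-unit idea above more concrete, here is a minimal, illustrative sketch in plain Python. It is not the authors' implementation. It assumes that distant context is split into fixed-size blocks, that each block is scored against the current query by its mean key vector (a simplification chosen for this example), and that only the top-scoring blocks plus a local window of recent tokens take part in attention. The function names and the parameters `local_window`, `block_size`, and `top_k` are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    # Subtract the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def split_into_blocks(items, block_size):
    return [items[i:i + block_size] for i in range(0, len(items), block_size)]


def select_memory_blocks(query, key_blocks, top_k):
    """Return indices of the top_k blocks most relevant to the query.

    Assumption: relevance is the dot product between the query and the
    mean key of the block. This is a simplification for illustration.
    """
    scores = []
    for block in key_blocks:
        dim = len(block[0])
        mean_key = [sum(k[d] for k in block) / len(block) for d in range(dim)]
        scores.append(dot(query, mean_key))
    ranked = sorted(range(len(key_blocks)), key=lambda i: scores[i], reverse=True)
    # Restore positional order among the selected blocks.
    return sorted(ranked[:top_k])


def attend_with_memory(query, keys, values, local_window, block_size, top_k):
    """Attend over selected distant blocks plus the most recent tokens.

    Assumes keys/values are non-empty and local_window >= 1.
    """
    split = max(0, len(keys) - local_window)
    distant_k, local_k = keys[:split], keys[split:]
    distant_v, local_v = values[:split], values[split:]

    key_blocks = split_into_blocks(distant_k, block_size)
    value_blocks = split_into_blocks(distant_v, block_size)
    chosen = select_memory_blocks(query, key_blocks, top_k) if key_blocks else []

    # Context = selected memory blocks followed by the local window.
    ctx_k = [k for i in chosen for k in key_blocks[i]] + local_k
    ctx_v = [v for i in chosen for v in value_blocks[i]] + local_v

    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in ctx_k])
    dim = len(ctx_v[0])
    output = [sum(w * v[d] for w, v in zip(weights, ctx_v)) for d in range(dim)]
    return output, chosen
```

The design point this sketch tries to show is that the attention cost per step depends on `top_k * block_size + local_window` rather than on the full sequence length, which is what would let a model stream over very long inputs while still reaching back to relevant distant context.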

Technical Details

Technological frameworks used: Not specified

Models used: Large language models (LLMs)

Data used: Not specified

Potential Impact

This research could affect markets that rely heavily on natural language processing, particularly cybersecurity, finance, and customer service, where understanding long text sequences is crucial. Companies specializing in AI-driven analytics and customer service automation could benefit most.

Want to implement this idea in a business?

We have generated a startup concept here: MemoryStreamAI.
