Authors: Youjia Wang, Yiwen Wu, Ruiqian Li, Hengan Zhou, Hongyang Lin, Yingwenqi Jiang, Yingsheng Zhu, Guanpeng Long, Jingya Wang, Lan Xu, Jingyi Yu

Published on: February 03, 2024

Impact Score: 8.3

arXiv ID: arXiv:2402.03944

Summary

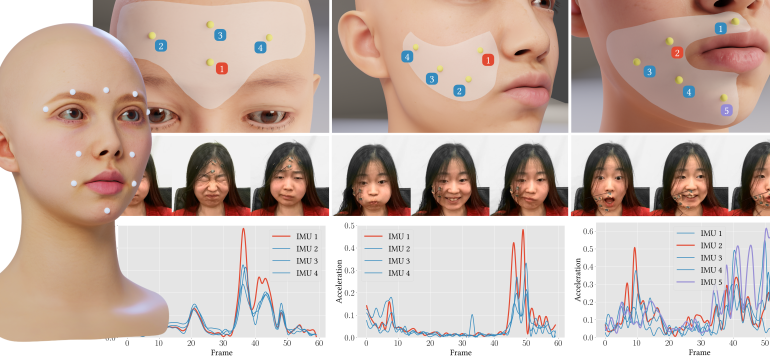

- What is new: A novel approach to facial expression capture based on inertial measurement unit (IMU) signals rather than the usual vision-based solutions.

- Why this is important: Existing facial motion capture solutions rely on visual cues, which compromises privacy and makes them unreliable under occlusion.

- What the research proposes: IMUSIC, a method that captures facial expressions with micro-IMUs positioned according to an anatomy-driven placement scheme, together with a new IMU-ARKit dataset.

- Results: IMUSIC captures facial expressions accurately even in scenarios where visual methods fail, protects user privacy, and enables new applications such as hybrid capture and detection of minute facial movements.

Technical Details

Technological frameworks used: Transformer diffusion model

Models used: Transformer diffusion model that predicts facial blendshape parameters, trained with a two-stage strategy
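The summary names a Transformer diffusion model that predicts blendshape parameters but gives no equations. As a rough illustration, the sketch below implements the textbook DDPM forward (noising) and reverse (denoising) steps over a vector of blendshape coefficients in plain Python. The noise-prediction parameterization, the linear beta schedule, and all function names (`q_sample`, `predict_x0`, `p_sample_step`, `sample`) are assumptions for illustration; the IMU-conditioned Transformer denoiser is replaced by a caller-supplied function, and IMUSIC's actual formulation may differ.

```python
import math
import random
from typing import Callable, List

# Sketch of a textbook DDPM (epsilon-prediction) over a vector of facial
# blendshape coefficients. The IMU-conditioned Transformer denoiser is NOT
# reproduced here; a caller-supplied `predict_noise` stands in for it.

Vector = List[float]


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> Vector:
    """Linearly spaced noise variances beta_0..beta_{T-1}."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: Vector) -> Vector:
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0: Vector, t: int, alpha_bars: Vector, noise: Vector) -> Vector:
    """Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]


def predict_x0(xt: Vector, t: int, alpha_bars: Vector, eps: Vector) -> Vector:
    """Invert q_sample given a noise estimate eps."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps)]


def p_sample_step(xt: Vector, t: int, betas: Vector, alpha_bars: Vector,
                  eps: Vector, rng: random.Random) -> Vector:
    """One reverse DDPM step x_t -> x_{t-1}, using variance sigma_t^2 = beta_t."""
    beta, a_bar = betas[t], alpha_bars[t]
    coef = beta / math.sqrt(1.0 - a_bar)
    mean = [(x - coef * e) / math.sqrt(1.0 - beta) for x, e in zip(xt, eps)]
    if t == 0:
        return mean  # no noise is added on the final step
    return [m + math.sqrt(beta) * rng.gauss(0.0, 1.0) for m in mean]


def sample(predict_noise: Callable[[Vector, int], Vector], dim: int, betas: Vector,
           alpha_bars: Vector, rng: random.Random) -> Vector:
    """Run the full reverse chain from Gaussian noise to a blendshape vector."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # x_T ~ N(0, I)
    for t in reversed(range(len(betas))):
        x = p_sample_step(x, t, betas, alpha_bars, predict_noise(x, t), rng)
    # ARKit blendshape coefficients lie in [0, 1], so clip the final sample.
    return [min(1.0, max(0.0, v)) for v in x]


if __name__ == "__main__":
    rng = random.Random(0)
    betas = linear_beta_schedule(50)
    alpha_bars = cumulative_alphas(betas)
    x0 = [0.2, 0.7, 0.0]  # three example blendshape coefficients
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = q_sample(x0, 30, alpha_bars, noise)
    # With a perfect noise estimate, x0 is recovered up to float error.
    print(predict_x0(xt, 30, alpha_bars, noise))
```

Because the denoiser is just a callable, swapping in a learned model conditioned on IMU features would leave the sampling loop unchanged; only `predict_noise` would differ.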

Data used: IMU-ARKit dataset providing paired IMU/visual signals
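The IMU-ARKit dataset pairs IMU signals with visual (ARKit) signals. One plausible way to build such pairs, sketched below, is to match each ARKit blendshape frame to the IMU reading nearest in time on a shared clock. The record types, the `pair_nearest` helper, and the 20 ms tolerance are hypothetical; the summary does not say how IMUSIC synchronizes its streams. The only external fact used is that ARKit reports 52 blendshape coefficients, each in [0, 1].

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# ARKit reports 52 facial blendshape coefficients, each in [0, 1].
NUM_ARKIT_BLENDSHAPES = 52


@dataclass
class ImuSample:
    """Hypothetical record: one timestamped reading from all facial IMUs."""
    t: float             # seconds on a clock shared with the camera
    values: List[float]  # flattened per-sensor readings (e.g. accel + gyro)


@dataclass
class BlendshapeFrame:
    """Hypothetical record: one ARKit frame of blendshape coefficients."""
    t: float
    weights: List[float]

    def __post_init__(self) -> None:
        if len(self.weights) != NUM_ARKIT_BLENDSHAPES:
            raise ValueError(f"expected {NUM_ARKIT_BLENDSHAPES} weights, got {len(self.weights)}")
        if any(w < 0.0 or w > 1.0 for w in self.weights):
            raise ValueError("blendshape weights must lie in [0, 1]")


def pair_nearest(
    imu: Sequence[ImuSample],
    frames: Sequence[BlendshapeFrame],
    max_gap: float = 0.02,
) -> List[Tuple[ImuSample, BlendshapeFrame]]:
    """Pair each frame with the IMU sample closest in time.

    Both sequences must be sorted by timestamp. Frames with no IMU sample
    within `max_gap` seconds are dropped rather than paired badly.
    """
    imu_times = [s.t for s in imu]
    pairs = []
    for frame in frames:
        i = bisect_left(imu_times, frame.t)
        # The nearest sample is either just before or just after frame.t.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(imu)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(imu_times[k] - frame.t))
        if abs(imu_times[j] - frame.t) <= max_gap:
            pairs.append((imu[j], frame))
    return pairs


if __name__ == "__main__":
    imu = [ImuSample(t=i * 0.01, values=[float(i)]) for i in range(10)]
    frames = [
        BlendshapeFrame(t=0.034, weights=[0.0] * NUM_ARKIT_BLENDSHAPES),
        BlendshapeFrame(t=0.5, weights=[0.0] * NUM_ARKIT_BLENDSHAPES),
    ]
    pairs = pair_nearest(imu, frames)
    print(len(pairs))  # 1: the frame at t=0.5 s has no IMU sample within 20 ms
```

Dropping unmatched frames, rather than pairing them with a distant IMU reading, keeps misaligned samples out of the training pairs at the cost of a slightly smaller dataset.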

Potential Impact

IMUSIC's approach could benefit, or disrupt, applications in facial recognition, gaming, VR/AR, health monitoring, and security.

Want to implement this idea in a business?

We have generated a startup concept here: FaceGest.
