Authors: Edward Kim, Isamu Isozaki, Naomi Sirkin, Michael Robson

Published on: July 04, 2023

Impact Score: 8.2

arXiv code: arXiv:2307.01898

Summary

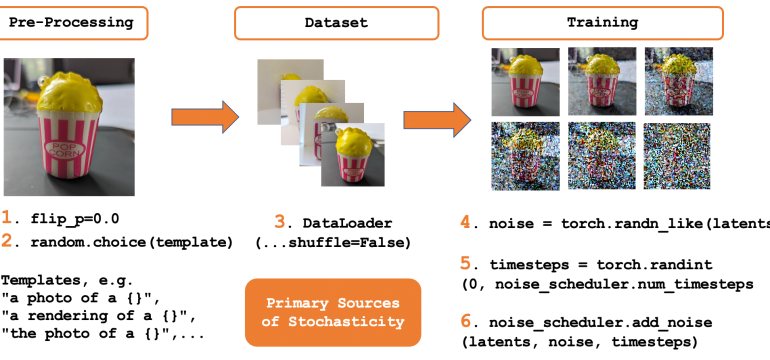

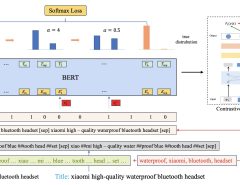

- What is new: The research introduces a method to reproducibly verify the outputs of generative AI models in a decentralized network, focusing on minimizing stochasticity in AI training for better verification and consensus.

- Why this is important: Reproducing the outputs of generative AI models is difficult because the models are inherently non-deterministic, which undermines trust and transparency in scientific research.

- What the research proposes: Utilizing locality sensitive hash comparisons and a decentralized verification network to evaluate the correctness and reproducibility of generative AI outputs, with techniques to reduce randomness in AI training.

- Results: The method detected perceptual collisions in AI-generated images with 99.89% probability and reached 100% consensus on the correctness of large language model outputs.
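To make the idea of a locality sensitive hash comparison concrete, here is a minimal sketch in Python. It is not the paper's implementation: it assumes a simple average hash as a stand-in for whatever perceptual hash the authors use, and the names `average_hash`, `hamming_distance`, `perceptually_equal`, and the `max_distance` threshold are all hypothetical. Images are plain nested lists of grayscale values so the example needs no external libraries.

```python
def average_hash(pixels):
    """Hash a grayscale image: one bit per pixel, set when the pixel is above the mean.

    Assumption: a simple average hash stands in for the paper's perceptual hash.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)


def hamming_distance(a, b):
    """Count the bit positions where two equal-length hashes differ."""
    if len(a) != len(b):
        raise ValueError("hashes must have the same length")
    return sum(x != y for x, y in zip(a, b))


def perceptually_equal(img_a, img_b, max_distance=2):
    """Treat two images as a perceptual collision if their hashes are close.

    max_distance is an illustrative threshold, not a value from the paper.
    """
    return hamming_distance(average_hash(img_a), average_hash(img_b)) <= max_distance


# A tiny 4x4 "image", a slightly brightened copy, and an inverted copy.
reference = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [18, 190, 28, 225],
]
noisy = [[p + 3 for p in row] for row in reference]
different = [[255 - p for p in row] for row in reference]

print(perceptually_equal(reference, noisy))      # small perturbation
print(perceptually_equal(reference, different))  # unrelated content
```

The point of comparing hashes rather than raw bytes is that two runs of a generative model can differ slightly at the pixel level while still producing what a verifier would consider the same image.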

Technical Details

Technological frameworks used: Decentralized verification network, locality sensitive hashing
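
As a rough illustration of how a decentralized verification network might combine hash comparisons into a verdict, the sketch below has each verifier node report the hash of its own re-generated output and accepts a claim when enough nodes land close to the claimed hash. The function names, the integer hash encoding, the `max_distance` threshold, and the two-thirds `quorum` are all assumptions made for this example and are not taken from the paper.

```python
def hamming(a, b):
    """Number of differing bits between two integer-encoded hashes."""
    return bin(a ^ b).count("1")


def verify_claim(claimed_hash, node_hashes, max_distance=4, quorum=2 / 3):
    """Accept a claimed output if a quorum of nodes reproduce a nearby hash.

    Hypothetical rule: a node "agrees" when its hash is within max_distance
    bits of the claimed hash; the claim passes when the agreeing fraction
    reaches the quorum.
    """
    if not node_hashes:
        raise ValueError("at least one verifier hash is required")
    agreeing = sum(hamming(claimed_hash, h) <= max_distance for h in node_hashes)
    return agreeing / len(node_hashes) >= quorum


claimed = 0b1011001110001111

# Three nodes reproduce the output (with tiny hash drift), one does not.
mostly_honest = [
    claimed,
    claimed ^ 0b1,
    claimed ^ 0b11,
    0b0100110001110000,
]
# Only one of three nodes reproduces the output.
mostly_disagreeing = [claimed, 0b0100110001110000, 0b0100110001110001]

print(verify_claim(claimed, mostly_honest))
print(verify_claim(claimed, mostly_disagreeing))
```

A tolerance on the hash distance lets the network absorb small residual non-determinism, while the quorum limits the influence of any single faulty or dishonest node.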

Models used: Open source diffusion models, large language models

Data used: Artificially generated data samples from various generative AI models

Potential Impact

The approach is relevant to AI development and deployment platforms, companies that rely on generative AI for content creation, and AI verification service providers.

