Authors: Flavio Martinelli, Berfin Simsek, Wulfram Gerstner, Johanni Brea

Published on: April 25, 2023

Impact Score: 8.12

arXiv ID: 2304.12794

Summary

- What is new: The ‘Expand-and-Cluster’ method recovers the weights and layer sizes of a target neural network from its input-output mapping alone, despite the symmetries that make this problem look ill-posed.

- Why this is important: Identifying a network's parameters purely from its input-output mapping is hard because permutation, overparameterisation and activation-function symmetries let many different weight settings implement the same function.

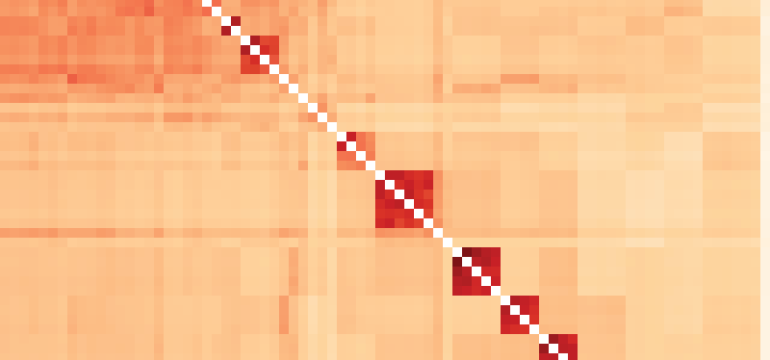

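- What the research proposes: A two-phase ‘Expand-and-Cluster’ method. First, several overparameterised (‘expanded’) student networks are trained to imitate the target network; then a clustering procedure identifies the weight vectors shared across students, which correspond to the target network's weight vectors.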

- Results: Weights and layer sizes of shallow and deep networks were recovered with less than 10% overhead in the number of neurons per layer, and an ‘ease-of-identifiability’ analysis was carried out on 150 synthetic problems.
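To make the three symmetries concrete, the following NumPy sketch (our own illustration, not code from the paper) builds a small one-hidden-layer tanh network and checks that three different parameter changes leave its input-output mapping unchanged. The layer sizes, the tanh activation and the 30/70 split of the duplicated neuron's outgoing weight are arbitrary choices made for the example.

```python
import numpy as np

def mlp(x, W, a):
    """One-hidden-layer network f(x) = a . tanh(W x) for a batch x of shape (n, d)."""
    return np.tanh(x @ W.T) @ a

rng = np.random.default_rng(0)
d, h = 3, 4
W = rng.normal(size=(h, d))    # incoming weight vectors, one row per hidden neuron
a = rng.normal(size=h)         # outgoing weights
x = rng.normal(size=(100, d))  # probe inputs
y = mlp(x, W, a)

# 1. Permutation symmetry: reorder the hidden neurons consistently.
perm = rng.permutation(h)
y_perm = mlp(x, W[perm], a[perm])

# 2. Activation-function symmetry: tanh is odd, so flipping the sign of a
#    neuron's incoming and outgoing weights leaves its contribution unchanged.
signs = np.array([1.0, -1.0, 1.0, -1.0])
y_sign = mlp(x, W * signs[:, None], a * signs)

# 3. Overparameterisation symmetry: duplicate neuron 0 and split its
#    outgoing weight between the two copies (30% / 70%).
W_big = np.vstack([W, W[:1]])
a_big = np.concatenate([a, [0.7 * a[0]]])
a_big[0] = 0.3 * a[0]
y_big = mlp(x, W_big, a_big)

print(np.allclose(y, y_perm), np.allclose(y, y_sign), np.allclose(y, y_big))
# prints: True True True
```

Since all four parameter settings produce the same outputs, probing the function alone can at best determine the weights up to neuron order, sign (for an odd activation like tanh) and redundant neuron copies.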

Technical Details

Technological frameworks used: not specified

Methods and models used: the Expand-and-Cluster method; shallow and deep neural networks

Data used: 150 synthetic problems
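The clustering phase can be illustrated on a toy synthetic problem. The sketch below is a hypothetical stand-in, not the authors' procedure: instead of training students, it simulates five overparameterised "students" that each contain the three target incoming weight vectors (up to sign, small noise and neuron order) plus two spurious neurons, then groups the pooled vectors with a simple greedy rule. The function `cluster_weight_vectors` and its parameters `cos_threshold` and `min_fraction` are illustrative names and values, and treating sign as the only remaining ambiguity assumes an odd activation such as tanh.

```python
import numpy as np

def cluster_weight_vectors(student_weights, cos_threshold=0.95, min_fraction=0.5):
    """Hypothetical greedy clustering of incoming weight vectors pooled from students.

    student_weights: list of arrays, one (width_s, d) array per student.
    Returns an array of cluster centroids, one row per recovered neuron.
    Vectors are grouped by |cosine similarity| and sign-aligned before
    averaging, i.e. sign is treated as a symmetry (odd activation assumed).
    """
    vecs = np.vstack(student_weights)
    owner = np.concatenate([np.full(len(w), s) for s, w in enumerate(student_weights)])
    units = vecs / np.linalg.norm(vecs, axis=1, keepdims=True)
    unassigned = np.ones(len(vecs), dtype=bool)
    centroids = []
    for i in range(len(vecs)):
        if not unassigned[i]:
            continue
        cos = units @ units[i]
        members = unassigned & (np.abs(cos) > cos_threshold)
        unassigned &= ~members
        # Keep only clusters that enough distinct students agree on;
        # a spurious neuron learned by a single student is discarded.
        if len(np.unique(owner[members])) >= min_fraction * len(student_weights):
            aligned = vecs[members] * np.sign(cos[members])[:, None]
            centroids.append(aligned.mean(axis=0))
    return np.array(centroids)

# Hypothetical synthetic problem: students are simulated, not trained.
rng = np.random.default_rng(1)
d, k, n_students, extra = 10, 3, 5, 2
Q, _ = np.linalg.qr(rng.normal(size=(d, k)))
target = Q.T * np.array([1.0, 2.0, 0.5])[:, None]  # k orthogonal target vectors

students = []
for _ in range(n_students):
    signs = rng.choice([-1.0, 1.0], size=(k, 1))
    copies = signs * (target + 0.01 * rng.normal(size=(k, d)))  # target neurons up to sign and noise
    spurious = rng.normal(size=(extra, d))                      # extra neurons from overparameterisation
    w = np.vstack([copies, spurious])
    students.append(w[rng.permutation(k + extra)])              # arbitrary neuron order

recovered = cluster_weight_vectors(students)
overhead = (len(recovered) - k) / k  # relative layer-size overhead
print(len(recovered), overhead)
```

Clusters supported by at least half of the students are kept as recovered neurons; the last line reports the relative layer-size overhead, (recovered - target) / target, which is the kind of quantity behind the "less than 10% overhead" result.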

Potential Impact

Most relevant to AI development and analytics services, and to companies that rely on proprietary neural network architectures.

Want to implement this idea in a business?

We have generated a startup concept here: NeuraMatch.
