Authors: Yihan Wang, Yifan Zhu, Xiao-Shan Gao

Published on: February 06, 2024

Impact Score: 8.22

arXiv ID: arXiv:2402.04010

Summary

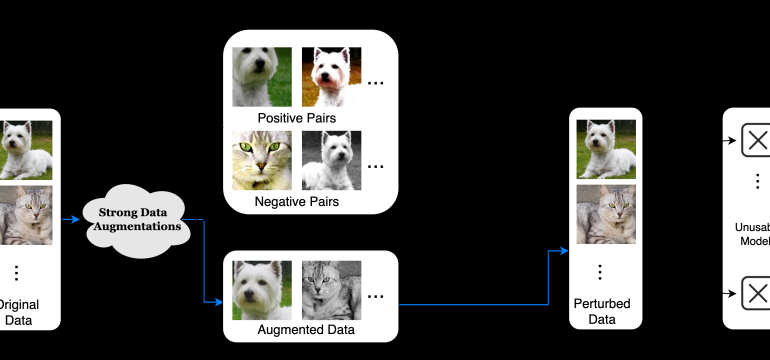

- What is new: Introduces two availability attacks, AUE and AAP, that achieve state-of-the-art worst-case unlearnability against both Supervised Learning (SL) and Contrastive Learning (CL) algorithms while requiring less computation.

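- Why this is important: Most existing methods cannot make data unlearnable for both SL and CL algorithms at once. A data collector whose SL training fails on protected data can switch to CL to bypass the protection, which leaves the data at risk.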

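- What the research proposes: Instead of relying on contrastive error minimization, as recent methods do, AUE and AAP apply contrastive-like data augmentations inside supervised error-minimization (AUE) and error-maximization (AAP) frameworks. The resulting imperceptible noise prevents both SL and CL algorithms from training usable models.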
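To make the mechanism concrete, here is a minimal NumPy sketch of crafting bounded noise whose loss is averaged over random augmentations. It is not the authors' implementation. It assumes a fixed linear softmax surrogate instead of a deep network, uses hypothetical helper names (`random_augment`, `craft_noise`), picks illustrative augmentations (horizontal flip, brightness scaling, and a circular shift standing in for random cropping), and assumes an L∞ budget of 8/255 with sign-gradient steps. Setting `minimize=True` searches for error-minimizing noise in the spirit of AUE; `minimize=False` searches for error-maximizing noise in the spirit of AAP. The real attacks also involve model training schedules (error-minimizing noise, for instance, is usually optimized together with model updates) that this sketch omits.

```python
import numpy as np


def softmax(z):
    # Numerically stable row-wise softmax.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def random_augment(x, rng, max_shift=2):
    """Hypothetical contrastive-style augmentation for a batch of (N, H, W) images:
    random horizontal flip, random brightness scaling, and a random circular shift
    (a cheap stand-in for random cropping). Returns the augmented batch and a
    function mapping a gradient w.r.t. the augmented batch back to x."""
    n = x.shape[0]
    flip = rng.random(n) < 0.5
    scale = rng.uniform(0.6, 1.4, size=n)
    shift = rng.integers(-max_shift, max_shift + 1, size=n)

    xa = np.where(flip[:, None, None], x[:, :, ::-1], x)
    xa = xa * scale[:, None, None]
    xa = np.stack([np.roll(img, s, axis=1) for img, s in zip(xa, shift)])

    def backward(g):
        # All three operations are linear, so undo them in reverse order.
        g = np.stack([np.roll(gi, -s, axis=1) for gi, s in zip(g, shift)])
        g = g * scale[:, None, None]
        return np.where(flip[:, None, None], g[:, :, ::-1], g)

    return xa, backward


def craft_noise(x, y, W, b, minimize=True, eps=8 / 255, step=2 / 255,
                iters=10, n_aug=4, seed=0):
    """Sign-gradient (PGD-style) search for a perturbation with |delta| <= eps.
    minimize=True  -> error-minimizing noise (AUE-style, assumed setup)
    minimize=False -> error-maximizing noise (AAP-style, assumed setup)
    The loss is the cross-entropy of a fixed linear surrogate
    (logits = x_flat @ W + b), averaged over n_aug random augmentations."""
    rng = np.random.default_rng(seed)
    n, h, w = x.shape
    onehot = np.eye(W.shape[1])[y]
    delta = np.zeros_like(x)
    direction = -1.0 if minimize else 1.0
    for _ in range(iters):
        grad = np.zeros_like(x)
        for _ in range(n_aug):
            xa, backward = random_augment(x + delta, rng)
            p = softmax(xa.reshape(n, -1) @ W + b)
            # d(cross-entropy)/d(input) for a linear softmax model is (p - y) W^T.
            g = ((p - onehot) @ W.T).reshape(n, h, w)
            grad += backward(g)
        delta = np.clip(delta + direction * step * np.sign(grad), -eps, eps)
        delta = np.clip(x + delta, 0.0, 1.0) - x  # keep pixels in [0, 1]
    return delta
```

In this sketch, averaging the gradient over several augmented views is the part meant to mirror the contrastive-like augmentations; removing the inner loop reduces it to plain supervised error-minimizing or error-maximizing noise.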

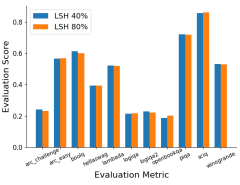

- Results: Experiments show that AUE and AAP achieve state-of-the-art worst-case unlearnability across SL and CL algorithms while consuming less computation, suggesting they are practical for real-world data protection.
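Worst-case unlearnability can be read as the protection that survives the data collector's best choice of algorithm. The sketch below assumes it is measured by the highest clean-test accuracy reached by any evaluated SL or CL algorithm trained on the protected data, so a lower value is better; the function name and input format are hypothetical.

```python
def worst_case_accuracy(accuracies):
    """Return (algorithm, accuracy) for the algorithm that learns the most from
    the protected data. `accuracies` maps algorithm names (SL or CL) to the
    clean-test accuracy of the model each one trained on the protected data.
    A lower returned accuracy means stronger worst-case unlearnability."""
    if not accuracies:
        raise ValueError("need at least one algorithm")
    name = max(accuracies, key=accuracies.get)
    return name, accuracies[name]
```

A protection is only as strong as the value this returns, because the collector is free to pick whichever algorithm works best on the released data.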

Technical Details

Technological frameworks used: Supervised error minimization and maximization combined with contrastive-like data augmentations

Methods proposed: AUE (Augmented Unlearnable Examples) and AAP (Augmented Adversarial Poisoning)

Data protected: Private data and commercial datasets, perturbed with imperceptible noise before release

Potential Impact

Data protection services and cybersecurity firms could use such attacks to protect data before release, while industries that rely on high-volume data collection and analysis may need to adapt to data that resists unauthorized training.

Want to implement this idea in a business?

We have generated a startup concept here: DataGuardAI.
