Authors: Natasha Butt, Blazej Manczak, Auke Wiggers, Corrado Rainone, David Zhang, Michaël Defferrard, Taco Cohen

Published on: February 07, 2024

Impact Score: 8.22

arXiv ID: 2402.04858

Summary

- What is new: Code Iteration (CodeIt), a new method for language model self-improvement.

- Why this is important: Large language models struggle with benchmarks of general intelligence such as the Abstraction and Reasoning Corpus (ARC).

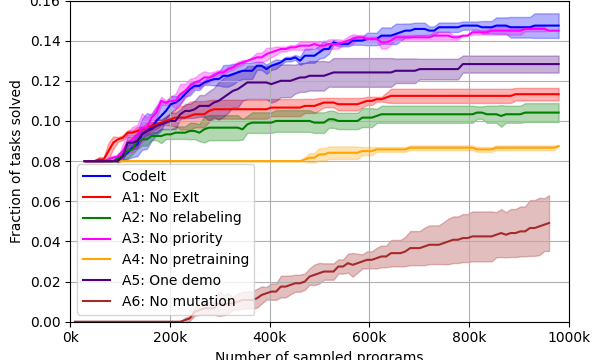

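- What the research proposes: CodeIt alternates between (1) program sampling with hindsight relabeling and (2) learning from prioritized experience replay. Hindsight relabeling replaces a task's target outputs with the outputs a sampled program actually produces, so even programs that fail the original task become valid training examples.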

- Results: CodeIt solves 15% of the ARC evaluation tasks, achieving state-of-the-art performance and outperforming existing neural and symbolic baselines.
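The sampling, relabeling and replay loop described above can be sketched as a toy in Python. This is a minimal sketch under loud assumptions, not the paper's implementation: a hypothetical three-primitive grid DSL stands in for a real ARC DSL, a random sampler stands in for the language-model policy, and the priority weights (1.0 for programs that solve the real task, 0.1 otherwise) are illustrative. All names (`hindsight_relabel`, `PrioritizedReplay`, `codeit_iteration`) are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

Grid = Tuple[Tuple[int, ...], ...]
Program = List[str]
Task = Dict[str, List[Grid]]

# Hypothetical toy DSL of grid primitives (stand-in for a real ARC DSL).
def flip_h(g: Grid) -> Grid:
    return tuple(tuple(reversed(row)) for row in g)

def flip_v(g: Grid) -> Grid:
    return tuple(reversed(g))

def transpose(g: Grid) -> Grid:
    return tuple(zip(*g))

PRIMITIVES: Dict[str, Callable[[Grid], Grid]] = {
    "flip_h": flip_h, "flip_v": flip_v, "transpose": transpose,
}

def run(program: Program, g: Grid) -> Grid:
    """Apply each primitive of the program in order."""
    for name in program:
        g = PRIMITIVES[name](g)
    return g

def hindsight_relabel(program: Program, inputs: List[Grid]) -> Task:
    """Replace the targets with what the program actually produced,
    so every executed program yields a correct (task, program) pair."""
    return {"inputs": inputs, "outputs": [run(program, x) for x in inputs]}

class PrioritizedReplay:
    """Buffer that samples entries in proportion to a priority weight.
    Assumed weights: 1.0 if the program solves the real task, else 0.1."""

    def __init__(self, seed: int = 0) -> None:
        self.items: List[Tuple[Task, Program, float]] = []
        self.rng = random.Random(seed)

    def add(self, task: Task, program: Program, solves_real_task: bool) -> None:
        self.items.append((task, program, 1.0 if solves_real_task else 0.1))

    def sample(self, k: int) -> List[Tuple[Task, Program, float]]:
        weights = [w for _, _, w in self.items]
        return self.rng.choices(self.items, weights=weights, k=k)

def sample_program(rng: random.Random, max_len: int = 3) -> Program:
    # Stand-in for sampling a program from the language-model policy.
    return [rng.choice(sorted(PRIMITIVES)) for _ in range(rng.randint(1, max_len))]

def codeit_iteration(tasks: Dict[str, Task], buffer: PrioritizedReplay,
                     rng: random.Random, batch_size: int = 8):
    """One sampling/relabeling phase followed by one replay phase."""
    solved = set()
    for tid, task in tasks.items():
        program = sample_program(rng)
        relabeled = hindsight_relabel(program, task["inputs"])
        hit = relabeled["outputs"] == task["outputs"]
        if hit:
            solved.add(tid)
        buffer.add(relabeled, program, hit)
    # Learning phase: a real system would fine-tune the policy on this batch.
    batch = buffer.sample(batch_size)
    return solved, batch

# Usage: one toy task whose answer is a horizontal mirror.
rng = random.Random(0)
tasks = {"mirror": {"inputs": [((1, 2), (3, 4))], "outputs": [((2, 1), (4, 3))]}}
buffer = PrioritizedReplay(seed=0)
for _ in range(20):
    solved, batch = codeit_iteration(tasks, buffer, rng)
```

Every buffer entry is a correct (task, program) pair by construction, which is the point of hindsight relabeling; the priority weights only decide how often entries that solve real tasks are replayed.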

Technical Details

Technological frameworks used: CodeIt with hindsight relabeling, prioritized experience replay, and data augmentation

Models used: Large language models

Data used: Abstraction and Reasoning Corpus (ARC)
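The summary does not say which data augmentations CodeIt applies. The sketch below assumes two transformations commonly used on ARC grids, a 90-degree rotation and a color permutation, applied identically to every input and output grid of a task so the task stays consistent. It is illustrative only, and the names `rotate90`, `recolor` and `augment_task` are hypothetical. It augments grids only; if a program is paired with the task, that program would also need a matching change.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Grid = Tuple[Tuple[int, ...], ...]
Task = Dict[str, List[Grid]]

def rotate90(g: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise (reverse rows, then transpose)."""
    return tuple(zip(*g[::-1]))

def recolor(g: Grid, perm: Sequence[int]) -> Grid:
    """Map each color c to perm[c]; ARC grids use colors 0-9."""
    return tuple(tuple(perm[c] for c in row) for row in g)

def augment_task(task: Task, fn: Callable[[Grid], Grid]) -> Task:
    """Apply the same transform to every input and output grid."""
    return {key: [fn(g) for g in grids] for key, grids in task.items()}

# Usage on a toy task.
task = {"inputs": [((0, 1), (2, 3))], "outputs": [((1, 0), (3, 2))]}
rotated = augment_task(task, rotate90)
perm = [0, 5, 2, 3, 4, 1, 6, 7, 8, 9]  # swaps colors 1 and 5
recolored = augment_task(task, lambda g: recolor(g, perm))
```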

Potential Impact

Educational technology, artificial intelligence research companies, and industries relying on complex problem-solving automation.

Want to implement this idea in a business?

We have generated a startup concept here: IntellectCode.
