Authors: Wei Huang, Yangdong Liu, Haotong Qin, Ying Li, Shiming Zhang, Xianglong Liu, Michele Magno, Xiaojuan Qi

Published on: February 06, 2024

Impact Score: 8.3

arXiv code: arXiv:2402.04291

Summary

- What is new: BiLLM is a 1-bit post-training quantization scheme designed specifically for pretrained LLMs. It aims to keep accuracy high while sharply reducing model size.

- Why this is important: Pretrained large language models (LLMs) are resource-intensive, requiring substantial memory and computational power.

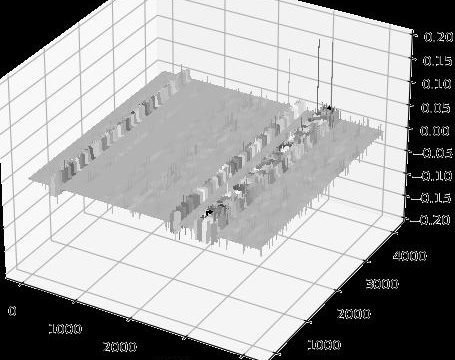

- What the research proposes: BiLLM first identifies the salient weights and binarizes them selectively, using a binary residual approximation to minimize compression loss. It then splits the non-salient weights into groups so that each group can be binarized accurately. Together, these steps reduce model size while preserving accuracy.

- Results: BiLLM achieves high-accuracy inference with 1.08-bit weights across various LLMs, outperforming existing quantization methods by a significant margin. It also binarizes an LLM with 7 billion weights within 0.5 hours on a single GPU.
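
To make the salient-weight step concrete, here is a minimal sketch of binary residual approximation in plain Python. It is an illustration under simplifying assumptions, not the authors' implementation: it works on a flat list of weights, uses the mean absolute value as the scale, and skips how BiLLM decides which weights are salient. All function names are hypothetical.

```python
def binarize(w):
    """One-bit approximation w ~ alpha * sign(w), with alpha = mean(|w|)."""
    alpha = sum(abs(x) for x in w) / len(w)
    signs = [1.0 if x >= 0 else -1.0 for x in w]
    return alpha, signs


def residual_binarize(w):
    """Binarize w, then binarize the leftover error and add both terms."""
    a1, b1 = binarize(w)
    # Residual: what the first 1-bit pass failed to capture.
    residual = [x - a1 * s for x, s in zip(w, b1)]
    a2, b2 = binarize(residual)
    return [a1 * s1 + a2 * s2 for s1, s2 in zip(b1, b2)]


def squared_error(w, approx):
    return sum((x - y) ** 2 for x, y in zip(w, approx))


# Toy weights (made up for illustration, not taken from any model).
weights = [0.9, -0.1, 0.4, -1.3, 0.05, 2.0]

alpha, signs = binarize(weights)
one_pass = [alpha * s for s in signs]
two_pass = residual_binarize(weights)

err_one = squared_error(weights, one_pass)
err_two = squared_error(weights, two_pass)
print(f"one pass error: {err_one:.4f}, residual error: {err_two:.4f}")
```

On this toy vector the second pass lowers the squared error, at the cost of a second sign bit and a second scale for the weights it is applied to. That trade-off is why such a scheme would be reserved for a small set of important weights.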

Technical Details

Technological frameworks used: Not specified

Models used: BiLLM combines binary residual approximation with an optimal splitting search based on the weight distribution of LLMs.
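
The splitting search can be pictured with a small sketch. The version below is an assumption-laden illustration rather than the paper's algorithm: it scans a fixed grid of candidate break points on the weight magnitude, binarizes the weights below and above each break point with separate scales, and keeps the break point with the lowest total squared error. The grid size, the synthetic Gaussian weights, and the function names are all hypothetical.

```python
import random


def group_error(w):
    """Squared error of approximating w by alpha * sign(w), alpha = mean(|w|)."""
    if not w:
        return 0.0
    alpha = sum(abs(x) for x in w) / len(w)
    # (x - alpha * sign(x))^2 equals (|x| - alpha)^2 for every x.
    return sum((abs(x) - alpha) ** 2 for x in w)


def search_split(w, num_candidates=50):
    """Grid-search a magnitude break point that minimizes the two-group error."""
    max_abs = max(abs(x) for x in w)
    best_p, best_err = None, float("inf")
    for i in range(1, num_candidates):
        p = max_abs * i / num_candidates
        inner = [x for x in w if abs(x) <= p]  # small-magnitude group
        outer = [x for x in w if abs(x) > p]   # large-magnitude group
        err = group_error(inner) + group_error(outer)
        if err < best_err:
            best_p, best_err = p, err
    return best_p, best_err


# Synthetic bell-shaped weights standing in for non-salient LLM weights.
random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(1000)]

single_err = group_error(weights)
split_point, split_err = search_split(weights)
print(f"single group: {single_err:.2f}, split at {split_point:.3f}: {split_err:.2f}")
```

Giving each group its own scale can never do worse than a single shared scale, because each group mean is the best scale for its own members. The search only decides where the boundary pays off most.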

Data used: Not specified

Potential Impact

Tech companies leveraging LLMs for NLP tasks could see reduced operational costs and increased efficiency; cloud service providers may need to adjust pricing models due to the decreased computational requirements.
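
A rough back-of-the-envelope calculation shows where the savings would come from. The sketch below uses the 7 billion weight count and the 1.08-bit figure from the summary above. The 16-bit baseline is an assumption, and the estimate ignores scales, group metadata, activations, and any layers left unquantized, so treat it as an order-of-magnitude guide only.

```python
def weight_storage_gb(num_weights, bits_per_weight):
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_weights * bits_per_weight / 8 / 1e9


NUM_WEIGHTS = 7e9  # 7 billion weights, as in the summary

baseline_gb = weight_storage_gb(NUM_WEIGHTS, 16)     # assumed 16-bit baseline
binarized_gb = weight_storage_gb(NUM_WEIGHTS, 1.08)  # 1.08-bit weights
ratio = baseline_gb / binarized_gb

print(f"16-bit: {baseline_gb:.2f} GB, 1.08-bit: {binarized_gb:.3f} GB, ratio: {ratio:.1f}x")
```

Under these assumptions the weights shrink from about 14 GB to just under 1 GB, roughly a 15x reduction in weight storage.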

Want to implement this idea in a business?

We have generated a startup concept here: LiteNLP.
