Authors: Michael De’Shazer

Published on: February 06, 2024

Impact Score: 8.12

arXiv code: arXiv:2402.04140

Summary

- What is new: A novel approach that uses Advanced Language Models (ALMs) to analyze and assess biases in court judgments from five countries, within a human-AI collaborative framework aimed at a more consistent application of laws.

- Why this is important: It addresses the challenge of identifying and mitigating human biases in legal analyses and of ensuring that laws are applied consistently across jurisdictions.

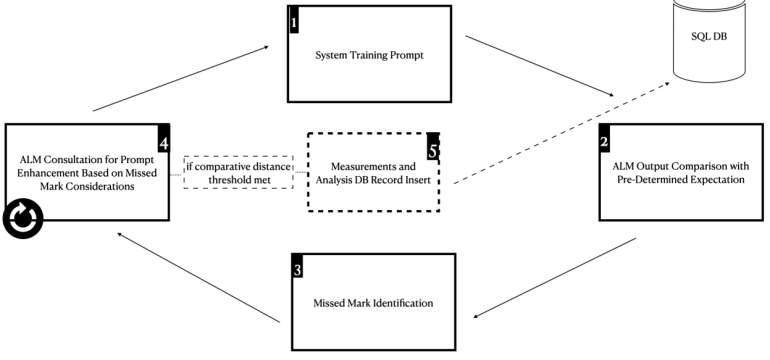

- What the research proposes: Two applications, SHIRLEY and SAM, for detecting biases and inconsistencies in judgments, and a third, SARA, for semi-autonomous arbitration that critically evaluates these findings through AI and human collaboration.

- Results: The system successfully identified logical inconsistencies and biases across different legal systems and supported a nuanced, coherent argumentation process for safeguarding the integrity of how laws are applied.
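
The flow described above (detection, then arbitration, then human sign-off) can be illustrated with a minimal sketch. This is not the paper's implementation: the prompts, the `Finding` and `ArbitrationRecord` types, the `run_pipeline` function, and the exact split of work between SHIRLEY and SAM are all assumptions made for illustration. The language model is passed in as a plain function, so no particular vendor API is implied.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical: any function that maps a prompt string to model output text.
ModelFn = Callable[[str], str]


@dataclass
class Finding:
    source: str  # name of the application that produced the finding
    claim: str   # raw text returned by the model


@dataclass
class ArbitrationRecord:
    judgment_id: str
    findings: List[Finding]
    arbitration: str
    human_approved: bool = False


def run_pipeline(
    judgment_id: str,
    judgment_text: str,
    model: ModelFn,
    human_review: Callable[[ArbitrationRecord], bool],
) -> ArbitrationRecord:
    """Run detection, arbitration, and human review for one judgment."""
    # Assumed role split: SHIRLEY looks for bias, SAM for inconsistencies.
    bias = Finding(
        "SHIRLEY",
        model(f"List possible biases in this judgment:\n{judgment_text}"),
    )
    inconsistency = Finding(
        "SAM",
        model(f"List logical inconsistencies in this judgment:\n{judgment_text}"),
    )
    findings = [bias, inconsistency]

    # SARA-style step: the model critically evaluates both sets of findings.
    summary = "\n".join(f"[{f.source}] {f.claim}" for f in findings)
    arbitration = model(f"Critically evaluate these findings:\n{summary}")

    record = ArbitrationRecord(judgment_id, findings, arbitration)
    # Human-in-the-loop: a reviewer accepts or rejects the arbitration.
    record.human_approved = human_review(record)
    return record


if __name__ == "__main__":
    # Stub model and reviewer so the sketch runs without any external service.
    result = run_pipeline(
        "case-1",
        "The court held that ...",
        model=lambda prompt: f"(model reply to {len(prompt)} chars)",
        human_review=lambda record: True,
    )
    print(result.arbitration)
```

Keeping the model behind a plain callable makes the human review step explicit and lets the same flow be exercised with stubs before any real model is attached.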

Technical Details

Technological frameworks used: Grounded Theory-based research design, human-AI collaborative framework

Models used: Advanced Language Models (ALMs) based on OpenAI’s GPT technology

Data used: Court judgments from the United States, the United Kingdom, Rwanda, Sweden, and Hong Kong

Potential Impact

Legal analytics firms, law firms, legal departments in corporations and governmental bodies, AI technology providers in the legal sector

