Authors: Yufei Wang, Zhanyi Sun, Jesse Zhang, Zhou Xian, Erdem Biyik, David Held, Zackory Erickson

Published on: February 06, 2024

Impact Score: 8.22

arXiv ID: arXiv:2402.03681

Summary

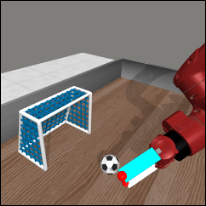

- What is new: RL-VLM-F, a method that automatically generates reward functions for RL agents using only a text description of the task goal and the agent's visual observations.

- Why this is important: Designing effective reward functions for reinforcement learning typically requires extensive human effort and trial and error.

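- What the research proposes: RL-VLM-F queries a vision language foundation model (VLM) for preferences over pairs of the agent's image observations, judged against the text description of the task goal, and then learns a reward function from these preference labels instead of asking the VLM to output raw reward scores.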

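- Results: RL-VLM-F produces effective rewards and policies across various domains, including classic control and manipulation of rigid, articulated, and deformable objects, without human supervision. It outperforms prior methods that use large pretrained models for reward generation under the same assumptions.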
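The core idea of learning a reward from pairwise preferences instead of raw scores can be illustrated with a small toy example. This sketch rests on several assumptions of our own. Each image observation is taken to be already reduced to a small feature vector, and the reward is assumed linear in those features, whereas the paper learns a reward model over images. The labeler `mock_vlm_preference` is a hypothetical stand-in for sending two images plus the task text to a VLM; here it simply consults a hidden weight vector `TRUE_W`. The reward is fit with the Bradley-Terry formulation that is common in preference-based RL, where P(x0 preferred over x1) = sigmoid(r(x0) - r(x1)). All names here are illustrative, not from the paper.

```python
import math
import random
from typing import List, Sequence, Tuple

Features = Sequence[float]
Pair = Tuple[Features, Features]

# Hypothetical hidden "ground truth" used only by the mock labeler below.
TRUE_W = (1.0, -2.0)


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def reward(w: Sequence[float], x: Features) -> float:
    """Linear reward r_w(x) = w . x (a simplifying assumption, not the paper's model)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mock_vlm_preference(x0: Features, x1: Features) -> float:
    """Hypothetical stand-in for a VLM query.

    A real system would send both images plus the task text to a VLM and parse
    its answer; here we return 1.0 if x0 scores higher under TRUE_W, else 0.0.
    """
    return 1.0 if reward(TRUE_W, x0) > reward(TRUE_W, x1) else 0.0


def train_reward(pairs: List[Pair], labels: List[float], dim: int,
                 lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Fit w by SGD on the Bradley-Terry cross-entropy loss.

    P(x0 preferred over x1) = sigmoid(r_w(x0) - r_w(x1)); the gradient of the
    loss for one labelled pair is (P - y) * (x0 - x1).
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for (x0, x1), y in zip(pairs, labels):
            p = sigmoid(reward(w, x0) - reward(w, x1))
            g = p - y
            w = [wi - lr * g * (a - b) for wi, a, b in zip(w, x0, x1)]
    return w


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy 2-D "observations" standing in for image features.
    obs = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(50)]
    pairs = [(rng.choice(obs), rng.choice(obs)) for _ in range(200)]
    labels = [mock_vlm_preference(a, b) for a, b in pairs]
    w = train_reward(pairs, labels, dim=2)
    print("learned reward weights:", w)
```

The labeler only ever compares two observations and never emits an absolute reward value; the learned model recovers a usable reward scale from those comparisons. In a real pipeline, the mock would be replaced by an actual VLM call and the linear model by an image-based network, while the loss stays the same.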

Technical Details

Technological frameworks used: Reinforcement Learning (RL), Vision Language Foundation Models (VLMs)

Models used: Not specified

Data used: Image observations, text descriptions of task goals

Potential Impact

Gaming, Robotics, Automation software, Educational tech, AI development platforms

Want to implement this idea in a business?

We have generated a startup concept here: AutoReinforce.
