Authors: Sangyu Han, Yearim Kim, Nojun Kwak

Published on: January 25, 2024

Impact Score: 8.3

arXiv ID: 2402.03348

Summary

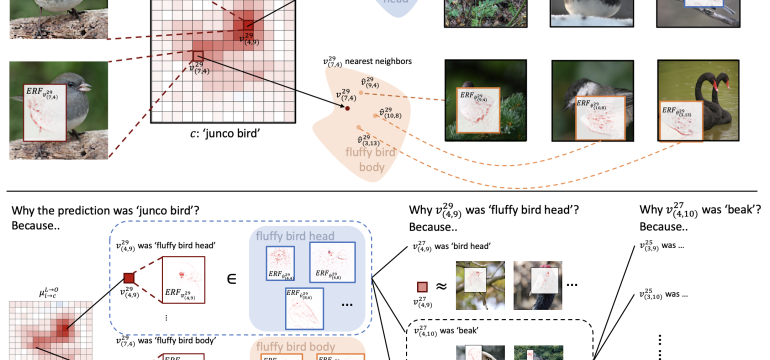

- What is new: SRD (Sharing Ratio Decomposition), an explainable-AI method that analyzes a model from a vector perspective rather than neuron by neuron, and highlights the role of inactive neurons through Activation-Pattern-Only Prediction (APOP).

- Why this is important: Existing AI explanation methods do not accurately reflect the model's decision-making process and are vulnerable to adversarial attacks.

- What the research proposes: SRD, a new method that faithfully reflects the model's inference process, accounts for both active and inactive neurons, and improves robustness.

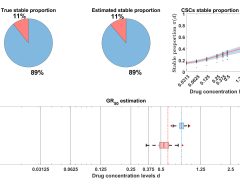

- Results: SRD produces significantly more robust explanations by accurately incorporating nonlinear interactions between filters, and it enables detailed analysis through high-resolution Effective Receptive Fields (ERFs).
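To make the idea of a "sharing ratio" more concrete, here is a minimal Python sketch built on simplifying assumptions of our own; it is not the authors' implementation. An output pointwise feature vector y is assumed to be a bias-free linear combination y = W_1 v_1 + ... + W_n v_n of input vectors, and each input's share is assumed to be the projection of its contribution W_i v_i onto y, divided by ||y||^2. The helper names `sharing_ratios` and `redistribute` are hypothetical, and the paper's exact definition (including how it treats biases and nonlinearities) may differ.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows: output channels, columns: input channels


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product m @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def sharing_ratios(weights: List[Matrix], inputs: List[Vector]) -> List[float]:
    """Share of each input PFV in the output PFV y = W_1 v_1 + ... + W_n v_n.

    Assumption (simplified, bias-free): share_i = <W_i v_i, y> / ||y||^2.
    Since the contributions W_i v_i sum to y, the shares sum to 1.
    """
    contributions = [matvec(w, v) for w, v in zip(weights, inputs)]
    output = [sum(parts) for parts in zip(*contributions)]
    norm_sq = dot(output, output)
    if norm_sq == 0.0:
        return [0.0] * len(inputs)  # a zero output vector has nothing to share
    return [dot(c, output) / norm_sq for c in contributions]


def redistribute(relevance: float, ratios: List[float]) -> List[float]:
    """Pass an output PFV's relevance back to its inputs in proportion to their shares."""
    return [relevance * r for r in ratios]


# Two input PFVs feeding one output PFV through identity weights.
identity = [[1.0, 0.0], [0.0, 1.0]]
ratios = sharing_ratios([identity, identity], [[1.0, 0.0], [1.0, 1.0]])
print(ratios)                     # [0.4, 0.6]
print(redistribute(1.0, ratios))  # relevance passed back to each input
```

Because the contributions add up to y, the shares always sum to 1, so relevance is conserved as it moves backward layer by layer. A share can also be negative when an input's contribution points away from the output vector.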
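The notion behind Activation-Pattern-Only Prediction can be illustrated on a toy network. The sketch below is a hypothetical setup, not the paper's experimental protocol: it records the ReLU activation pattern of a two-layer network on an input x, then evaluates the network on a different input (here all zeros) with the gates forced to that recorded pattern. The function names and toy weights are illustrative assumptions.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def affine(w: Matrix, b: Vector, x: Vector) -> Vector:
    """Compute w @ x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def activation_pattern(w1: Matrix, b1: Vector, x: Vector) -> List[bool]:
    """ReLU on/off pattern of the hidden layer for input x (True = active)."""
    return [h > 0.0 for h in affine(w1, b1, x)]


def forward_with_pattern(w1: Matrix, b1: Vector, w2: Matrix, b2: Vector,
                         x: Vector, pattern: List[bool]) -> Vector:
    """Two-layer ReLU MLP whose gates are fixed to `pattern` instead of recomputed from x.

    With the gates fixed, an "on" neuron passes its pre-activation through
    unchanged (even if negative) and an "off" neuron outputs 0.
    """
    hidden = [h if on else 0.0 for h, on in zip(affine(w1, b1, x), pattern)]
    return affine(w2, b2, hidden)


def forward(w1: Matrix, b1: Vector, w2: Matrix, b2: Vector, x: Vector) -> Vector:
    """Ordinary forward pass: the gates come from x itself."""
    return forward_with_pattern(w1, b1, w2, b2, x, activation_pattern(w1, b1, x))


# Hypothetical toy weights.
w1, b1 = [[1.0, -1.0], [0.5, 0.5]], [0.1, -0.2]
w2, b2 = [[1.0, 2.0], [-1.0, 1.0]], [0.0, 0.0]

x = [1.0, 2.0]
pattern = activation_pattern(w1, b1, x)  # [False, True], recorded from the real input
original = forward(w1, b1, w2, b2, x)
pattern_only = forward_with_pattern(w1, b1, w2, b2, [0.0, 0.0], pattern)
print(pattern, original, pattern_only)
```

With the pattern frozen, the network reduces to a fixed linear map, so whatever it outputs on the substitute input is driven by which neurons were on or off for the original input, including the inactive ones. Whether that output still yields the original prediction is what an APOP-style experiment measures; this toy example makes no claim either way.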

Technical Details

Research area: Explainable AI (XAI)

Method: SRD (Sharing Ratio Decomposition)

Unit of analysis: Pointwise Feature Vector (PFV)
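A pointwise feature vector can be read directly off a convolutional feature map: for a map with C channels, the PFV at a spatial position is the C-dimensional vector of activations at that position across all channels. The short Python sketch below shows this regrouping; the helper names and the nested-list layout of [channel][row][col] are assumptions made for illustration.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def pointwise_feature_vector(fmap: FeatureMap, row: int, col: int) -> List[float]:
    """Return the C-dimensional vector of activations at one spatial position."""
    return [channel[row][col] for channel in fmap]


def all_pfvs(fmap: FeatureMap) -> List[List[List[float]]]:
    """Regroup a [C][H][W] feature map into an [H][W] grid of C-dimensional PFVs."""
    height, width = len(fmap[0]), len(fmap[0][0])
    return [[pointwise_feature_vector(fmap, r, c) for c in range(width)]
            for r in range(height)]


# Toy 2-channel, 2x2 feature map.
fmap = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0
    [[5.0, 6.0], [7.0, 8.0]],  # channel 1
]
print(pointwise_feature_vector(fmap, 1, 0))  # [3.0, 7.0]
```

Treating each spatial position as one vector, rather than treating each channel activation as an independent neuron, is what the summary calls the "vector perspective".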

Potential Impact

Potential beneficiaries include tech companies developing AI, cybersecurity firms, and sectors that rely on AI decision-making, such as finance, healthcare, and the automotive industry.

Want to implement this idea in a business?

We have generated a startup concept here: DeepInsightAI.
