Authors: Sungdong Kim, Minjoon Seo

Published on: February 02, 2024

Impact Score: 8.22

arXiv ID: arXiv:2402.03469

Summary

- What is new: A preference-free approach called Regularized Relevance Reward ($R^3$) that improves the alignment of LLMs with human values without requiring human preference datasets.

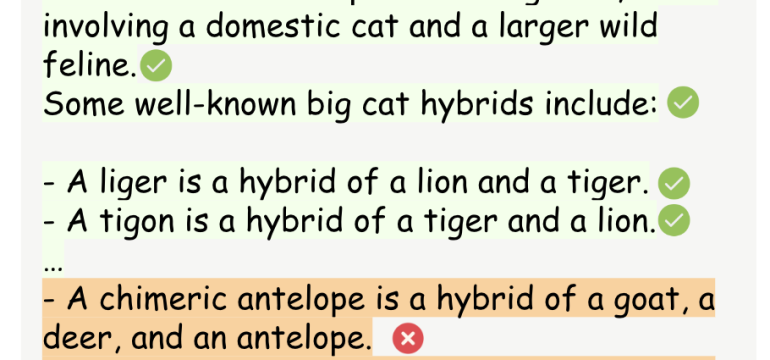

- Why this is important: Reward models trained on human preference datasets tend to favor longer, off-topic responses over shorter, relevant ones.

- What the research proposes: A new method, $R^3$, that combines several reward functions to score relevance rather than learned preference, aiming to align better with human values.

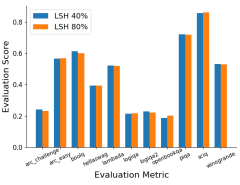

- Results: $R^3$ outperforms existing open-source reward models in aligning with human preferences and demonstrates generalizability across different model sizes and types without extra dataset costs.

Technical Details

Technological frameworks used: Reinforcement learning with Regularized Relevance Reward ($R^3$)

Models used: Large Language Models (LLMs), retriever models

Data used: Human preference datasets for benchmarking; applying $R^3$ requires no new datasets
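To make the idea of a relevance-based reward more concrete, here is a minimal sketch in Python. It is not the paper's definition of $R^3$: the summary above does not specify the individual reward functions, so everything here is an illustrative assumption. A bag-of-words cosine similarity stands in for a retriever model's relevance score, a simple repetition penalty stands in for the regularization, and the names `cosine_relevance`, `repetition_penalty`, `toy_reward`, and `penalty_weight` are hypothetical. A naive length-based scorer is included only to contrast with the length bias described in the summary.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_relevance(query, response):
    """Bag-of-words cosine similarity (toy stand-in for a retriever score)."""
    q = Counter(tokenize(query))
    r = Counter(tokenize(response))
    dot = sum(q[t] * r[t] for t in q)
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(
        sum(v * v for v in r.values())
    )
    return dot / norm if norm else 0.0


def repetition_penalty(response):
    """Fraction of tokens that repeat an earlier token (0.0 means no repeats)."""
    tokens = tokenize(response)
    if not tokens:
        return 0.0
    return 1.0 - len(set(tokens)) / len(tokens)


def toy_reward(query, response, penalty_weight=0.5):
    """Relevance minus a weighted repetition penalty (weight is an assumption)."""
    return cosine_relevance(query, response) - penalty_weight * repetition_penalty(
        response
    )


def length_biased_score(response):
    """Naive scorer that rewards length only, to illustrate length bias."""
    return len(tokenize(response))


query = "What is the capital of France?"
short_answer = "The capital of France is Paris."
long_answer = (
    "France has many great foods. Cheese, wine, bread, and pastries are popular, "
    "and tourists love the food, the wine, and the cheese."
)

for name, answer in [("short", short_answer), ("long", long_answer)]:
    print(name, round(toy_reward(query, answer), 3), length_biased_score(answer))
```

In this toy setup the length-only scorer prefers the long, off-topic answer, while the relevance-based score prefers the short answer that actually addresses the query. A real system would presumably replace the bag-of-words similarity with a trained retriever and tune how the component rewards are combined.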

Potential Impact

Companies that build or use chatbots or customer service AIs, and any organization doing large-scale natural language understanding and generation, could benefit. AI development platforms and services could be disrupted if $R^3$ becomes a new standard for model alignment.

Want to implement this idea in a business?

We have generated a startup concept here: RelevoAI.
