Authors: Sergio Calvo-Ordonez, Matthieu Meunier, Francesco Piatti, Yuantao Shi

Published on: February 05, 2024

Impact Score: 8.3

arXiv ID: arXiv:2402.03495

Summary

- What is new: Partially Stochastic Infinitely Deep Bayesian Neural Networks, which bring partial stochasticity into the infinite-depth setting to improve computational efficiency during both training and inference.

- Why this is important: Existing infinitely deep neural networks face limitations in computational efficiency and robustness.

- What the research proposes: A novel family of architectures blending partial stochasticity with infinitely deep neural networks to enhance efficiency and performance.

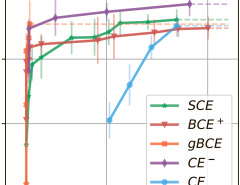

- Results: Empirical evaluations demonstrate improved performance and efficiency over traditional models.
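To make "partial stochasticity" in a continuous-depth network more concrete, here is a minimal toy sketch in Python. It is not the authors' architecture or code. It assumes a hidden state that evolves over depth `t` in `[0, 1]` under hypothetical dynamics `dh/dt = tanh(W(t) h)`, with the weights `W` perturbed by noise only while `t < t_switch` and held fixed afterwards. The function name `forward`, the parameters `t_switch` and `sigma`, and the initial weights are all illustrative assumptions.

```python
import numpy as np

def forward(x, t_switch=0.5, n_steps=100, sigma=0.1, seed=0):
    """Toy continuous-depth forward pass with partially stochastic weights.

    Integrates dh/dt = tanh(W(t) h) over t in [0, 1] with Euler steps.
    For t < t_switch the weights take Euler-Maruyama steps of a pure
    diffusion dW = sigma dB; for t >= t_switch they stay frozen, so the
    remaining depth is deterministic given the weights reached so far.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    dt = 1.0 / n_steps
    W = 0.5 * np.eye(d)          # hypothetical initial weights
    h = x.astype(float).copy()   # hidden state starts at the input
    for k in range(n_steps):
        t = k * dt
        if t < t_switch:
            # Stochastic segment: add Brownian increments to the weights.
            W = W + sigma * np.sqrt(dt) * rng.standard_normal((d, d))
        # Both segments: Euler step of the hidden-state dynamics.
        h = h + dt * np.tanh(W @ h)
    return h

# Predictive spread from repeated weight samples (one seed per sample).
x = np.array([1.0, -0.5, 0.25])
samples = np.stack([forward(x, seed=s) for s in range(20)])
print("mean:", samples.mean(axis=0))
print("std: ", samples.std(axis=0))
```

Setting `t_switch=0.0` makes the sketch fully deterministic, while `t_switch=1.0` makes the weights stochastic over the whole depth. Intermediate values spend random draws on only part of the depth, which is the rough intuition behind the efficiency claim.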

Technical Details

Technological frameworks used: Partially Stochastic Infinitely Deep Bayesian Neural Networks

Models used: The proposed network family, which the authors show to be Universal Conditional Distribution Approximators

Data used: Varies across multiple tasks for empirical evaluation

Potential Impact

This innovation could disrupt markets that depend on deep learning for tasks requiring robustness and efficiency, such as autonomous vehicles, healthcare analytics, and finance.

Want to implement this idea in a business?

We have generated a startup concept here: InfinitiQ.
