Authors: Giacomo Pedretti, John Moon, Pedro Bruel, Sergey Serebryakov, Ron M. Roth, Luca Buonanno, Archit Gajjar, Tobias Ziegler, Cong Xu, Martin Foltin, Paolo Faraboschi, Jim Ignowski, Catherine E. Graves

Published on: April 03, 2023

Impact Score: 8.15

arXiv code: arXiv:2304.01285

Summary

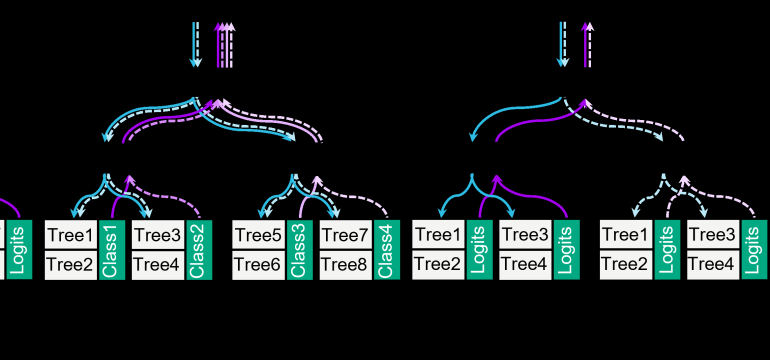

- What is new: An analog-digital architecture that combines an increased-precision analog content-addressable memory (CAM) with a programmable network-on-chip for inference of tree-based ML models.

- Why this is important: Deep learning models are less accurate than simpler approaches, such as tree-based models, on tabular data, yet hardware acceleration has mostly focused on neural networks and ignored other ML models.

- What the research proposes: An efficient architecture that runs state-of-the-art tree-based ML models, such as XGBoost and CatBoost, with reduced inference latency.

- Results: 119x lower latency and 9740x higher throughput than state-of-the-art GPUs, with a peak power consumption of 19 W.

Technical Details

Technological frameworks used: Analog-digital architecture (analog CAM plus a programmable network-on-chip)

Models used: XGBoost, CatBoost

Data used: Structured (tabular) data
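
To make the idea of running a tree model on a CAM more concrete, here is a minimal software sketch in Python. It assumes the mapping commonly described for analog CAMs: each root-to-leaf path of a tree becomes one row of per-feature ranges, and an input matches exactly one row per tree. The nested-dict tree format and the names `CamRow`, `tree_to_rows`, `cam_search`, and `ensemble_predict` are hypothetical and chosen for illustration; this is not the paper's implementation, and the loop over rows only emulates sequentially what the hardware would compare in parallel.

```python
from dataclasses import dataclass
from math import inf


@dataclass
class CamRow:
    # One root-to-leaf path: a [low, high) range per feature, plus the leaf value.
    low: list
    high: list
    leaf: float


def tree_to_rows(node, n_features, low=None, high=None):
    """Flatten a nested-dict tree (hypothetical format) into CAM rows."""
    low = [-inf] * n_features if low is None else low
    high = [inf] * n_features if high is None else high
    if "leaf" in node:
        return [CamRow(list(low), list(high), node["leaf"])]
    f, t = node["feature"], node["threshold"]
    # Left branch means x[f] < t, so tighten the upper bound.
    left_high = list(high)
    left_high[f] = min(high[f], t)
    # Right branch means x[f] >= t, so tighten the lower bound.
    right_low = list(low)
    right_low[f] = max(low[f], t)
    return (tree_to_rows(node["left"], n_features, low, left_high)
            + tree_to_rows(node["right"], n_features, right_low, high))


def cam_search(rows, x):
    """Return the leaf of the row whose ranges all contain x."""
    for row in rows:  # sequential stand-in for a parallel CAM lookup
        if all(lo <= xi < hi for lo, xi, hi in zip(row.low, x, row.high)):
            return row.leaf
    raise ValueError("no matching row")


def ensemble_predict(trees_rows, x):
    """Sum the matched leaf values across trees, as in gradient boosting."""
    return sum(cam_search(rows, x) for rows in trees_rows)


# Two toy trees over two features (made-up thresholds and leaf values).
tree_a = {"feature": 0, "threshold": 0.5,
          "left": {"leaf": -1.0},
          "right": {"feature": 1, "threshold": 2.0,
                    "left": {"leaf": 0.25}, "right": {"leaf": 1.5}}}
tree_b = {"feature": 1, "threshold": 1.0,
          "left": {"leaf": 0.5}, "right": {"leaf": -0.5}}

rows_a = tree_to_rows(tree_a, 2)
rows_b = tree_to_rows(tree_b, 2)
print(ensemble_predict([rows_a, rows_b], [0.7, 1.5]))
```

Because every path becomes an independent row, a lookup does not depend on tree depth; that property is what makes a parallel range-matching memory attractive for this kind of model.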

Potential Impact

Markets that rely on real-time data science applications, companies working in scientific discovery and simulation, and AI hardware manufacturers.

Want to implement this idea in a business?

We have generated a startup concept here: SwiftML.
