Authors: Yong Liu, Haoran Zhang, Chenyu Li, Xiangdong Huang, Jianmin Wang, Mingsheng Long

Published on: February 04, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.02368

Summary

- What is new: An early step toward large time series models (LTSMs), using a GPT-style architecture pre-trained on large-scale datasets.

- Why this is important: Deep models hit performance bottlenecks in small-sample time series scenarios, and the field lacks large models with the generalization and scalability to handle them.

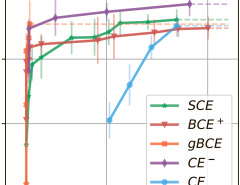

- What the research proposes: Time Series Transformer (Timer), an LTSM with a GPT-style architecture that is pre-trained on datasets of up to 1 billion time points. It handles forecasting, imputation, and anomaly detection by converting them into a unified generative task.

- Results: After fine-tuning, Timer showed promising capability across these diverse applications compared with traditional models.
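
To make the "unified generative task" idea concrete, here is a minimal sketch of how forecasting, imputation, and anomaly detection could all be served by one autoregressive `generate` routine. This summary does not describe how Timer actually does this, so everything below is an assumption for illustration: the function names are hypothetical, and `generate` is a simple moving-average stand-in, not the Timer model.

```python
# Hypothetical stand-in for a pre-trained generative model. A real LTSM would
# be a trained network; this moving-average rule only illustrates the interface.
def generate(context, steps, window=3):
    """Autoregressively extend `context` by `steps` values."""
    out = list(context)
    for _ in range(steps):
        recent = out[-window:]
        out.append(sum(recent) / len(recent))
    return out[len(context):]


def forecast(series, horizon):
    """Forecasting: generate the next `horizon` values after the series."""
    return generate(series, horizon)


def impute(series):
    """Imputation: fill each missing value (None) by generating it from the
    already-filled prefix. Assumes the first value is observed."""
    filled = []
    for value in series:
        if value is None:
            value = generate(filled, 1)[0]
        filled.append(value)
    return filled


def anomaly_scores(series, warmup=3):
    """Anomaly detection: score each point by how far it lies from the value
    generated from its prefix. The first `warmup` points get a score of 0.0."""
    scores = [0.0] * min(warmup, len(series))
    for t in range(warmup, len(series)):
        predicted = generate(series[:t], 1)[0]
        scores.append(abs(series[t] - predicted))
    return scores
```

All three tasks reduce to calls to `generate`, so swapping the stand-in for a stronger generative model would, under these assumptions, improve every task at once. For instance, `anomaly_scores([1.0, 1.0, 1.0, 1.0, 9.0, 1.0])` assigns its largest score to the spike at index 4.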

Technical Details

Technological frameworks used: GPT-style architecture for LTSMs

Models used: Time Series Transformer (Timer)

Data used: Curated large-scale datasets with up to 1 billion time points
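
As a rough illustration of what a GPT-style setup means for time series, the sketch below splits a series into fixed-length segments that act as tokens, builds next-token training pairs, and constructs the causal mask a decoder-only Transformer uses. The non-overlapping segmentation, the token length, and all function names are assumptions made for this example; the summary above does not specify Timer's tokenization.

```python
def to_tokens(series, token_len):
    """Split a series into consecutive non-overlapping segments (tokens).
    Any ragged tail shorter than `token_len` is dropped."""
    n = len(series) // token_len
    return [series[i * token_len:(i + 1) * token_len] for i in range(n)]


def next_token_pairs(tokens):
    """Pair each token with its successor: the model sees tokens[i] (and
    everything before it) and is trained to produce tokens[i + 1]."""
    return list(zip(tokens[:-1], tokens[1:]))


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j.
    A causal mask only allows attention to the current and earlier positions."""
    return [[j <= i for j in range(n)] for i in range(n)]


tokens = to_tokens([0, 1, 2, 3, 4, 5, 6], token_len=2)
pairs = next_token_pairs(tokens)
mask = causal_mask(len(tokens))
```

Here the seven-point series yields three tokens, `[0, 1]`, `[2, 3]`, and `[4, 5]`, and the trailing `6` is dropped because it cannot fill a full segment.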

Potential Impact

Companies in finance, healthcare, and retail that rely on time series forecasting and analysis could see significant benefits or disruption from the adoption of LTSM technologies like Timer.

