Authors: Justin Kang, Ramtin Pedarsani, Kannan Ramchandran

Published on: January 30, 2023

Impact Score: 8.27

Arxiv code: arXiv:2301.13336

Summary

- What is new: Introduces the concept of fair compensation for user data that considers privacy constraints, using principles akin to the Shapley value.

- Why this is important: Platforms need a principled way to compensate users fairly for their data when those users have different privacy preferences.

- What the research proposes: An axiomatic approach to defining fairness in user data compensation with privacy level considerations, paired with a heterogeneous federated learning model.

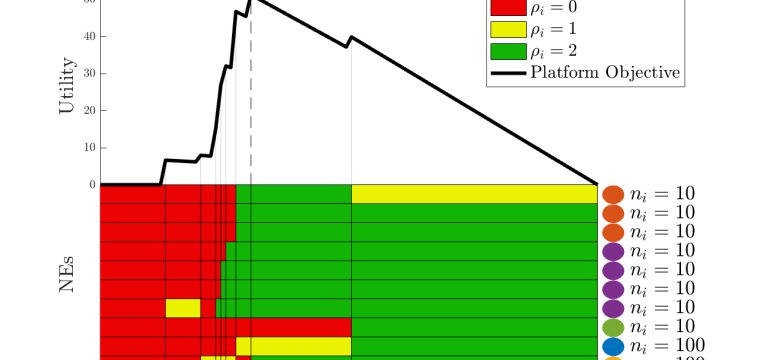

- Results: The analysis finds that platforms adjust incentives according to users' privacy sensitivity, with a more nuanced strategy for users of intermediate privacy sensitivity.

Technical Details

Technological frameworks used: Axiomatic fairness, Federated learning

Models used: Shapley value based models

Data used: User data with privacy levels
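
For readers unfamiliar with the Shapley value mentioned above, the following is a minimal sketch of the classical definition only: each participant is paid their marginal contribution, averaged over every order in which participants could join. It is not the paper's privacy-aware formulation. The user names, the weights, and the `toy_value` utility function are hypothetical and chosen purely for illustration.

```python
from fractions import Fraction
from itertools import permutations


def shapley_values(players, value):
    """Classical Shapley value: average marginal contribution over all join orders."""
    totals = {p: Fraction(0) for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal gain from adding p to the users who joined before it.
            totals[p] += Fraction(value(with_p)) - Fraction(value(coalition))
            coalition = with_p
    return {p: total / len(orderings) for p, total in totals.items()}


# Hypothetical per-user data weights (an assumption, not taken from the paper).
WEIGHTS = {"alice": 1, "bob": 2, "carol": 3}


def toy_value(coalition):
    """Hypothetical utility: pooled data is worth the square of its total weight."""
    return sum(WEIGHTS[p] for p in coalition) ** 2


payments = shapley_values(list(WEIGHTS), toy_value)
for user, amount in payments.items():
    print(user, amount)
```

In this toy game the payments sum to the value of the full coalition, which is the efficiency property of the classical Shapley value. Enumerating all orderings grows factorially with the number of users, so this brute-force form is only practical for very small examples.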

Potential Impact

The work is most relevant to data aggregation platforms, market research firms, and companies that rely on user data for AI training.

Want to implement this idea in a business?

We have generated a startup concept here: FairShare.
