StreamlineAI

Elevator Pitch: Imagine a world where language models understand and process entire books in milliseconds, rather than hours. StreamlineAI makes this possible with cutting-edge technology that efficiently scales LLMs for real-time applications. Our platform unlocks new potential for businesses by dramatically reducing computational costs and enabling instant insights from vast amounts of data. Join the revolution and stream your way to the forefront of AI innovation.

Concept

A cloud-based platform for deploying large language models (LLMs) optimized for streaming applications with very long contexts.

Objective

To enable businesses and developers to use LLMs in streaming applications that process extensive content, without prohibitive computational costs.

Solution

StreamlineAI uses a novel algorithm with sublinear space complexity, so its memory footprint grows more slowly than the context length. This significantly reduces the memory and time required to process very long contexts in real-time applications.
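The pitch does not describe StreamlineAI's algorithm, so the following is only an illustration of one published way to bound memory in streaming LLM inference, not the platform's method. It sketches the "attention sink plus sliding window" key/value cache described in the StreamingLLM work: the first few tokens of the stream are kept permanently as sinks, and only the most recent tokens are kept after that. Memory is constant in stream length, which is trivially sublinear. The class `SinkWindowKVCache` and its parameters `n_sink` and `window` are hypothetical names chosen for this sketch, and the single-head attention is deliberately simplified.

```python
import math
from collections import deque


class SinkWindowKVCache:
    """Hypothetical illustration (not StreamlineAI's algorithm).

    Keeps the first `n_sink` (key, value) pairs forever plus the most recent
    `window` pairs, so memory is O(n_sink + window) however long the stream is.
    """

    def __init__(self, n_sink, window):
        self.n_sink = n_sink
        self.sinks = []                     # first n_sink tokens, never evicted
        self.recent = deque(maxlen=window)  # deque drops the oldest entry when full
        self.seen = 0                       # total tokens observed in the stream

    def append(self, key, value):
        if len(self.sinks) < self.n_sink:
            self.sinks.append((key, value))
        else:
            self.recent.append((key, value))
        self.seen += 1

    def entries(self):
        return self.sinks + list(self.recent)

    def attend(self, query):
        """Single-head scaled dot-product attention over the cached tokens only."""
        entries = self.entries()
        if not entries:
            raise ValueError("cache is empty")
        scale = math.sqrt(len(query))
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key, _ in entries]
        top = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        dim_v = len(entries[0][1])
        return [
            sum(w * value[i] for w, (_, value) in zip(weights, entries)) / total
            for i in range(dim_v)
        ]


if __name__ == "__main__":
    cache = SinkWindowKVCache(n_sink=4, window=16)
    for t in range(10_000):
        cache.append([float(t % 3), 1.0], [float(t), 0.0])
    print(cache.seen, len(cache.entries()))  # 10000 tokens seen, 20 cached
    print(cache.attend([1.0, 0.0]))
```

The design choice is a trade-off: memory and per-token attention cost stay fixed, but tokens evicted from the window can no longer be attended to directly, so any system that promises full-book understanding would need more than this simple eviction policy.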

Revenue Model

Subscription-based SaaS with tiered pricing depending on usage volume and required computational power.

Target Market

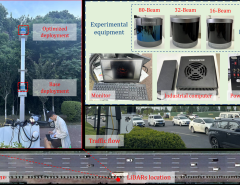

Technology companies in media, analytics, and surveillance, as well as any firm that requires real-time data processing with LLMs.

Expansion Plan

Gradually incorporate additional AI and machine learning models, and extend services to IoT, automatic summarization, and real-time translation.

Potential Challenges

Continuously improving the algorithm to keep pace with ever-increasing context sizes and strict real-time processing requirements.

Customer Problem

Existing solutions struggle with the computational demands of processing extremely long contexts in real-time streaming applications, leading to high costs and resource consumption.

Regulatory and Ethical Issues

Complying with data privacy laws, especially the GDPR when processing personal data in the EU, and ensuring the ethical use of AI technology.

Disruptiveness

StreamlineAI has the potential to disrupt the market by removing current computational barriers and making real-time LLM applications feasible and cost-effective.

Check out our related research summary: here.
