Authors: Wuxuan Jiang, Xiangjun Song, Shenbai Hong, Haijun Zhang, Wenxin Liu, Bo Zhao, Wei Xu, Yi Li

Published on: February 04, 2024

Impact Score: 8.22

arXiv ID: arXiv:2402.0232

Summary

- What is new: Spin introduces optimized protocols for non-linear functions and new optimizations for the attention mechanism in Transformer models. It uses GPUs, CPUs, and RDMA (remote direct memory access) networking for acceleration.

- Why this is important: Existing secure multi-party computation (MPC) frameworks struggle to deliver both accuracy and efficiency, especially for machine learning workloads.

- What the research proposes: Spin, a GPU-accelerated MPC framework that supports multiple computation parties and combines optimized protocols with system-level optimizations to improve performance.

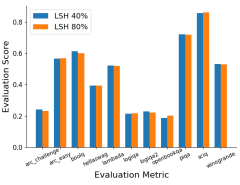

- Results: Spin is up to 2x faster than existing solutions for deep neural network training. For Transformer model inference, it improves efficiency and accuracy and reduces communication.
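The summary does not describe Spin's protocols in detail. As background only, the following is a minimal sketch of additive secret sharing, the generic building block many MPC frameworks use. Each value is split into random shares held by several computation parties. Additions are local, and multiplications consume a precomputed Beaver triple plus one round of opening masked values. This is a textbook technique, not Spin's implementation. The ring size `MOD`, the trusted-dealer triple generation, and all function names are hypothetical choices for illustration. The sketch also suggests why non-linear functions are costly in MPC: they have to be assembled from many such multiplications or comparisons.

```python
import secrets

# Assumption for this sketch: shares live in the ring Z_{2^64}.
MOD = 2 ** 64


def share(x, n):
    """Split integer x into n additive shares that sum to x mod MOD."""
    shares = [secrets.randbelow(MOD) for _ in range(n - 1)]
    shares.append((x - sum(shares)) % MOD)
    return shares


def reconstruct(shares):
    """Recombine additive shares by summing them mod MOD."""
    return sum(shares) % MOD


def add(xs, ys):
    """Addition is local: each party adds its own two shares, no communication."""
    return [(a + b) % MOD for a, b in zip(xs, ys)]


def beaver_triple(n):
    """Hypothetical trusted dealer: random a, b and c = a*b, all secret-shared."""
    a, b = secrets.randbelow(MOD), secrets.randbelow(MOD)
    return share(a, n), share(b, n), share(a * b % MOD, n)


def mul(xs, ys):
    """Multiply two shared values using one Beaver triple.

    The parties open d = x - a and e = y - b (one communication round),
    then locally compute shares of z = c + d*b + e*a + d*e, which equals x*y.
    """
    n = len(xs)
    a_sh, b_sh, c_sh = beaver_triple(n)
    d = reconstruct([(x - a) % MOD for x, a in zip(xs, a_sh)])
    e = reconstruct([(y - b) % MOD for y, b in zip(ys, b_sh)])
    zs = [(c + d * b + e * a) % MOD for a, b, c in zip(a_sh, b_sh, c_sh)]
    zs[0] = (zs[0] + d * e) % MOD  # the public term d*e is added by one party only
    return zs


parties = 3
x_sh, y_sh = share(6, parties), share(7, parties)
print(reconstruct(add(x_sh, y_sh)))  # 13
print(reconstruct(mul(x_sh, y_sh)))  # 42
```

Only `d` and `e` are ever opened, and they are masked by the random `a` and `b`, so no single party learns `x` or `y`. In a real deployment these operations run over large tensors exchanged between networked parties, which is where GPU computation and fast interconnects such as RDMA can matter.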

Technical Details

Technological frameworks used: Spin

Models used: CNNs, Transformer models

Data used: Not specified

Potential Impact

Companies in the machine learning, cybersecurity, and cloud services markets could benefit from this work.
