Authors: Zihan Li, Yuan Zheng, Dandan Shan, Shuzhou Yang, Qingde Li, Beizhan Wang, Yuanting Zhang, Qingqi Hong, Dinggang Shen

Published on: February 03, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.02029

Summary

- What is new: Introduces a CNN-Transformer hybrid model, ScribFormer, with a triple-branch structure for better scribble-supervised medical image segmentation.

- Why this is important: Existing CNN frameworks struggle to learn global shape information from scribble annotations due to their local receptive field.

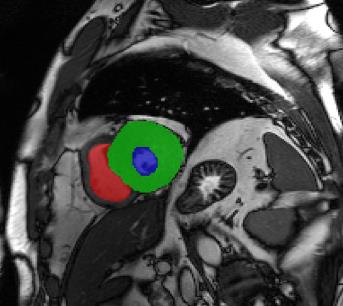

- What the research proposes: ScribFormer leverages a CNN branch, a Transformer branch, and an attention-guided class activation map (ACAM) branch to fuse local and global features for improved segmentation.

- Results: On two public datasets and one private dataset, ScribFormer outperforms state-of-the-art scribble-supervised segmentation methods and even achieves better results than fully-supervised methods.
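To make "scribble-supervised" concrete: only the pixels a annotator has drawn over carry a label, so the training loss can only be evaluated there. A common way to handle this is a partial cross-entropy that skips unlabeled pixels. The sketch below illustrates that general idea in plain Python; it is an assumption for illustration, not ScribFormer's actual loss, and the names `IGNORE` and `partial_cross_entropy` are hypothetical.

```python
import math

IGNORE = -1  # hypothetical marker for pixels that have no scribble label


def softmax(logits):
    """Numerically stable softmax over one pixel's class scores."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def partial_cross_entropy(logits, scribble):
    """Mean cross-entropy over scribble-labeled pixels only.

    logits:   H x W x C nested lists of raw class scores
    scribble: H x W nested lists of class indices, IGNORE where unlabeled
    """
    total, count = 0.0, 0
    for logit_row, label_row in zip(logits, scribble):
        for pixel_logits, label in zip(logit_row, label_row):
            if label == IGNORE:
                continue  # unlabeled pixels contribute nothing to the loss
            total += -math.log(softmax(pixel_logits)[label])
            count += 1
    return total / count if count else 0.0


# Toy 2 x 2 image with two classes; only the left column is scribbled.
logits = [[[2.0, 0.0], [0.0, 0.0]],
          [[0.0, 3.0], [1.0, 1.0]]]
scribble = [[0, IGNORE],
            [1, IGNORE]]
loss = partial_cross_entropy(logits, scribble)
```

Because the right column is never scored, a purely local model gets no direct signal about object shape there, which is the gap the summary says the global branch is meant to address.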

Technical Details

Technological frameworks used: CNN-Transformer hybrid

Models used: ScribFormer with CNN branch, Transformer branch, ACAM branch

Data used: Two public datasets, one private dataset
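For readers unfamiliar with class activation maps, the idea behind the third branch builds on a simple computation: a per-class heatmap formed as a weighted sum of feature maps. The sketch below shows a plain CAM only. How ScribFormer adds attention guidance is not described in this summary, so that part is deliberately left out, and the function names are hypothetical.

```python
def class_activation_map(features, class_weights):
    """Plain CAM for one class: sum over k of weight[k] * feature_map[k].

    features:      K feature maps, each an H x W nested list
    class_weights: K classifier weights for the class of interest
    """
    h, w = len(features[0]), len(features[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for fmap, weight in zip(features, class_weights):
        for y in range(h):
            for x in range(w):
                cam[y][x] += weight * fmap[y][x]
    return cam


def normalize(cam):
    """Clamp negative activations to zero and rescale to the range [0, 1]."""
    clamped = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(v for row in clamped for v in row)
    if peak == 0.0:
        return clamped  # nothing activated; return the all-zero map
    return [[v / peak for v in row] for row in clamped]


# Toy example: two 2 x 2 feature maps and their weights for one class.
features = [[[1.0, 0.0], [0.0, 1.0]],
            [[0.0, 2.0], [0.0, 0.0]]]
weights = [1.0, 0.5]
heatmap = normalize(class_activation_map(features, weights))
```

High values in `heatmap` mark locations that most support the class, which is why such maps are often used as coarse localization cues when dense labels are unavailable.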

Potential Impact

Healthcare imaging, medical device companies, AI-driven diagnostic software providers

