Authors: Yun-Wei Chu, Dong-Jun Han, Seyyedali Hosseinalipour, Christopher G. Brinton

Published on: February 03, 2024

Impact Score: 8.15

arXiv ID: arXiv:2402.02225

Summary

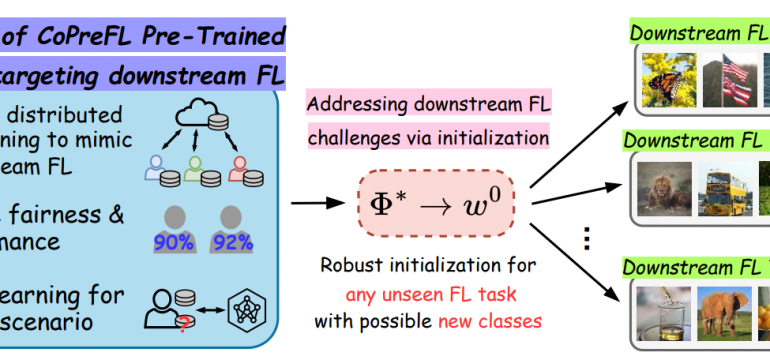

- What is new: Introduction of CoPreFL, a collaborative pre-training approach designed for better initializations in federated learning tasks.

- Why this is important: Most federated learning methods start from a randomly initialized model, even though the starting point can strongly affect the accuracy and fairness of the final federated model.

- What the research proposes: CoPreFL, a meta-learning-based pre-training algorithm that prepares a model to adapt to arbitrary downstream federated learning tasks. During pre-training it mimics distributed scenarios and optimizes for both average performance and fairness across clients.

- Results: CoPreFL provides robust initializations that improve both average performance and fairness on unseen downstream federated learning tasks.
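The summary describes CoPreFL only at a high level: meta-learning, simulated distributed scenarios, and an objective that weighs both average performance and fairness. The pure-Python sketch below illustrates that combination under stated assumptions; it is not the authors' algorithm. It runs a MAML-style loop in which each simulated client takes one local (inner) gradient step, and the shared weight is then updated to minimize the mean of the clients' adapted losses plus `gamma` times their variance. The toy task (scalar linear regression with a different slope per client), the variance-penalized objective, and every name in it (`make_client`, `meta_step`, `pretrain`, `alpha`, `beta`, `gamma`) are hypothetical choices for illustration.

```python
import random
from statistics import mean, pvariance

# Hypothetical toy setup (not from the paper): simulated client i holds samples
# of y = a_i * x + noise, and the shared model is a single scalar weight w.

def make_client(slope, n, rng):
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, slope * x + rng.gauss(0.0, 0.05)) for x in xs]

def loss(w, data):
    # Mean squared error of the prediction w * x.
    return mean((w * x - y) ** 2 for x, y in data)

def grad(w, data):
    # First derivative of loss() with respect to w.
    return mean(2.0 * (w * x - y) * x for x, y in data)

def curvature(data):
    # Second derivative of loss() with respect to w (constant for a linear model).
    return mean(2.0 * x * x for x, _ in data)

def meta_step(w, clients, alpha, beta, gamma):
    """One MAML-style meta-update over a batch of simulated clients.

    Assumed meta-objective: mean_i L_i(w_i') + gamma * var_i L_i(w_i'),
    with adapted weight w_i' = w - alpha * (gradient of client i's support loss at w).
    """
    losses, dlosses = [], []
    for support, query in clients:
        w_adapted = w - alpha * grad(w, support)  # inner (local) step
        # Chain rule through the inner step: dw_i'/dw = 1 - alpha * L_support''(w).
        dw_adapted = 1.0 - alpha * curvature(support)
        losses.append(loss(w_adapted, query))
        dlosses.append(grad(w_adapted, query) * dw_adapted)
    m = mean(losses)
    g_mean = mean(dlosses)
    # d/dw of the population variance: (2/n) * sum_i (L_i - m) * dL_i/dw.
    g_var = 2.0 * mean((l - m) * d for l, d in zip(losses, dlosses))
    return w - beta * (g_mean + gamma * g_var), m, pvariance(losses)

def pretrain(slopes, rounds=200, alpha=0.1, beta=0.2, gamma=0.5, seed=0):
    rng = random.Random(seed)
    w = 0.0  # fixed starting weight before pre-training
    for _ in range(rounds):
        # Fresh support/query splits every round mimic a new federated task.
        clients = [(make_client(s, 20, rng), make_client(s, 20, rng)) for s in slopes]
        w, _, _ = meta_step(w, clients, alpha, beta, gamma)
    return w

w_init = pretrain([0.5, 1.0, 1.5])
print(f"pre-trained initialization: {w_init:.3f}")
```

With client slopes 0.5, 1.0 and 1.5, the learned starting weight should settle close to 1.0, the point that balances all three clients. Because the toy model is linear, the second-order MAML term reduces to a constant curvature, so the sketch differentiates through the inner step exactly instead of using a first-order approximation.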

Technical Details

Technological frameworks used: CoPreFL, a collaborative pre-training approach based on meta-learning

Models used: Federated learning models

Data used: Simulations of distributed (federated) scenarios
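Judging an initialization by "average performance and fairness" means running a downstream federated task from it and summarizing the per-client results. A common convention, assumed here rather than taken from the summary, is to report the mean of a per-client metric together with its variance across clients, where lower variance means a fairer model. The sketch below runs plain FedAvg for a few rounds from two hypothetical starting weights on a toy, unseen regression task and reports both numbers. The task, the starting values `1.0` and `-2.0` (stand-ins for a good and a poor initialization), and the helper names (`make_client`, `fedavg`, `report`) are illustrative assumptions, not the paper's experimental setup.

```python
import random
from statistics import mean, pvariance

# Hypothetical toy downstream task (not from the paper): client i holds samples
# of y = a_i * x + noise, and the shared model is a single scalar weight w.

def make_client(slope, n, rng):
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, slope * x + rng.gauss(0.0, 0.05)) for x in xs]

def loss(w, data):
    # Mean squared error of the prediction w * x.
    return mean((w * x - y) ** 2 for x, y in data)

def grad(w, data):
    # Derivative of loss() with respect to w.
    return mean(2.0 * (w * x - y) * x for x, y in data)

def fedavg(w0, clients, rounds, local_steps=1, lr=0.1):
    """Plain FedAvg starting from the initialization w0."""
    w = w0
    for _ in range(rounds):
        local = []
        for data in clients:
            wi = w
            for _ in range(local_steps):
                wi -= lr * grad(wi, data)  # local gradient step on this client
            local.append(wi)
        # Unweighted average: valid here because all clients hold equally many samples.
        w = mean(local)
    return w

def report(w, clients):
    """Average loss across clients and its variance (lower variance = fairer)."""
    per_client = [loss(w, data) for data in clients]
    return mean(per_client), pvariance(per_client)

rng = random.Random(1)
downstream = [make_client(s, 50, rng) for s in (0.6, 1.0, 1.4)]  # unseen toy task
good = report(fedavg(1.0, downstream, rounds=3), downstream)   # hypothetical good init
poor = report(fedavg(-2.0, downstream, rounds=3), downstream)  # hypothetical poor init
print(f"good init: avg loss {good[0]:.4f}, variance {good[1]:.4f}")
print(f"poor init: avg loss {poor[0]:.4f}, variance {poor[1]:.4f}")
```

With only three communication rounds, the run that starts near the clients' slopes should report a much lower average loss and variance than the run that starts far away, which is the effect a good pre-trained initialization is meant to have. The plain average in `fedavg` coincides with FedAvg's sample-size weighting only because every client holds the same number of samples.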

Potential Impact

Sectors relying on federated learning models, such as healthcare, finance, and IoT, could see improvements in model performance and fairness.

Want to implement this idea in a business?

We have generated a startup concept here: FairStartAI.
