Authors: Ziquan Liu, Zhuo Zhi, Ilija Bogunovic, Carsten Gerner-Beuerle, Miguel Rodrigues

Published on: February 04, 2024

Impact Score: 8.35

arXiv ID: 2402.02629

Summary

- What is new: A new method to certify machine learning model safety against adversarial attacks with statistical guarantees.

- Why this is important: State-of-the-art machine learning models are vulnerable to adversarial perturbations.

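- What the research proposes: An $(\alpha,\zeta)$-safety certification, combined with Bayesian optimization algorithms, to statistically certify and assess the robustness of machine learning models against adversarial attacks. A model is declared safe when its adversarial (population) risk is at most $\alpha$. A hypothesis test guarantees that the probability of declaring a model safe when it is actually unsafe is at most $\zeta$.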

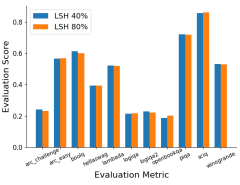

- Results: ViT models are found to be generally more robust against adversarial attacks than ResNet models, with larger ViT models being more robust than smaller ones.
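The summary does not spell out the test statistic behind the $(\alpha,\zeta)$ certification. The sketch below makes the idea concrete under a few assumptions. Losses are assumed to lie in [0, 1] on an i.i.d. calibration set. The null hypothesis is taken to be "the model is unsafe" (adversarial risk above $\alpha$), and the p-value is bounded with Hoeffding's inequality. Rejecting the null at level $\zeta$ then declares the model safe. The Hoeffding bound is our own substitution, not necessarily the bound the authors use. `hoeffding_p_value` and `certify_alpha_zeta` are hypothetical names.

```python
import math
from typing import Sequence


def hoeffding_p_value(losses: Sequence[float], alpha: float) -> float:
    """Conservative p-value for H0: adversarial risk > alpha (model unsafe).

    Assumes i.i.d. calibration losses in [0, 1], e.g. 0-1 error under attack.
    Hoeffding: P(empirical risk <= r_hat | H0) <= exp(-2 n (alpha - r_hat)^2).
    """
    n = len(losses)
    r_hat = sum(losses) / n
    if r_hat >= alpha:
        return 1.0  # the empirical risk gives no evidence against H0
    return math.exp(-2.0 * n * (alpha - r_hat) ** 2)


def certify_alpha_zeta(losses: Sequence[float], alpha: float, zeta: float) -> bool:
    """Declare the model safe only if H0 is rejected at significance level zeta."""
    return hoeffding_p_value(losses, alpha) <= zeta


# Hypothetical calibration result: 20 errors under attack out of 1000 samples.
losses = [1.0] * 20 + [0.0] * 980
print(certify_alpha_zeta(losses, alpha=0.10, zeta=0.05))  # True  (p ~ 2.8e-6)
print(certify_alpha_zeta(losses, alpha=0.03, zeta=0.05))  # False (p ~ 0.82)
```

With the same data, the tighter $\alpha = 0.03$ cannot be certified. An empirical risk of 0.02 is too close to 0.03 for 1000 samples to rule out an unsafe model.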

Technical Details

Technological frameworks used: Bayesian optimization, hypothesis testing

Models used: Vision Transformer (ViT), ResNet

Data used: A calibration set, on which the hypothesis test is performed
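The summary lists Bayesian optimization without further detail. One plausible role, assumed here, is to search an attack's hyperparameter for the setting that maximizes the empirical adversarial risk, so that certification is run against the strongest attack found. The sketch below is a minimal GP-UCB loop in pure Python over a one-dimensional grid. It uses a zero-mean Gaussian process, an RBF kernel, and an upper-confidence-bound acquisition rule. The kernel length scale, `beta`, the grid, and the objective `toy_attack_risk` are all hypothetical, and the toy curve is not data from the paper.

```python
import math
from typing import Callable, List, Sequence, Tuple

Matrix = List[List[float]]


def rbf(a: float, b: float, length: float = 0.2) -> float:
    """Squared-exponential kernel with unit prior variance."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(k: Matrix) -> Matrix:
    """Lower-triangular L with L L^T = k (k must be positive definite)."""
    n = len(k)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = k[i][j] - sum(low[i][m] * low[j][m] for m in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def forward_solve(low: Matrix, b: Sequence[float]) -> List[float]:
    """Solve L x = b for lower-triangular L."""
    x: List[float] = []
    for i, bi in enumerate(b):
        x.append((bi - sum(low[i][m] * x[m] for m in range(i))) / low[i][i])
    return x


def gp_ucb_maximize(
    f: Callable[[float], float],
    grid: Sequence[float],
    n_iter: int = 8,
    beta: float = 2.0,
    noise: float = 1e-4,
) -> Tuple[float, float]:
    """Maximize f over a 1-D grid with a zero-mean GP and the UCB rule."""
    xs = [grid[0], grid[-1]]  # two initial evaluations at the grid endpoints
    ys = [f(x) for x in xs]
    for _ in range(n_iter):
        # Kernel matrix K (+ noise on the diagonal) and its Cholesky factor.
        k = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
             for i, a in enumerate(xs)]
        low = cholesky(k)
        w = forward_solve(low, ys)  # w = L^{-1} y

        def ucb(x: float) -> float:
            v = forward_solve(low, [rbf(a, x) for a in xs])  # v = L^{-1} k_x
            mean = sum(vi * wi for vi, wi in zip(v, w))      # k_x^T K^{-1} y
            var = max(1.0 - sum(vi * vi for vi in v), 1e-12)  # k(x,x) - k_x^T K^{-1} k_x
            return mean + beta * math.sqrt(var)

        candidates = [x for x in grid if x not in xs]
        if not candidates:
            break
        x_next = max(candidates, key=ucb)
        xs.append(x_next)
        ys.append(f(x_next))
    i_best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[i_best], ys[i_best]


def toy_attack_risk(lam: float) -> float:
    """Hypothetical stand-in for the empirical adversarial risk at hyperparameter lam."""
    return 0.3 * math.exp(-((lam - 0.6) / 0.15) ** 2)


grid = [i / 20 for i in range(21)]
lam_star, worst_risk = gp_ucb_maximize(toy_attack_risk, grid)
print(f"worst-case lam ~ {lam_star:.2f}, risk ~ {worst_risk:.3f}")
```

A larger `beta` spends more evaluations on uncertain hyperparameters, while a smaller one concentrates near the current worst case. On a real model, each evaluation would mean attacking the whole calibration set, which is why a sample-efficient optimizer is attractive here.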

Potential Impact

Cybersecurity, AI development companies, regulatory bodies

Want to implement this idea in a business?

We have generated a startup concept here: CertifyAI.
