Authors: Xi Li, Jiaqi Wang

Published on: February 02, 2024

Impact Score: 8.15

arXiv code: arXiv:2402.01857

Summary

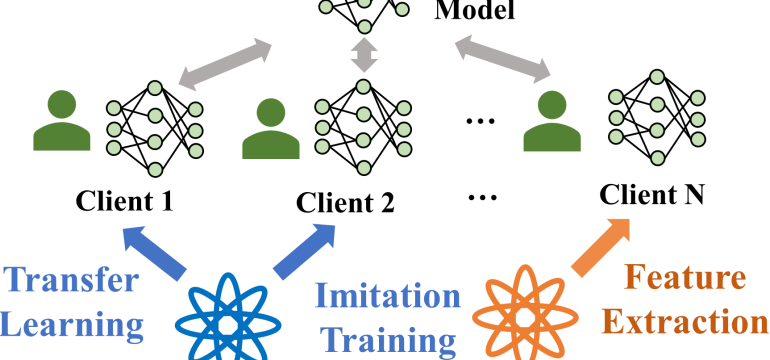

- What is new: Examines how integrating Foundation Models (FMs) into Federated Learning (FL) can ease data and computational constraints, and what new robustness, privacy, and fairness issues that integration raises.

- Why this is important: FL is held back by limited data and by variable computational resources, both of which hurt performance and scalability.

- What the research proposes: A systematic evaluation of how FM-FL integration affects robustness, privacy, and fairness, together with criteria and strategies for addressing the issues it raises.

- Results: Identifies trade-offs, threats, and open issues in FM-FL integration, and highlights research directions toward reliable, secure, and equitable FL systems.

Technical Details

Technological frameworks used: Foundation Models in Federated Learning

Models used: Not explicitly mentioned

Data used: Not explicitly mentioned

Potential Impact

The work could affect tech companies engaged in decentralized computing, firms focused on data privacy, and organizations involved in AI and machine learning development.
