Authors: Peijie Dong, Lujun Li, Xinglin Pan, Zimian Wei, Xiang Liu, Qiang Wang, Xiaowen Chu

Published on: February 03, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.02105

Summary

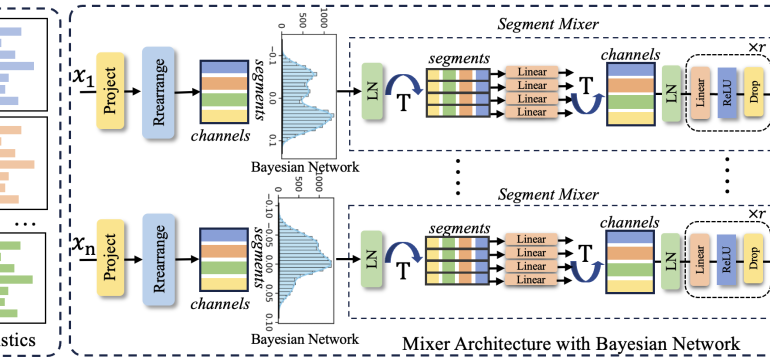

- What is new: Parametric Zero-Cost Proxies (ParZC), a framework that accounts for node-specific uncertainty in neural networks. It combines a Mixer Architecture with Bayesian Network (MABN) and a differentiable loss function, DiffKendall, to improve performance estimation.

- Why this is important: Existing zero-cost proxies for neural architecture search aggregate node-wise statistics indiscriminately, failing to account for varying contributions of different nodes to performance estimation.

- What the research proposes: ParZC uses a Mixer Architecture with Bayesian Network (MABN) to explore node-wise statistics and estimate node-specific uncertainty. It is trained with DiffKendall, a loss function that optimizes Kendall's Tau rank correlation in a differentiable way, so the proxy is tuned directly for ranking architectures.

- Results: ParZC outperformed existing zero-cost proxies on the NAS-Bench-101, NAS-Bench-201, and NDS benchmarks, and transferred to the Vision Transformer search space.

Technical Details

Technological frameworks used: Parametric Zero-Cost Proxies (ParZC), Mixer Architecture with Bayesian Network (MABN), DiffKendall loss function

Models used: Vision Transformer

Data used: NAS-Bench-101, NAS-Bench-201, NDS

Potential Impact

Cloud computing services, AI infrastructure providers, and companies investing in automated machine learning could benefit from, or be disrupted by, the faster and more reliable architecture performance estimation this paper describes.

