Authors: Xiaolong Jin, Zhuo Zhang, Xiangyu Zhang

Published on: January 25, 2024

Impact Score: 8.2

arXiv code: arXiv:2402.01706

Summary

- What is new: The researchers developed a cost-effective method for exposing latent alignment issues in LLMs by systematically constructing many contexts, or ‘worlds’, through a Domain Specific Language.

- Why this is important: LLMs that appear aligned can still be induced to produce malicious content through jailbreaking techniques, so latent alignment problems need to be exposed.

- What the research proposes: A novel approach that detects alignment issues by describing many ‘worlds’ in a Domain Specific Language (DSL) and using a compiler to construct them systematically.

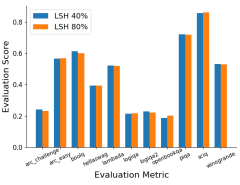

- Results: The method outperforms state-of-the-art jailbreaking techniques in both effectiveness and efficiency, and shows that LLMs are particularly vulnerable in nested worlds and programming-language worlds.

Technical Details

Technological frameworks used: Domain Specific Language (DSL) for world construction

Models used: Large Language Models (LLMs)

Data used: Various contexts/worlds described using the DSL
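
To make the idea of "worlds described using the DSL" more concrete, here is a minimal sketch in Python. It is not the authors' DSL or compiler: the `World` class, the `compile_world` renderer, the `enumerate_nested` helper, and the world kinds `"fiction"` and `"programming_language"` are all hypothetical names chosen for illustration. The sketch only shows how nested contexts could be represented as a tree and enumerated systematically.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import product


@dataclass
class World:
    """One context ('world') in a hypothetical world-description DSL."""
    name: str
    kind: str  # illustrative labels, e.g. "fiction", "programming_language"
    children: list[World] = field(default_factory=list)

    def depth(self) -> int:
        """Nesting depth: 1 for a leaf world, plus the deepest child."""
        return 1 + max((c.depth() for c in self.children), default=0)


def compile_world(world: World, indent: int = 0) -> str:
    """Render a world tree as an indented, human-readable description."""
    line = " " * indent + f"world {world.name} ({world.kind})"
    parts = [line] + [compile_world(c, indent + 2) for c in world.children]
    return "\n".join(parts)


def enumerate_nested(kinds: list[str], depth: int) -> list[World]:
    """Build every chain of `depth` nested worlds drawn from `kinds`."""
    worlds = []
    for combo in product(kinds, repeat=depth):
        node = None
        # Build from the innermost world outward.
        for level, kind in reversed(list(enumerate(combo))):
            node = World(f"w{level}", kind, [node] if node is not None else [])
        worlds.append(node)
    return worlds


if __name__ == "__main__":
    for w in enumerate_nested(["fiction", "programming_language"], depth=2):
        print(compile_world(w))
        print()
```

With two world kinds and a nesting depth of 2, the helper yields four configurations. The number of chains grows as the number of kinds raised to the depth, which suggests why a compiler-driven enumeration is more practical than writing each context by hand.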

Potential Impact

Companies relying on LLMs for content generation, AI development firms, cybersecurity firms

