Authors: Guangyin Bao, Qi Zhang, Duoqian Miao, Zixuan Gong, Liang Hu, Ke Liu, Yang Liu, Chongyang Shi

Published on: December 21, 2023

Impact Score: 8.22

arXiv ID: arXiv:2312.13508

Summary

- What is new: Introduces a prototype library that handles missing modalities in multimodal federated learning, improving model performance during both training and testing.

- Why this is important: In multimodal federated learning, clients often lack one or more modalities. This restricts which federated frameworks can be used and lowers model accuracy.

- What the research proposes: A prototype-based method that uses prototypes as masks for missing modalities. The masks yield a task-calibrated training loss and a model-agnostic uni-modality inference strategy.

- Results: Compared with baselines, the method improved inference accuracy by 3.7% when 50% of modalities were missing during training, and by 23.8% in uni-modality inference.
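The idea of using prototypes as masks for missing modalities can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation, and it rests on three assumptions:

- A prototype is the per-class mean embedding of one modality.
- Prototypes are computed only from samples where that modality is present.
- During training, a missing modality is replaced by the prototype of the sample's own class, since the label is known.

The names `build_prototype_library` and `mask_missing` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Vector = List[float]
# One training sample: modality name -> embedding (None if missing), plus class label.
Sample = Tuple[Dict[str, Optional[Vector]], int]


def mean_vector(vectors: Sequence[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def build_prototype_library(
    samples: Sequence[Sample], modalities: Sequence[str]
) -> Dict[str, Dict[int, Vector]]:
    """Assumed prototype: mean embedding per (modality, class), from observed embeddings only."""
    library: Dict[str, Dict[int, Vector]] = {}
    for modality in modalities:
        by_class: Dict[int, List[Vector]] = defaultdict(list)
        for embeddings, label in samples:
            emb = embeddings.get(modality)
            if emb is not None:  # skip samples where this modality is missing
                by_class[label].append(emb)
        library[modality] = {c: mean_vector(vs) for c, vs in by_class.items()}
    return library


def mask_missing(
    embeddings: Dict[str, Optional[Vector]],
    label: int,
    library: Dict[str, Dict[int, Vector]],
) -> Dict[str, Vector]:
    """Training-time masking (assumed): fill each missing modality with its class prototype.

    Raises KeyError if no sample of this class had the modality observed.
    """
    filled: Dict[str, Vector] = {}
    for modality, prototypes in library.items():
        emb = embeddings.get(modality)
        filled[modality] = emb if emb is not None else prototypes[label]
    return filled
```

Example: given an image-and-text sample of class 0 whose text is missing, `mask_missing` keeps the real image embedding. It substitutes the class-0 text prototype for the missing text. The masked sample can then go through the usual fusion model and loss, so samples with missing modalities still contribute to training.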

Technical Details

Technological frameworks used: FedAvg-based Federated Learning
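In FedAvg, the server averages the clients' model parameters, weighting each client by its number of local training samples. The sketch below shows that standard rule on plain Python lists.

It also shows one plausible way a server could merge the clients' prototype libraries: a per-class average weighted by per-class sample counts. That prototype merge and the function names `fedavg` and `aggregate_prototypes` are assumptions for illustration, not details taken from the paper.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Params = Dict[str, Vector]  # parameter name -> flattened values


def fedavg(client_updates: Sequence[Tuple[Params, int]]) -> Params:
    """FedAvg: weight each client's parameters by n_k / n, where n_k is its local sample count."""
    total = sum(n_k for _, n_k in client_updates)
    reference = client_updates[0][0]
    return {
        name: [
            sum(params[name][i] * n_k for params, n_k in client_updates) / total
            for i in range(len(values))
        ]
        for name, values in reference.items()
    }


def aggregate_prototypes(
    client_libraries: Sequence[Tuple[Dict[int, Vector], Dict[int, int]]]
) -> Dict[int, Vector]:
    """Hypothetical server-side merge of one modality's prototypes.

    Each client sends (class -> prototype, class -> sample count); classes a
    client never observed are simply absent from its dictionaries.
    """
    sums: Dict[int, Vector] = {}
    counts: Dict[int, int] = {}
    for prototypes, class_counts in client_libraries:
        for label, proto in prototypes.items():
            n = class_counts[label]
            acc = sums.setdefault(label, [0.0] * len(proto))
            for i, value in enumerate(proto):
                acc[i] += value * n  # accumulate count-weighted prototype
            counts[label] = counts.get(label, 0) + n
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}
```

Weighting by sample count means a client with many examples of a class pulls that class's global prototype further than a client with only a few. This mirrors how FedAvg weights model parameters.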

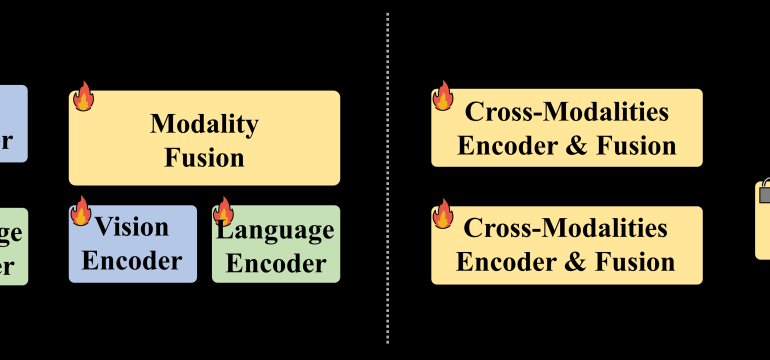

Models used: Modality-specific encoders, modality fusion modules
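The sketch below shows how modality-specific encoders, a fusion module and a classifier could fit together. It wires a toy linear encoder, concatenation fusion and a linear classifier, then runs one assumed form of uni-modality inference. The steps for a test sample whose text is missing are:

1. The label is unknown at test time, so each class's text prototype is tried in turn as the mask for the missing text.
2. The fused vector is scored with the classifier, keeping only the logit of the class whose prototype was used.
3. The class with the highest such logit is returned.

The following are all hypothetical, and the paper's actual architecture and inference rule may differ:

- the linear layers
- the concatenation fusion
- the image/text setup
- the function names

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[Vector]


def linear(weights: Matrix, x: Vector) -> Vector:
    """Matrix-vector product; a stand-in for any encoder or classifier layer."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def fuse(image_emb: Vector, text_emb: Vector) -> Vector:
    """Hypothetical fusion module: plain concatenation of modality embeddings."""
    return image_emb + text_emb


def predict_image_only(
    image_input: Vector,
    image_encoder: Matrix,
    classifier: Matrix,
    text_prototypes: Dict[int, Vector],
) -> int:
    """Assumed uni-modality inference when the text modality is missing."""
    image_emb = linear(image_encoder, image_input)  # modality-specific encoder
    best_label, best_score = -1, float("-inf")
    for label, proto in sorted(text_prototypes.items()):
        # Mask the missing text embedding with class `label`'s prototype,
        # then keep only the logit for that same class.
        score = linear(classifier, fuse(image_emb, proto))[label]
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

Because this rule only needs forward passes through an already-trained fusion model, it does not change the model itself. That property is consistent with the summary's description of the inference strategy as model-agnostic.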

Data used: Not specified.

Potential Impact

Companies that rely on federated learning, especially in healthcare, finance, and IoT, could adopt this approach where clients hold incomplete sets of modalities, or may need to adapt their systems to it.

Want to implement this idea in a business?

We have generated a startup concept here: ProtoSync AI.
