Authors: Zihan Zhou, Honghao Wei, Lei Ying

Published on: September 27, 2023

Impact Score: 8.35

arXiv code: arXiv:2309.15395

Summary

- What is new: Introduces the Pruning-Refinement-Identification (PRI) algorithm, which exploits a structural property of constrained Markov decision processes (CMDPs) called limited stochasticity to identify near-optimal policies with high probability and low regret.

- Why this is important: Existing model-free algorithms for CMDPs do not guarantee convergence to an optimal policy; they offer guarantees only on average performance.

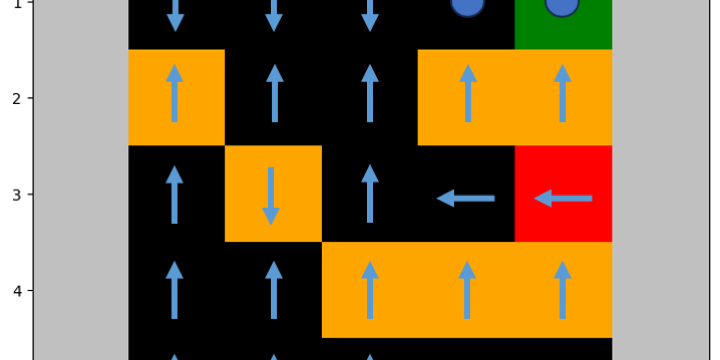

- What the research proposes: The PRI algorithm identifies the steps and states at which stochastic decisions are needed, then fine-tunes those decisions to obtain a near-optimal policy.

- Results: PRI guarantees significantly lower regret and constraint violation than the best existing model-free algorithms, promising more efficient and accurate policy identification.
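
To make the idea of fine-tuning a stochastic decision concrete, here is a toy sketch in Python. It is not the authors' PRI algorithm. It assumes a hypothetical one-step problem with two actions and a single constraint of the form "expected utility >= threshold", and it solves for the mixing probability that makes the constraint exactly tight. The function name, the reward and utility values, and the threshold are all illustrative assumptions.

```python
def refine_mixing_probability(reward, utility, threshold):
    """Return (hi, p): the higher-reward action and the probability of playing it.

    Toy setting (an assumption, not from the paper): one step, two actions,
    one constraint requiring expected utility >= threshold.
    """
    hi = 0 if reward[0] >= reward[1] else 1  # action with the higher reward
    lo = 1 - hi
    if utility[hi] >= threshold:
        return hi, 1.0  # the greedy action is already feasible; no mixing needed
    if utility[lo] < threshold:
        raise ValueError("no feasible policy: neither action meets the threshold")
    # Pick p so the constraint is tight: p * u[hi] + (1 - p) * u[lo] = threshold
    p = (utility[lo] - threshold) / (utility[lo] - utility[hi])
    return hi, p


# Hypothetical numbers: action 0 pays more but contributes no utility.
reward = [1.0, 0.2]
utility = [0.0, 1.0]
threshold = 0.4

hi, p = refine_mixing_probability(reward, utility, threshold)
lo = 1 - hi
expected_reward = p * reward[hi] + (1 - p) * reward[lo]
expected_utility = p * utility[hi] + (1 - p) * utility[lo]
print(hi, p, expected_reward, expected_utility)
```

In this toy case neither deterministic choice is optimal: always playing action 0 violates the constraint, and always playing action 1 gives up reward. Only the single randomized decision, with a carefully tuned probability, is both feasible and reward-maximizing.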

Technical Details

Technological frameworks used: Constrained Markov Decision Processes (CMDPs) with the Pruning-Refinement-Identification (PRI) algorithm

Models used: Model-free learning

Data used: CMDP environments with defined states, actions, and constraints
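
For readers unfamiliar with the setting, the following is a minimal sketch of what a CMDP environment with states, actions, and a constraint might look like in Python. It is an illustration under stated assumptions, not code from the paper: the `TabularCMDP` class, the `evaluate` helper, the finite-horizon tabular form, and the constraint direction (expected cumulative utility >= threshold) are all hypothetical choices made for this example.

```python
from dataclasses import dataclass


@dataclass
class TabularCMDP:
    horizon: int      # number of steps per episode
    transition: list  # transition[s][a][s2] = P(s2 | s, a)
    reward: list      # reward[s][a]
    utility: list     # utility[s][a], the constrained quantity
    threshold: float  # assumed constraint: expected cumulative utility >= threshold


def evaluate(cmdp, policy, start_state=0):
    """Return (expected reward, expected utility) of policy[h][s][a] by backward induction."""
    n_states = len(cmdp.reward)
    v_r = [0.0] * n_states  # reward value of each state after the final step
    v_g = [0.0] * n_states  # utility value of each state after the final step
    for h in reversed(range(cmdp.horizon)):
        new_r, new_g = [], []
        for s in range(n_states):
            q_r = q_g = 0.0
            for a, prob_a in enumerate(policy[h][s]):
                nxt = cmdp.transition[s][a]
                q_r += prob_a * (cmdp.reward[s][a] + sum(p * v for p, v in zip(nxt, v_r)))
                q_g += prob_a * (cmdp.utility[s][a] + sum(p * v for p, v in zip(nxt, v_g)))
            new_r.append(q_r)
            new_g.append(q_g)
        v_r, v_g = new_r, new_g
    return v_r[start_state], v_g[start_state]


# Hypothetical two-state example: action 0 stays put, action 1 switches state.
cmdp = TabularCMDP(
    horizon=2,
    transition=[[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]],
    reward=[[1.0, 0.0], [0.0, 0.0]],   # only staying in state 0 pays reward
    utility=[[0.0, 0.0], [1.0, 1.0]],  # only acting in state 1 earns utility
    threshold=0.5,
)
always_stay = [[[1.0, 0.0], [1.0, 0.0]] for _ in range(cmdp.horizon)]
always_move = [[[0.0, 1.0], [0.0, 1.0]] for _ in range(cmdp.horizon)]

stay_reward, stay_utility = evaluate(cmdp, always_stay)
move_reward, move_utility = evaluate(cmdp, always_move)
print(stay_reward, stay_utility, stay_utility >= cmdp.threshold)
print(move_reward, move_utility, move_utility >= cmdp.threshold)
```

In this example the reward-maximizing deterministic policy violates the constraint while the feasible one earns no reward, which is the kind of tension a CMDP algorithm has to resolve.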

Potential Impact

Companies in logistics, automated trading, healthcare, and other sectors that make decisions under uncertainty could benefit from these insights or adjust their strategies accordingly.

Want to implement this idea in a business?

We have generated a startup concept here: OptiPolicer.
