Authors: Zhe Li, Laurence T. Yang, Bocheng Ren, Xin Nie, Zhangyang Gao, Cheng Tan, Stan Z. Li

Published on: February 03, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.02045

Summary

- What is new: MLIP, a novel framework that integrates language information into the visual domain for medical representation learning, guided by domain-specific medical knowledge.

- Why this is important: Annotated data for medical image analysis is scarce, and existing models underuse paired image-text information because they cannot handle medical visual representations at multiple granularities.

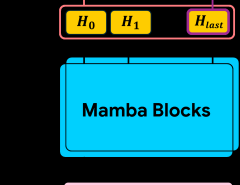

- What the research proposes: The MLIP framework, which uses image-text contrastive learning guided by domain-specific medical knowledge to improve visual representation learning at multiple granularities.

- Results: MLIP outperforms state-of-the-art methods in tasks like image classification, object detection, and semantic segmentation, demonstrating improved transfer performance with limited annotated data.
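
To make the idea of image-text contrastive learning concrete, here is a minimal NumPy sketch of a generic symmetric contrastive (InfoNCE-style) loss over a batch of paired image and report embeddings. It is not MLIP's actual objective, which the summary says also draws on medical knowledge and several granularities. The function names, the `temperature` value, and the random toy embeddings are all assumptions made for illustration.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def image_text_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Generic symmetric contrastive loss; row i of each input is a matched pair.

    image_emb, text_emb: arrays of shape (N, D).
    temperature: illustrative value, not taken from the MLIP paper.
    """
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature          # (N, N) similarity matrix
    idx = np.arange(logits.shape[0])            # matched pairs sit on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # text -> image
    return 0.5 * (loss_i2t + loss_t2i)


# Toy data: "image" embeddings that are noisy copies of the "text" embeddings.
rng = np.random.default_rng(0)
text = rng.normal(size=(4, 8))
aligned = image_text_contrastive_loss(text + 0.01 * rng.normal(size=(4, 8)), text)
shuffled = image_text_contrastive_loss(text[::-1], text)  # deliberately mismatched pairs
```

In this toy setup the correctly paired batch yields a lower loss than the mismatched one, which is the signal an encoder would be trained to minimize.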

Technical Details

Technological frameworks used: MLIP

Models used: Divergence encoder, Token-knowledge-patch alignment, Knowledge-guided category-level contrastive learning
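
The summary names token-knowledge-patch alignment without describing how it works. As a rough illustration of fine-grained alignment in general, the sketch below lets each report token attend over image patches with a softmax and then scores the token against its attended visual feature. This is a generic token-to-patch attention pattern and not the paper's mechanism; in particular, the knowledge component is omitted, and `token_patch_alignment`, the `temperature` value, and the toy data are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def token_patch_alignment(token_emb, patch_emb, temperature=0.1):
    """Generic soft alignment of text tokens to image patches.

    token_emb: (T, D) token embeddings; patch_emb: (P, D) patch embeddings.
    Returns the (T, P) attention map and a (T,) cosine score per token.
    """
    tok = token_emb / np.linalg.norm(token_emb, axis=1, keepdims=True)
    pat = patch_emb / np.linalg.norm(patch_emb, axis=1, keepdims=True)
    attn = softmax(tok @ pat.T / temperature, axis=1)   # each token attends over patches
    attended = attn @ pat                               # (T, D) token-specific visual feature
    attended = attended / np.linalg.norm(attended, axis=1, keepdims=True)
    scores = (tok * attended).sum(axis=1)               # cosine similarity per token
    return attn, scores


# Toy data: two tokens built as noisy copies of patches 2 and 5.
rng = np.random.default_rng(0)
patches = rng.normal(size=(6, 16))
tokens = patches[[2, 5]] + 0.01 * rng.normal(size=(2, 16))
attn, scores = token_patch_alignment(tokens, patches)
```

Here each token's attention should peak on the patch it was derived from; a local alignment loss could then reward high per-token scores for matched image-report pairs.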

Data used: Medical reports and corresponding images

Potential Impact

Healthcare imaging, AI-driven diagnostic tools, Medical research organizations
