Authors: Husein Zolkepli, Aisyah Razak, Kamarul Adha, Ariff Nazhan

Published on: January 24, 2024

Impact Score: 8.22

arXiv ID: arXiv:2401.13565

Summary

- What is new: Continued pretraining of Mistral 7B, a large language model, with models released at context lengths of 4096 and 32768 tokens, plus Malaysian Mistral, a 16384-context instruction-tuned model aimed at capturing nuanced language intricacies.

- Why this is important: Many applications need language models that can understand and generate text over longer contexts while capturing finer nuances of a language.

- What the research proposes: Extending the pretraining context lengths and introducing a specialized instruction-tuned model, Malaysian Mistral, to enhance language understanding and generation capabilities.

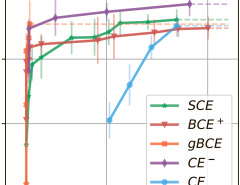

- Results: Malaysian Mistral outperformed leading models such as ChatGPT3.5 and Claude 2 on the Tatabahasa (Malay grammar) test set, particularly when fine-tuned with instructions.

Technical Details

Technological frameworks used: Continued pretraining of Mistral 7B at extended context lengths, followed by instruction tuning.

Models used: Mistral 7B, ChatGPT3.5, Claude 2

Data used: A 32.6 GB dataset, equivalent to 1.1 billion tokens
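
To give a feel for what the context lengths mean for a token budget of this size, here is a minimal Python sketch. It assumes the corpus is tokenized into one long stream and packed into fixed-length blocks, a common convention that this summary does not confirm for the paper. The helper names `pack_tokens` and `full_sequences` are hypothetical and are not taken from the authors' code.

```python
from typing import Iterable, Iterator, List


def pack_tokens(token_ids: Iterable[int], context_length: int) -> Iterator[List[int]]:
    """Yield consecutive blocks of exactly `context_length` token ids.

    Assumption: a trailing partial block is dropped. This is one common
    convention, not necessarily what the paper's pipeline does.
    """
    block: List[int] = []
    for token_id in token_ids:
        block.append(token_id)
        if len(block) == context_length:
            yield block
            block = []


def full_sequences(total_tokens: int, context_length: int) -> int:
    """Number of full-length training sequences a token budget yields."""
    return total_tokens // context_length


# "1.1 billion tokens", as reported in the summary above.
TOTAL_TOKENS = 1_100_000_000

# The two pretraining context lengths mentioned in the summary.
for context_length in (4096, 32768):
    print(context_length, full_sequences(TOTAL_TOKENS, context_length))
```

Under these assumptions, the same budget gives 268,554 full sequences at 4096 tokens but only 33,569 at 32768 tokens, so each longer sequence carries eight times as much context per training example.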

Potential Impact

Language technology providers, educational platforms, content creation industries, and AI-based communication tools could benefit or face disruption.

