Authors: Can Cui, Zichong Yang, Yupeng Zhou, Yunsheng Ma, Juanwu Lu, Lingxi Li, Yaobin Chen, Jitesh Panchal, Ziran Wang

Published on: December 14, 2023

Impact Score: 8.45

arXiv ID: arXiv:2312.09397

Summary

- What is new: Introduces Talk2Drive, the first real-world application of a Large Language Model (LLM) in autonomous driving for understanding and executing human verbal commands.

- Why this is important: Autonomous vehicles struggle with accurately understanding human commands and personalizing driving experiences.

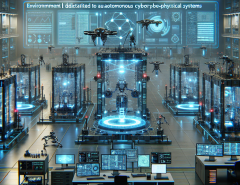

- What the research proposes: The Talk2Drive framework uses a speech recognition module to convert verbal commands to text, then applies LLMs for reasoning to execute these commands with a focus on safety, efficiency, and comfort.

- Results: Achieved a 100% success rate in command execution and reduced the takeover rate by up to 90.1% in real-world experiments.
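
The pipeline described above (speech to text, LLM reasoning, then execution under safety constraints) can be sketched roughly as follows. This is a minimal illustration only, not the authors' implementation: the names `DrivingParams`, `handle_command`, `fake_transcribe`, and `fake_reason`, the choice of speed and acceleration as the adjustable parameters, and the numeric bounds are all assumptions made for the example. The summary does not specify which speech recognizer, which LLM, or which vehicle interface Talk2Drive uses, so both stages are passed in as plain callables and replaced with stubs here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DrivingParams:
    """Hypothetical set of tunable driving parameters (not from the paper)."""
    target_speed_mps: float
    max_accel_mps2: float


# Assumed safety bounds for illustration; real limits are not given in the summary.
SPEED_LIMIT_MPS = 30.0
ACCEL_LIMIT_MPS2 = 3.0


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def enforce_safety(params: DrivingParams) -> DrivingParams:
    """Clamp whatever the reasoning step proposes into the assumed safe range."""
    return DrivingParams(
        target_speed_mps=clamp(params.target_speed_mps, 0.0, SPEED_LIMIT_MPS),
        max_accel_mps2=clamp(params.max_accel_mps2, 0.0, ACCEL_LIMIT_MPS2),
    )


def handle_command(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    reason: Callable[[str, DrivingParams], DrivingParams],
    current: DrivingParams,
) -> DrivingParams:
    """Run one verbal command through the three stages of the pipeline."""
    text = transcribe(audio)          # 1. speech recognition: audio -> text
    proposed = reason(text, current)  # 2. LLM reasoning: text -> proposed parameters
    return enforce_safety(proposed)   # 3. safety check before execution


def fake_transcribe(audio: bytes) -> str:
    """Stub standing in for a real speech recognition module."""
    return audio.decode("utf-8")


def fake_reason(text: str, current: DrivingParams) -> DrivingParams:
    """Stub standing in for an LLM call: a keyword rule instead of real reasoning."""
    if "hurry" in text.lower():
        return DrivingParams(current.target_speed_mps * 2, current.max_accel_mps2 * 2)
    return current


if __name__ == "__main__":
    current = DrivingParams(target_speed_mps=20.0, max_accel_mps2=2.0)
    print(handle_command(b"I'm in a hurry", fake_transcribe, fake_reason, current))
```

Keeping the safety clamp as a separate final stage means a mistaken or overly aggressive suggestion from the reasoning step cannot reach the vehicle unchecked, which is one plausible way to honor the safety focus the summary mentions.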

Technical Details

Technological frameworks used: Talk2Drive

Models used: Large Language Models (LLMs), speech recognition module

Data used: Real-world verbal commands

Potential Impact

This work could affect autonomous vehicle manufacturers, ride-sharing companies, and developers of automotive safety systems.

