Authors: Rohin Manvi, Samar Khanna, Marshall Burke, David Lobell, Stefano Ermon

Published on: February 05, 2024

Impact Score: 8.27

Arxiv code: Arxiv:2402.0268

Summary

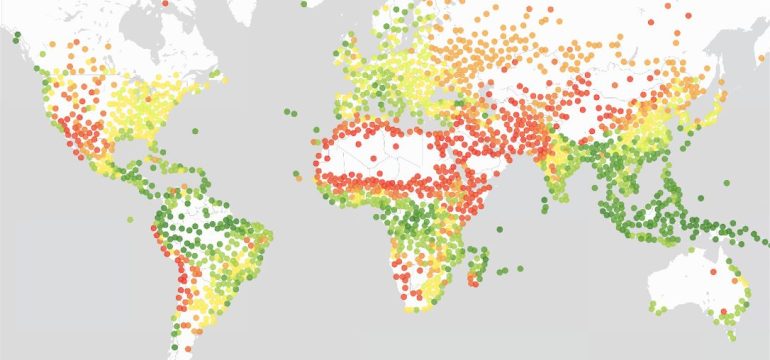

- What is new: This research examines the knowledge and biases of large language models (LLMs) through the lens of geography, using geospatial predictions to reveal how these models perpetuate location-based biases.

- Why this is important: LLMs inherit biases from their training data. When these biases are reproduced in model outputs, they can cause societal harm.

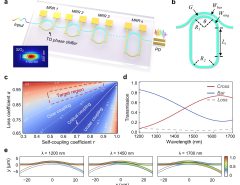

- What the research proposes: The study proposes analyzing LLM outputs through geospatial predictions to identify and assess geographic biases.

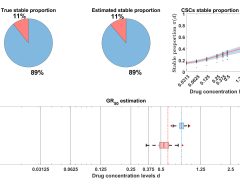

- Results: The study found that LLMs can make accurate geospatial predictions but show significant bias against locations with lower socioeconomic conditions on a range of subjective topics.
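The two findings above can be illustrated with rank correlations. The sketch below is our own simplified illustration and is not the authors' method. It assumes an LLM has already been prompted to rate each location on a 1 to 10 scale. Accuracy is measured as Spearman's rank correlation between the model's ratings and ground truth. A rough bias signal is the correlation between ratings on a subjective topic and a socioeconomic proxy. All location names, ratings, and the `income_index` values are hypothetical, and the paper's own bias metric may be defined differently.

```python
from statistics import mean


def ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of 0-based positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data for five made-up locations (not from the paper).
locations = ["A", "B", "C", "D", "E"]
llm_population_rating = [9, 7, 5, 3, 1]         # LLM's 1-10 rating of population
true_population_millions = [12.0, 8.5, 4.1, 2.0, 0.3]
llm_subjective_rating = [8, 8, 6, 4, 3]         # LLM's 1-10 rating on a subjective topic
income_index = [0.9, 0.8, 0.6, 0.4, 0.2]        # hypothetical socioeconomic proxy

# High rho: the model's ratings track the ground-truth ordering.
accuracy = spearman(llm_population_rating, true_population_millions)
# Positive rho: wealthier locations get more favorable subjective ratings.
bias = spearman(llm_subjective_rating, income_index)
print(f"accuracy rho = {accuracy:.3f}, bias rho = {bias:.3f}")
```

A subjective topic should have no objective link to income. A strong positive `bias` value therefore suggests the model rates wealthier places more favorably, which is the kind of pattern the study reports. A real analysis would use many locations and a proper socioeconomic dataset.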

Technical Details

Technological frameworks used: Not specified

Models used: Large Language Models (LLMs)

Data used: Geospatial data

Potential Impact

This research is relevant to companies that use LLMs for geospatial predictions, content generation, and decision-making. These include large technology companies, GIS providers, and other sectors that rely on AI for location-based insights.

Want to implement this idea in a business?

We have generated a startup concept here: GeoEquity.
