Authors: Duo Wu, Xianda Wang, Yaqi Qiao, Zhi Wang, Junchen Jiang, Shuguang Cui, Fangxin Wang

Published on: February 04, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.02338

Summary

- What is new: This work introduces NetLLM, the first framework to adapt large language models (LLMs) to networking tasks, aiming for a ‘one model for all’ approach that enhances performance and generalization across various networking problems.

- Why this is important: Current deep learning approaches in networking require intensive manual design for different tasks and often perform poorly on unseen data distributions.

- What the research proposes: NetLLM adapts LLMs for networking, leveraging their pre-trained knowledge and inference capabilities to address diverse networking problems efficiently and with strong generalization.

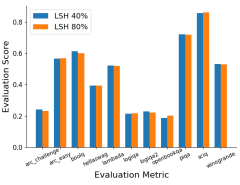

- Results: NetLLM outperforms state-of-the-art algorithms by 10.1-36.6% for viewport prediction, 14.5-36.6% for adaptive bitrate streaming, and 6.8-41.3% for cluster job scheduling, also exhibiting superior generalization across tasks.
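The "one model for all" idea in the summary can be pictured as a single shared model reused across tasks, with only thin task-specific input and output adapters around it. The sketch below is a toy illustration of that pattern under stated assumptions. It is not NetLLM's architecture or code: `shared_backbone`, `TaskAdapter`, `ADAPTERS`, and `run_task` are hypothetical names, and the "backbone" is a fixed arithmetic transform standing in for a pre-trained LLM.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Vector = List[float]


def shared_backbone(features: Vector) -> Vector:
    # Toy stand-in for a frozen pre-trained model: one fixed transform
    # that every task reuses. A real LLM would sit here instead.
    return [2.0 * x + 1.0 for x in features]


@dataclass
class TaskAdapter:
    encode: Callable[[Any], Vector]  # task input -> backbone features
    decode: Callable[[Vector], Any]  # backbone output -> task answer


def argmax(values: Vector) -> int:
    # Index of the largest value (first one wins on ties).
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical adapters for the three tasks named in the summary.
ADAPTERS: Dict[str, TaskAdapter] = {
    # Viewport prediction: a (yaw, pitch) reading in, a numeric prediction out.
    "vp": TaskAdapter(
        encode=lambda view: [view["yaw"], view["pitch"]],
        decode=lambda h: {"yaw": h[0], "pitch": h[1]},
    ),
    # Adaptive bitrate streaming: per-bitrate scores in, chosen index out.
    "abr": TaskAdapter(encode=lambda scores: list(scores), decode=argmax),
    # Cluster job scheduling: per-job scores in, index of the next job out.
    "cjs": TaskAdapter(encode=lambda scores: list(scores), decode=argmax),
}


def run_task(task: str, raw_input: Any) -> Any:
    # Same backbone for every task; only the adapter changes.
    adapter = ADAPTERS[task]
    return adapter.decode(shared_backbone(adapter.encode(raw_input)))
```

For example, `run_task("abr", [0.1, 0.9, 0.4])` selects index `1`, and `run_task("vp", {"yaw": 1.0, "pitch": 0.5})` goes through the same `shared_backbone` but returns a dictionary of numeric values. The point of the design is that adding a task means adding an adapter, not redesigning the model.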

Technical Details

Technological frameworks used: NetLLM

Models used: Large Language Models (LLMs) adapted for networking

Data used: Task data for viewport prediction (VP), adaptive bitrate streaming (ABR), and cluster job scheduling (CJS)

Potential Impact

This innovation could disrupt markets related to cloud networking, streaming services, online gaming, and any business dependent on efficient cluster job scheduling and network traffic management.
