Authors: Vinitra Swamy, Julian Blackwell, Jibril Frej, Martin Jaggi, Tanja Käser

Published on: February 05, 2024

Impact Score: 8.6

arXiv ID: arXiv:2402.02933

Summary

- What is new: InterpretCC introduces a new approach to neural network interpretability by sparsely activating features based on human-specified topics, achieving human-centric interpretability without sacrificing model performance.

- Why this is important: Current interpretability methods force a tradeoff. They require users to trust an approximate explanation, produce explanations that are hard to understand, or compromise model performance. Each of these is a problem in human-facing domains.

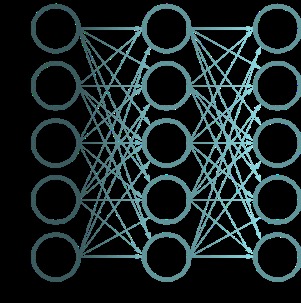

- What the research proposes: InterpretCC is an interpretable-by-design neural network architecture that adaptively and sparsely activates features, improving interpretability while maintaining high performance.

- Results: The architecture was evaluated on text and tabular data from several domains, where it achieved performance comparable to state-of-the-art models while providing actionable and trustworthy explanations.
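
To make "adaptively and sparsely activates features" concrete, here is a minimal sketch of per-input feature gating. It is an illustration under stated assumptions, not the authors' implementation: the linear gate, the 0.5 threshold, the feature names, and every parameter value are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gate_features(features, gate_params, threshold=0.5):
    """Decide, for this specific input, which features stay active.

    gate_params maps each feature name to a hypothetical (scale, offset)
    pair; a feature is kept when sigmoid(scale * value + offset) >= threshold.
    """
    active = []
    for name, value in features.items():
        scale, offset = gate_params[name]
        if sigmoid(scale * value + offset) >= threshold:
            active.append(name)
    return active


def predict(features, gate_params, weights, bias=0.0):
    """Predict from the active features only; gated-out features contribute nothing."""
    active = gate_features(features, gate_params)
    score = bias + sum(weights[name] * features[name] for name in active)
    return sigmoid(score), active


# Hypothetical student-activity features (illustrative values only).
features = {"videos_watched": 0.8, "quiz_attempts": 0.3, "forum_posts": 0.1}
gate_params = {
    "videos_watched": (4.0, -1.0),
    "quiz_attempts": (4.0, -2.0),
    "forum_posts": (4.0, -2.0),
}
weights = {"videos_watched": 1.5, "quiz_attempts": 0.7, "forum_posts": 0.2}

probability, active = predict(features, gate_params, weights)
print(active)
```

The list of active features doubles as the explanation: the prediction depends on those inputs and on nothing else, so no separate post-hoc approximation is needed.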

Technical Details

Technological frameworks used: Interpretable Conditional Computation

Models used: Interpretable mixture-of-experts model

Data used: Text and tabular data from online education, news classification, breast cancer diagnosis, and review sentiment.
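
One way to picture the interpretable mixture-of-experts model is as routing over human-specified feature groups: a router selects a small number of groups for each input, and each expert sees only the features in its own group. The sketch below assumes a linear router, linear experts, and top-1 routing; the group names, feature names, and weights are all hypothetical and are not taken from the paper.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a dict of group scores."""
    peak = max(scores.values())
    exps = {group: math.exp(s - peak) for group, s in scores.items()}
    total = sum(exps.values())
    return {group: e / total for group, e in exps.items()}


def route(x, groups, router_weights, top_k=1):
    """Return renormalized routing weights for the top_k highest-scoring groups."""
    scores = {
        group: sum(router_weights[f] * x[f] for f in feats)
        for group, feats in groups.items()
    }
    probs = softmax(scores)
    chosen = sorted(probs, key=probs.get, reverse=True)[:top_k]
    norm = sum(probs[g] for g in chosen)
    return {g: probs[g] / norm for g in chosen}


def predict(x, groups, router_weights, expert_weights, top_k=1):
    """Weighted sum of the selected experts; each expert reads only its own group."""
    routing = route(x, groups, router_weights, top_k)
    output = 0.0
    for group, weight in routing.items():
        expert_out = sum(expert_weights[group][f] * x[f] for f in groups[group])
        output += weight * expert_out
    return output, routing


# Hypothetical topic groups over illustrative student-activity features.
groups = {
    "engagement": ["videos_watched", "forum_posts"],
    "assessment": ["quiz_attempts"],
}
x = {"videos_watched": 0.8, "quiz_attempts": 0.3, "forum_posts": 0.1}
router_weights = {"videos_watched": 1.0, "quiz_attempts": 1.0, "forum_posts": 1.0}
expert_weights = {
    "engagement": {"videos_watched": 2.0, "forum_posts": -1.0},
    "assessment": {"quiz_attempts": 0.5},
}

prediction, routing = predict(x, groups, router_weights, expert_weights)
print(routing)
```

Because the routing dictionary names the groups that were used, the explanation can be stated in the vocabulary the humans chose, for example "this prediction relied on engagement features".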

Potential Impact

Education, healthcare, digital media, and customer service industries could benefit from adopting InterpretCC for transparent and accurate predictions.
