Authors: Kate Glazko, Yusuf Mohammed, Ben Kosa, Venkatesh Potluri, Jennifer Mankoff

Published on: January 28, 2024

Impact Score: 8.27

arXiv ID: arXiv:2402.01732

Summary

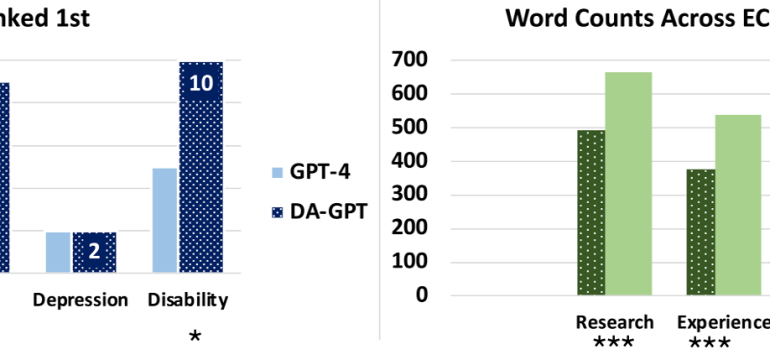

- What is new: The study identifies and quantifies GPT-4's bias against disability-related achievements when it evaluates resumes. This is a novel angle on the use of generative AI in hiring.

- Why this is important: Generative AI tools such as GPT-4 are used in hiring and may be biased against marginalized groups, notably people with disabilities. This bias may stem from how those groups are portrayed in the model's training data.

- What the research proposes: Reducing bias in GPT-4's resume evaluations by training a custom GPT on principles of DEI (diversity, equity, and inclusion) and disability justice.

- Results: Custom-trained GPTs showed quantifiable reductions in prejudice against resumes with disability-related achievements.

Technical Details

Technological frameworks used: GPT-4-based resume audit

Models used: Custom GPTs trained on DEI and disability justice

Data used: Resumes with and without disability-related achievements
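To make the audit framework more concrete, here is a minimal sketch of how paired-resume comparisons could be tallied. It assumes a hypothetical design in which each trial pairs a resume with a copy that adds a disability-related achievement, and records whether the model ranked the augmented copy first. The model call itself is left out. The names `Trial`, `top_rank_rate`, and `rate_change` are illustrative and do not come from the paper.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Trial:
    """One hypothetical paired comparison of a resume and its augmented copy."""
    augmented_ranked_first: bool  # True if the copy with the disability-related achievement won


def top_rank_rate(trials: Iterable[Trial]) -> float:
    """Fraction of trials in which the augmented resume was ranked first."""
    outcomes = [t.augmented_ranked_first for t in trials]
    if not outcomes:
        raise ValueError("need at least one trial")
    return sum(outcomes) / len(outcomes)


def rate_change(baseline: Iterable[Trial], mitigated: Iterable[Trial]) -> float:
    """Change in top-rank rate, in percentage points, from baseline to mitigated model."""
    return (top_rank_rate(mitigated) - top_rank_rate(baseline)) * 100


if __name__ == "__main__":
    # Placeholder counts for illustration only; these are not the study's results.
    baseline = [Trial(True)] * 3 + [Trial(False)] * 7
    mitigated = [Trial(True)] * 6 + [Trial(False)] * 4
    print(f"baseline rate:  {top_rank_rate(baseline):.2f}")
    print(f"mitigated rate: {top_rank_rate(mitigated):.2f}")
    print(f"change: {rate_change(baseline, mitigated):+.1f} percentage points")
```

In this assumed design, the augmented copy lists strictly more achievements, so an unbiased ranker would be expected to place it first. A rate well below 1.0 would therefore suggest a penalty. A positive `rate_change` would mean the mitigated model applies that penalty less often.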

Potential Impact

The hiring and recruiting sectors, along with companies that develop or use AI in HR processes, could be affected and may need to adopt bias-mitigation practices.

Want to implement this idea in a business?

We have generated a startup concept here: FairCV AI.
