Authors: Tsu-Jui Fu, Wenze Hu, Xianzhi Du, William Yang Wang, Yinfei Yang, Zhe Gan

Published on: September 29, 2023

Impact Score: 8.07

arXiv ID: 2309.17102

Summary

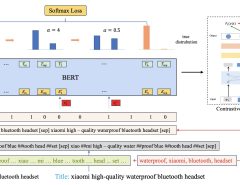

- What is new: The research introduces MGIE (MLLM-Guided Image Editing), a method that uses Multimodal Large Language Models (MLLMs) to better understand and follow brief human instructions for image editing.

- Why this is important: Human instructions are often too brief for current instruction-based image editing methods to capture and follow.

- What the research proposes: MGIE, which leverages MLLMs to derive expressive instructions for image editing, providing explicit guidance for the editing model.

- Results: MGIE showed notable improvements in Photoshop-style modifications, global photo optimization, and local editing according to automatic metrics and human evaluation, while keeping inference efficiency competitive.

Technical Details

Technological frameworks used: Multimodal Large Language Models (MLLMs)

Models used: MGIE (MLLM-Guided Image Editing)

Data used: Image editing instructions and corresponding image data
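
To make the two-stage idea in the summary concrete, here is a minimal sketch of the data flow: an MLLM first turns a brief instruction into a more expressive one, and that expanded instruction then guides the editing model. Every name here (`EditRequest`, `mllm_guided_edit`, `stub_instructor`, `stub_editor`) is hypothetical and not taken from the MGIE code. The stubs stand in for real models, and passing the guidance as plain text is a simplifying assumption that may differ from how the paper connects the two components.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# NOTE: all names in this sketch are hypothetical illustrations,
# not the API of the MGIE implementation.


@dataclass
class EditRequest:
    image: bytes       # raw input image (placeholder type for this sketch)
    instruction: str   # brief human instruction, e.g. "make it brighter"


# Stage 1: an MLLM reads the image and the brief instruction and
# returns a more expressive instruction.
Instructor = Callable[[bytes, str], str]

# Stage 2: an editing model applies the expressive instruction to the image.
Editor = Callable[[bytes, str], bytes]


def mllm_guided_edit(
    request: EditRequest, instructor: Instructor, editor: Editor
) -> Tuple[str, bytes]:
    """Run both stages and return (expressive instruction, edited image)."""
    expressive = instructor(request.image, request.instruction)
    edited = editor(request.image, expressive)
    return expressive, edited


def stub_instructor(image: bytes, instruction: str) -> str:
    # Stand-in for an MLLM: a real model would describe the intended
    # result in detail based on the image content.
    return f"{instruction} (expanded with details from a {len(image)}-byte image)"


def stub_editor(image: bytes, expressive_instruction: str) -> bytes:
    # Stand-in for an editing model: it only tags the image bytes with the
    # guidance so the data flow is visible. No real editing happens here.
    return image + b"|" + expressive_instruction.encode("utf-8")


if __name__ == "__main__":
    request = EditRequest(image=b"abc", instruction="make it brighter")
    expressive, edited = mllm_guided_edit(request, stub_instructor, stub_editor)
    print(expressive)
    print(edited)
```

Keeping the instructor and editor as separate callables mirrors the split described in the summary: the editing model never sees the brief instruction directly, only the guidance derived from it.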

Potential Impact

This technology could disrupt the graphic design software market, benefiting companies involved in digital content creation, image editing software, and AI-driven creative tools.

Want to implement this idea in a business?

We have generated a startup concept here: ArtiEdit.
