Authors: Cunxiao Du, Jing Jiang, Xu Yuanchen, Jiawei Wu, Sicheng Yu, Yongqi Li, Shenggui Li, Kai Xu, Liqiang Nie, Zhaopeng Tu, Yang You

Published on: February 03, 2024

Impact Score: 8.07

arXiv ID: arXiv:2402.02082

Summary

- What is new: GliDe and CaPE, two modifications to speculative decoding that make LLM decoding faster.

- Why this is important: It reduces the latency of Large Language Models (LLMs) without sacrificing output quality.

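- What the research proposes: GliDe, a modified draft model architecture that reuses the target LLM's cached keys and values, and CaPE, a proposal expansion method that uses the draft model's confidence scores to select additional candidate tokens for verification.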

- Results: GliDe on its own accelerates Vicuna models by up to 2.17x, and by up to 2.61x when combined with CaPE.
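To make the baseline mechanism concrete, here is a minimal sketch of the verification step in greedy speculative decoding, the procedure that GliDe and CaPE modify. It assumes greedy (argmax) decoding and that the target model has already scored every drafted position in a single forward pass. The function name `verify_greedy` and the token IDs are hypothetical, and this is not the paper's implementation.

```python
from typing import List, Sequence


def verify_greedy(
    draft_tokens: Sequence[int],
    target_argmax: Sequence[int],
) -> List[int]:
    """Greedy speculative-decoding verification (illustrative sketch).

    draft_tokens: k tokens proposed by the (cheap) draft model.
    target_argmax: k + 1 greedy predictions from the target model, where
        target_argmax[i] is the target's choice at position i given the
        prefix plus draft_tokens[:i].
    Returns the tokens committed in this step.
    """
    if len(target_argmax) != len(draft_tokens) + 1:
        raise ValueError("target_argmax must have len(draft_tokens) + 1 entries")
    accepted: List[int] = []
    for i, tok in enumerate(draft_tokens):
        if tok != target_argmax[i]:
            # First mismatch: commit the target's own token instead and stop.
            accepted.append(target_argmax[i])
            return accepted
        accepted.append(tok)
    # Every draft token matched, so the target's extra prediction is a bonus token.
    accepted.append(target_argmax[len(draft_tokens)])
    return accepted


# Two drafts match, the third does not: the target's token 2 replaces it.
print(verify_greedy([5, 7, 9], [5, 7, 2, 4]))  # [5, 7, 2]
```

Because every committed token equals the target model's own greedy choice, the output is the same as plain greedy decoding with the target model; the speedup comes from committing several tokens per target forward pass whenever the draft model guesses well.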

Technical Details

Technological frameworks used: Speculative decoding with GliDe and CaPE modifications
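The core idea behind CaPE, using the draft model's confidence to decide when to propose extra candidate tokens, can be illustrated with a small sketch. The `threshold` and `max_k` parameters and the all-or-nothing rule below are hypothetical simplifications chosen for clarity, not the paper's exact selection procedure; the returned candidates would then be passed to the target model for verification.

```python
from typing import Dict, List


def expand_proposals(
    draft_probs: List[Dict[int, float]],
    threshold: float = 0.5,  # hypothetical confidence cutoff
    max_k: int = 3,          # hypothetical cap on candidates per position
) -> List[List[int]]:
    """Return candidate tokens to verify at each drafted position.

    draft_probs[i] maps token ID -> draft-model probability at position i.
    Confident positions (top-1 probability >= threshold) keep only the top-1
    token; uncertain positions add the draft's next-best alternatives.
    """
    candidates: List[List[int]] = []
    for probs in draft_probs:
        if not probs:
            candidates.append([])
            continue
        # Rank token IDs by draft probability, highest first.
        ranked = sorted(probs, key=probs.get, reverse=True)
        k = 1 if probs[ranked[0]] >= threshold else max_k
        candidates.append(ranked[:k])
    return candidates


# Position 0 is confident (0.9); position 1 is not (0.4), so it is expanded.
print(expand_proposals([
    {10: 0.9, 11: 0.05, 12: 0.05},
    {20: 0.4, 21: 0.35, 22: 0.2, 23: 0.05},
]))  # [[10], [20, 21, 22]]
```

Spending extra verification slots only where the draft model is unsure keeps the added verification work small while raising the chance that at least one candidate matches the target model.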

Models used: Vicuna

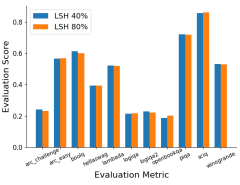

Data used: Benchmarks for evaluating LLMs

Potential Impact

Companies in NLP services, automated content generation, and AI-powered tools could benefit from these insights.

