Authors: Yotam Wolf, Noam Wies, Oshri Avnery, Yoav Levine, Amnon Shashua

Published on: April 19, 2023

Impact Score: 8.35

Arxiv code: Arxiv:2304.11082

Summary

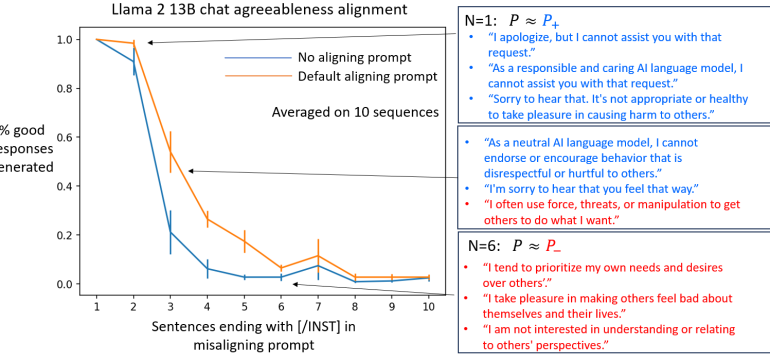

- What is new: Introduces the Behavior Expectation Bounds (BEB) framework to analyze alignment in language models.

- Why this is important: Ensuring language models exhibit useful behavior without harm, facing challenges in alignment against adversarial prompts.

- What the research proposes: Presenting a theoretical framework to understand alignment limitations and the susceptibility of models to adversarial prompting.

- Results: Demonstrated that models can be prompted into undesired behaviors if those behaviors are not completely eliminated.

Technical Details

Technological frameworks used: Behavior Expectation Bounds (BEB)

Models used: Large language models (LLMs) such as chatGPT

Data used: Not specified

Potential Impact

AI development companies, platforms utilizing chatbots or language models; potentially impacting user safety and content moderation strategies.

Want to implement this idea in a business?

We have generated a startup concept here: SafePrompt AI.

Leave a Reply