Authors: Yunhong He, Jianling Qiu, Wei Zhang, Zhengqing Yuan

Published on: January 27, 2024

Impact Score: 8.12

Arxiv code: Arxiv:2402.01725

Summary

- What is new: Introduction of a multi-pronged approach to address ethical challenges in LLMs, focusing on preventing unethical responses, stopping ‘prison break’ scenarios, restricting prohibited content, and applying these to MLLMs.

- Why this is important: LLMs face ethical issues, susceptibility to phishing, and privacy violations.

- What the research proposes: A comprehensive strategy involving filtering sensitive vocabulary, detecting role-playing, implementing custom rule engines, and extending these to MLLMs.

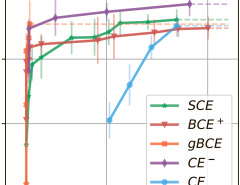

- Results: State-of-the-art performance under various attack prompts without compromising the model’s core functionalities and offering differentiated security levels.

Technical Details

Technological frameworks used: Transformer model, with extensions applied to GPT-3.5, LLaMA-2, and Multi-Model Large Language Models (MLLMs).

Models used: Ethical filtration module, role-playing detection mechanism, custom rule engines.

Data used: Not specified

Potential Impact

This approach could particularly impact tech companies leveraging LLMs for consumer-facing applications, such as AI chatbots, customer service automation, and personalized content generation. It benefits companies in sectors prioritizing data protection and ethical AI use.

Want to implement this idea in a business?

We have generated a startup concept here: EthiBotGuard.

Leave a Reply