Authors: Yuxuan Huang, Lida Shi, Anqi Liu, Hao Xu

Published on: December 18, 2023

Impact Score: 8.15

arXiv ID: arXiv:2312.11282

Summary

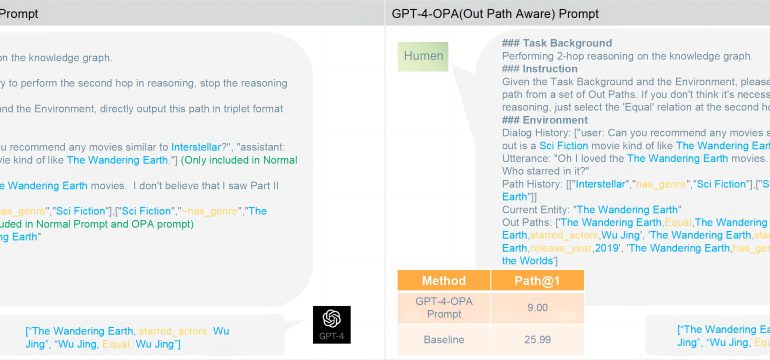

- What is new: LLM-ARK, an LLM-based reasoning agent that uses Full Textual Environment (FTE) prompts and Proximal Policy Optimization (PPO) to reason more accurately over Knowledge Graphs (KGs).

- Why this is important: Existing Large Language Models, including GPT-4, struggle with conversational reasoning on Knowledge Graphs because they have limited awareness of the KG environment and are difficult to optimize for this kind of reasoning.

- What the research proposes: LLM-ARK combines a Full Textual Environment prompt structure with reinforcement learning (Proximal Policy Optimization) to improve reasoning accuracy.

- Results: LLaMA-2-7B-ARK scored 36.39% on the target@1 metric, 5.28 percentage points above the previous state-of-the-art model; GPT-4 scored 14.91% on the same metric.
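To make the target@1 figure concrete, here is a minimal sketch of how a target@k style score could be computed. It assumes the metric is the fraction of dialogue turns whose gold target entity appears among the top-k predicted entities; the paper's exact definition may differ. The function name `target_at_k` and the toy entities are hypothetical, chosen only for illustration.

```python
from typing import Sequence


def target_at_k(
    ranked_predictions: Sequence[Sequence[str]],
    gold_targets: Sequence[str],
    k: int = 1,
) -> float:
    """Fraction of examples whose gold target is among the top-k predictions.

    Assumption: this mirrors a standard hit@k computation; it is not taken
    from the paper's evaluation code.
    """
    if len(ranked_predictions) != len(gold_targets):
        raise ValueError("predictions and gold targets must have the same length")
    if not gold_targets:
        return 0.0
    hits = sum(
        1
        for preds, gold in zip(ranked_predictions, gold_targets)
        if gold in preds[:k]
    )
    return hits / len(gold_targets)


# Toy data (hypothetical): each inner list is ranked best-first.
predictions = [
    ["Christopher Nolan", "Inception"],
    ["Drama", "Thriller"],
    ["J. K. Rowling", "Fantasy"],
]
gold = ["Christopher Nolan", "Thriller", "Fantasy"]

score_at_1 = target_at_k(predictions, gold, k=1)
score_at_2 = target_at_k(predictions, gold, k=2)
print(f"target@1 = {score_at_1:.2%}, target@2 = {score_at_2:.2%}")
```

Under this reading, a score of 36.39% means the top-ranked prediction matched the gold target in roughly 36 out of every 100 evaluated turns.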

Technical Details

Technological frameworks used: Proximal Policy Optimization

Models used: LLM-ARK (LLaMA-2-7B-ARK), GPT-4

Data used: OpenDialKG dataset
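For readers unfamiliar with Proximal Policy Optimization, the sketch below shows the standard clipped surrogate objective at its core, written in plain Python. It is a generic illustration of PPO, not the authors' training code; the function name `ppo_clip_loss`, the clipping value of 0.2, and the toy numbers are assumptions.

```python
import math
from typing import Sequence


def ppo_clip_loss(
    new_logps: Sequence[float],
    old_logps: Sequence[float],
    advantages: Sequence[float],
    clip_eps: float = 0.2,  # assumed value; a common default, not from the paper
) -> float:
    """Negative PPO clipped surrogate objective, averaged over samples."""
    if not (len(new_logps) == len(old_logps) == len(advantages)):
        raise ValueError("all inputs must have the same length")
    if not advantages:
        raise ValueError("at least one sample is required")
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logps, old_logps, advantages):
        # Probability ratio between the updated policy and the old policy.
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio inside [1 - eps, 1 + eps] to limit the update size.
        clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    # Negate because optimizers minimize, while PPO maximizes the surrogate.
    return -total / len(advantages)


# Toy example (hypothetical numbers): two sampled actions.
loss = ppo_clip_loss(
    new_logps=[math.log(0.5), math.log(0.1)],
    old_logps=[math.log(0.25), math.log(0.2)],
    advantages=[1.0, -1.0],
)
print(f"PPO clipped loss: {loss:.4f}")
```

The clipping is what keeps each policy update close to the previous policy, which tends to make reinforcement learning on a large model more stable than an unclipped policy gradient.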

Potential Impact

Most relevant to tech companies working on AI-driven conversational agents, knowledge graph technologies, and customer service automation.

