Authors: Shiyuan Yang, Liang Hou, Haibin Huang, Chongyang Ma, Pengfei Wan, Di Zhang, Xiaodong Chen, Jing Liao

Published on: February 05, 2024

Impact Score: 8.15

arXiv ID: 2402.03162

Summary

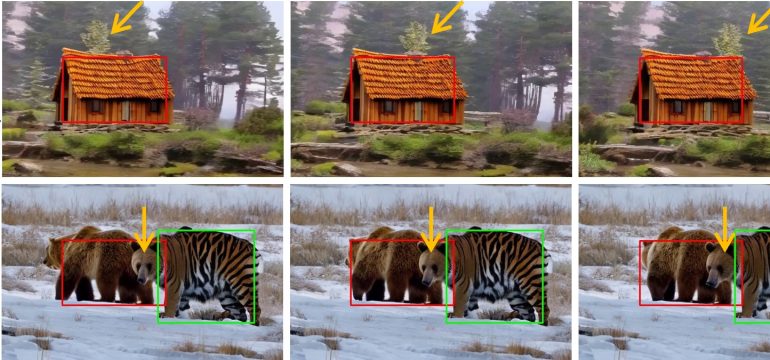

- What is new: Introduction of Direct-a-Video, a system that enables separate control of object motion and camera movement for video creation.

- Why this is important: Existing text-to-video models offer little flexibility to control object motion and camera movement independently of each other.

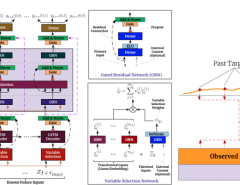

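- What the research proposes: Direct-a-Video controls object motion by modulating the spatial cross-attention of a text-to-video model according to user-specified boxes, and controls camera movement (panning and zooming) through new temporal cross-attention layers that interpret quantitative camera parameters. Only the temporal layers are trained, in a self-supervised manner on a small-scale dataset, so no explicit motion annotation is needed.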

- Results: Experiments show superior performance in decoupled control over object motion and camera movement, and the system remains effective in open-domain scenarios.
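The object-motion idea can be pictured as biasing cross-attention so that image positions inside a user-drawn box attend strongly to the object's text token, while positions outside attend to it weakly. The following is a minimal pure-Python sketch of that idea, not the authors' exact formulation. It assumes boxes are linearly interpolated between a start box and an end box (`interpolate_boxes` is a hypothetical helper), that logits are given per latent-grid position and text token, and that "modulation" means adding a fixed `strength` inside the box and subtracting it outside before the softmax. In a real model this bias would act inside the spatial cross-attention layers during denoising; the temporal camera layers are not shown.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of logits."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def interpolate_boxes(start_box, end_box, num_frames):
    """Hypothetical helper: linearly interpolate (x0, y0, x1, y1) boxes across frames."""
    if num_frames == 1:
        return [tuple(start_box)]
    boxes = []
    for f in range(num_frames):
        t = f / (num_frames - 1)
        boxes.append(tuple(round(a + (b - a) * t) for a, b in zip(start_box, end_box)))
    return boxes


def modulate_cross_attention(logits, width, token_idx, box, strength=2.0):
    """Bias one frame's spatial cross-attention toward a box (assumed scheme).

    logits: one list of per-text-token logits for each spatial position,
            in row-major order (position = y * width + x).
    box: (x0, y0, x1, y1) on the latent grid, half-open on the right/bottom.
    The object's token gets +strength inside the box and -strength outside;
    each position is then renormalised over the text tokens.
    """
    x0, y0, x1, y1 = box
    attn = []
    for pos, row in enumerate(logits):
        y, x = divmod(pos, width)
        inside = x0 <= x < x1 and y0 <= y < y1
        biased = list(row)
        biased[token_idx] += strength if inside else -strength
        attn.append(softmax(biased))
    return attn
```

For example, with a 4x4 latent grid, three text tokens where token 1 stands for the object, and three frames, `interpolate_boxes((0, 0, 2, 2), (2, 2, 4, 4), 3)` moves the box diagonally, and calling `modulate_cross_attention(frame_logits, 4, 1, box)` per frame shifts the object's attention along that path. Adding a bias before the softmax (rather than editing probabilities afterwards) keeps every position's attention a valid distribution.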

Technical Details

Technological frameworks used: Not specified

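Models used: A pretrained text-to-video diffusion model, with its spatial cross-attention modulated for object motion and new temporal cross-attention layers added for camera movement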

Data used: A small-scale video dataset, used for self-supervised training of the camera-movement layers
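The camera-movement layers are trained with an augmentation-based, self-supervised scheme that needs no motion annotation. The sketch below illustrates the general idea under our own assumptions: a clip assumed to be shot with a static camera is cropped with a window that shifts (pan) and shrinks (zoom) over time, so the camera parameters used to generate the clip double as training labels. The helper names `sample_window` and `simulate_camera`, the linear trajectory, the centred half-size starting window, the nearest-neighbour sampling, and the sign conventions are all hypothetical rather than taken from the paper.

```python
def sample_window(frame, cx, cy, win_w, win_h, out_w, out_h):
    """Nearest-neighbour resample of the window centred at (cx, cy) to out_w x out_h."""
    height, width = len(frame), len(frame[0])
    out = []
    for j in range(out_h):
        y = cy - win_h / 2 + (j + 0.5) * win_h / out_h
        yi = min(max(int(y), 0), height - 1)  # clamp at the image border
        row = []
        for i in range(out_w):
            x = cx - win_w / 2 + (i + 0.5) * win_w / out_w
            xi = min(max(int(x), 0), width - 1)
            row.append(frame[yi][xi])
        out.append(row)
    return out


def simulate_camera(frames, pan_x, pan_y, zoom, out_w, out_h):
    """Hypothetical augmentation: add synthetic pan/zoom to a static-camera clip.

    pan_x, pan_y: total shift of the crop window over the clip, as a fraction
                  of the frame width/height (positive = right/down, assumed).
    zoom: final magnification relative to the first frame (>1 zooms in).
    Returns the augmented clip and the parameters, which serve as free labels.
    """
    height, width = len(frames[0]), len(frames[0][0])
    base_w, base_h = width / 2, height / 2  # start from a centred half-size window
    n = len(frames)
    clip = []
    for k, frame in enumerate(frames):
        t = k / (n - 1) if n > 1 else 0.0
        scale = 1 + (zoom - 1) * t  # linear zoom ramp from 1 to `zoom`
        cx = width / 2 + pan_x * width * t
        cy = height / 2 + pan_y * height * t
        clip.append(sample_window(frame, cx, cy, base_w / scale, base_h / scale, out_w, out_h))
    return clip, (pan_x, pan_y, zoom)
```

As a usage example, take an 8x8 frame whose pixel values equal their column index, `frame = [list(range(8)) for _ in range(8)]`. Calling `simulate_camera([frame] * 5, 0.25, 0.0, 1.0, 4, 4)` yields a first-frame row of `[2, 3, 4, 5]` and a last-frame row of `[4, 5, 6, 7]`, i.e. a rightward pan whose label `(0.25, 0.0, 1.0)` comes for free. Starting from a half-size window leaves margin to pan and zoom without leaving the source frame.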

Potential Impact

Film and video production software markets, content creation platforms, and companies in the virtual reality and augmented reality sectors.

Want to implement this idea in a business?

We have generated a startup concept here: CineMancer.
