Authors: YuHe Ke, Liyuan Jin, Kabilan Elangovan, Hairil Rizal Abdullah, Nan Liu, Alex Tiong Heng Sia, Chai Rick Soh, Joshua Yi Min Tung, Jasmine Chiat Ling Ong, Daniel Shu Wei Ting

Published on: January 29, 2024

Impact Score: 8.52

arXiv ID: arXiv:2402.01733

Summary

- What is new: An LLM-RAG model customized for healthcare, focused mainly on preoperative medicine, whose responses were non-inferior to human-generated ones.

- Why this is important: There is a need for a faster yet accurate automated system for generating preoperative medical instructions.

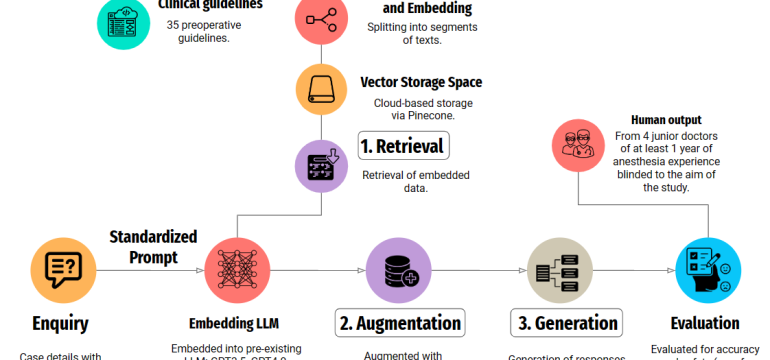

- What the research proposes: A tailored LLM-RAG pipeline that uses 35 preoperative guidelines to generate responses, significantly reducing response time compared with human-written responses.

- Results: The LLM-RAG model achieved an accuracy of 91.4%, outperforming the base LLMs without retrieval and performing comparably to instructions written by junior doctors.
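
The non-inferiority claim can be read against the generic criterion for comparing two accuracies. This is a hedged sketch only: the summary does not say which test, margin or interval the authors used, so the margin $\delta$, the significance level $\alpha$ and the Wald interval below (which assumes independent samples) are assumptions, not the paper's method. Here $x$ is the number of responses rated correct, $n$ the number evaluated, and H denotes the human (junior doctor) responses.

```latex
% Accuracy of each responder: share of responses rated correct
\hat{p}_{\mathrm{RAG}} = \frac{x_{\mathrm{RAG}}}{n_{\mathrm{RAG}}}, \qquad
\hat{p}_{\mathrm{H}} = \frac{x_{\mathrm{H}}}{n_{\mathrm{H}}}, \qquad
\hat{\Delta} = \hat{p}_{\mathrm{RAG}} - \hat{p}_{\mathrm{H}}

% Lower bound of a one-sided (1 - \alpha) Wald interval for the difference
L = \hat{\Delta} - z_{1-\alpha}
    \sqrt{\frac{\hat{p}_{\mathrm{RAG}}(1-\hat{p}_{\mathrm{RAG}})}{n_{\mathrm{RAG}}}
        + \frac{\hat{p}_{\mathrm{H}}(1-\hat{p}_{\mathrm{H}})}{n_{\mathrm{H}}}}

% Non-inferiority is concluded when the lower bound clears the margin
L > -\delta
```

In this notation the reported 91.4% is $\hat{p}_{\mathrm{RAG}}$. Note that non-inferiority only rules out the model being worse than the human baseline by more than $\delta$; it is a weaker claim than superiority.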

Technical Details

Technological frameworks used: the Python frameworks LangChain and LlamaIndex, with Pinecone for vector storage

Models used: GPT-4.0 enhanced with Retrieval-Augmented Generation (RAG)

Data used: 35 preoperative guidelines
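
The summary names the building blocks but not the pipeline code. As a rough illustration of the general retrieve-then-generate pattern, the dependency-free sketch below splits guideline text into overlapping chunks, embeds each chunk, retrieves the chunks closest to a question, and pastes them into the prompt. Everything here is an assumption for illustration: the guideline excerpts and source names are placeholder text (not clinical guidance), the bag-of-words `embed` stands in for a learned embedding model, the in-memory list stands in for Pinecone, and the chunk size, overlap and `k` are arbitrary. It does not use the LangChain or LlamaIndex APIs and is not the authors' implementation.

```python
import math
import re
from collections import Counter

# Placeholder excerpts (NOT real clinical guidance) standing in for the
# 35 preoperative guidelines; the source names are hypothetical.
GUIDELINES = {
    "fasting": "Patients must follow fasting instructions before surgery. "
               "Solid food is stopped earlier than clear fluids.",
    "anticoagulation": "Anticoagulant medication such as warfarin may need to be "
                       "stopped before surgery according to the bleeding risk.",
    "diabetes": "Diabetic patients may need insulin or oral medication doses "
                "adjusted on the day of surgery.",
}


def chunk_words(text, size=12, overlap=4):
    """Split text into overlapping windows of `size` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text):
    """Toy bag-of-words vector; a stand-in for a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(guidelines):
    """Chunk and embed every guideline; an in-memory stand-in for a vector DB."""
    return [(source, chunk, embed(chunk))
            for source, text in guidelines.items()
            for chunk in chunk_words(text)]


def retrieve(index, query, k=2):
    """Return the k chunks most similar to the query as (source, chunk, score)."""
    q = embed(query)
    scored = [(source, chunk, cosine(q, vec)) for source, chunk, vec in index]
    return sorted(scored, key=lambda hit: hit[2], reverse=True)[:k]


def build_prompt(question, hits):
    """Ground the question in retrieved excerpts before sending it to an LLM."""
    context = "\n".join(f"[{source}] {chunk}" for source, chunk, _ in hits)
    return ("Answer the preoperative question using only the guideline "
            "excerpts below. If they do not cover it, say so.\n\n"
            f"{context}\n\nQuestion: {question}\nAnswer:")


if __name__ == "__main__":
    index = build_index(GUIDELINES)
    question = "When should I stop warfarin before surgery?"
    hits = retrieve(index, question, k=2)
    print(hits[0][0])  # anticoagulation
    # In the described system the prompt would be sent to GPT-4; that call is omitted.
    print(build_prompt(question, hits))
```

Replacing `embed` with a real embedding model and the list with a hosted vector database changes the components but not the control flow: chunk, embed, retrieve the top-k matches, then constrain the model to answer from them.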

Potential Impact

Healthcare technology companies, particularly those developing or using medical AI applications, telemedicine services, and electronic health record systems.

Want to implement this idea in a business?

We have generated a startup concept here: PreOpAI.
