Authors: Dmytro Valiaiev

Published on: January 02, 2024

Impact Score: 8.45

arXiv code: arXiv:2402.01642

Summary

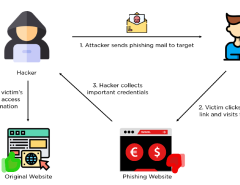

- What is new: This survey provides a comprehensive overview of the developments in identifying and mitigating the issues arising from machine-generated text, focusing on recent advancements in natural language generation and generative pre-trained transformers.

- Why this is important: Language models have become sophisticated enough that their output is hard to distinguish from human writing, and machine-generated content is increasingly flooding the public domain.

- What the research proposes: Using automated systems, possibly built on reverse-engineered language models, to help human agents differentiate between artificial and human-created texts, while also considering the socio-technological impacts of these technologies.

- Results: The paper synthesizes accomplishments in the field and explores the societal implications of machine-generated texts, aiming to foster the development of methods to address the challenges posed by the use of advanced language models.

Technical Details

Technological frameworks used: Natural Language Generation (NLG), Generative Pre-trained Transformer (GPT) models.

Models used: Reverse-engineered language models for text distinction.

Data used: Machine-generated text trends and examples.
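
To make the idea of language-model-based text distinction more concrete, here is a minimal sketch of one common family of detectors: score a text by its average log-likelihood under a reference language model and flag unusually "predictable" text. This is an illustration only, not the method of the surveyed paper. The unigram model, the function names (`train_unigram`, `mean_log_likelihood`, `looks_machine_generated`), and the threshold are all assumptions made for the example; a real system would use a far stronger model and a calibrated threshold.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens, alpha=1.0):
    """Build a smoothed unigram log-probability function (toy stand-in for a real LM)."""
    counts = Counter(corpus_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot for unseen tokens

    def log_prob(token):
        # Additive (Laplace) smoothing so unseen tokens get non-zero probability.
        return math.log((counts.get(token, 0) + alpha) / (total + alpha * vocab_size))

    return log_prob


def mean_log_likelihood(text, log_prob):
    """Average per-token log-likelihood of `text` under the reference model."""
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")
    return sum(log_prob(t) for t in tokens) / len(tokens)


def looks_machine_generated(text, log_prob, threshold):
    """Hypothetical decision rule: flag text the model finds unusually likely."""
    return mean_log_likelihood(text, log_prob) > threshold


# Tiny reference corpus, purely for demonstration.
reference = "the cat sat on the mat the cat".split()
log_prob = train_unigram(reference)

print(mean_log_likelihood("the cat", log_prob))
print(mean_log_likelihood("zebra quantum", log_prob))
```

Text made of tokens the reference model has seen often scores higher than text made of unseen tokens. The threshold that separates the two classes is the hard part in practice, and would need to be tuned on labeled human and machine-generated examples.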

Potential Impact

Journalism, customer service, and academic sectors could be disrupted, while companies developing NLG and GPT models could benefit.

Want to implement this idea in a business?

We have generated a startup concept here: AuthentiText.
