Authors: Sheng Luo, Wei Chen, Wanxin Tian, Rui Liu, Luanxuan Hou, Xiubao Zhang, Haifeng Shen, Ruiqi Wu, Shuyi Geng, Yi Zhou, Ling Shao, Yi Yang, Bojun Gao, Qun Li, Guobin Wu

Published on: February 05, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.02968

Summary

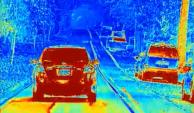

- What is new: This paper analyzes multi-modal multi-task visual understanding foundation models (MM-VUFMs) built specifically for road scenes, emphasizing their adaptability across diverse learning paradigms.

- Why this is important: Intelligent vehicles need to process and fuse data from diverse modalities effectively, both to understand the scene better and to handle a range of driving-related tasks.

- What the research proposes: A systematic analysis of MM-VUFMs designed for road scenes, covering task-specific models, unified models, and prompting techniques.

- Results: The analysis highlights how the multi-modal and multi-task learning capabilities of MM-VUFMs advance visual understanding for intelligent vehicles.

Technical Details

Technological frameworks used: Foundation models

Models used: Multi-modal multi-task visual understanding foundation models (MM-VUFMs)

Data used: Data from diverse modalities relevant to road scenes

Potential Impact

Automobile industry, intelligent vehicle manufacturers, autonomous driving technology companies

