Authors: Mingde Zhao, Safa Alver, Harm van Seijen, Romain Laroche, Doina Precup, Yoshua Bengio

Published on: September 30, 2023

Impact Score: 8.22

arXiv ID: arXiv:2310.00229

Summary

- What is new: Skipper brings spatio-temporal abstractions to model-based reinforcement learning so that an agent can apply learned skills in novel situations more reliably than current methods.

- Why this is important: Existing reinforcement learning agents struggle to generalize learned skills to scenarios they did not encounter during training.

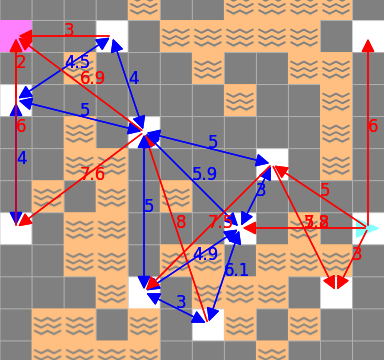

- What the research proposes: Skipper, a model-based reinforcement learning agent that uses spatio-temporal abstractions to break a task down into smaller subtasks, so that decision-making can focus on the relevant parts of the environment.

- Results: Skipper showed a significant advantage in zero-shot generalization across various tasks, outperforming the existing hierarchical planning methods it was compared against.

Technical Details

Technological frameworks used: Model-based reinforcement learning

Models used: Spatio-temporal abstractions, directed graph representations

Data used: Hindsight data for end-to-end learning
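To make the directed-graph idea concrete, here is a minimal sketch of planning over a small graph of subgoals. It is not Skipper's implementation: the checkpoint names, the hand-written edge costs, the function `plan_next_subgoal`, and the use of Dijkstra's shortest-path search are all assumptions chosen for illustration. In the paper's setting the graph would be learned rather than written by hand.

```python
import heapq


def plan_next_subgoal(edges, start, goal):
    """Find the cheapest path from start to goal in a directed graph.

    edges maps each node to a dict of {successor: estimated_cost}.
    Returns (next_subgoal, path), or (None, []) if the goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    frontier = [(0.0, start)]
    while frontier:
        d, node = heapq.heappop(frontier)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry
        for nxt, cost in edges.get(node, {}).items():
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(frontier, (nd, nxt))
    if goal not in dist:
        return None, []
    # Walk the predecessor links back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    next_subgoal = path[1] if len(path) > 1 else goal
    return next_subgoal, path


# Hypothetical proxy problem: vertices are checkpoints, edge weights are
# made-up cost estimates for travelling between them.
edges = {
    "start": {"door": 4.0, "key": 2.0},
    "key": {"door": 1.0},
    "door": {"goal": 3.0},
}
next_subgoal, path = plan_next_subgoal(edges, "start", "goal")
print(next_subgoal, path)
```

The agent only needs to commit to the first subgoal on the path and can replan once it gets there, which is one way to read "focusing on the relevant parts of the environment".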

Potential Impact

Skipper’s approach could benefit fields that rely on AI for planning and decision-making, such as logistics, autonomous vehicles, and robotics, by making agents more adaptable and efficient in situations they were not trained on.

