Authors: Louis Lippens

Published on: September 14, 2023

Impact Score: 8.6

arXiv ID: 2309.07664

Summary

- What is new: This paper provides evidence of ethnic and gender bias in ChatGPT’s evaluations of job applicants, revealing a nuanced pattern of discrimination that depends on the type of job and the applicant’s demographic characteristics.

- Why this is important: Language models such as ChatGPT may perpetuate systemic biases when they are used to assess job applicants.

- What the research proposes: Using a correspondence audit approach to evaluate potential ethnic and gender biases in ChatGPT’s screening of CVs.

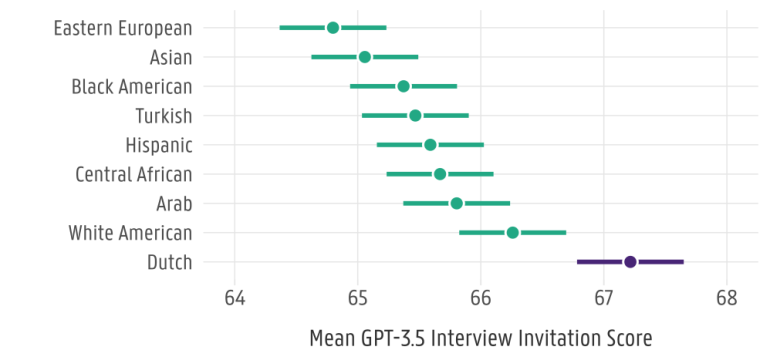

- Results: Both ethnic and gender biases were observed. Ethnic discrimination was more pronounced for jobs with more favorable working conditions or higher language requirements, while gender discrimination appeared in gender-atypical roles.
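One simple way to express results like these is a per-job score gap: the mean rating of a reference group minus the mean rating of each other group, computed separately for every vacancy. The sketch below is a minimal illustration under stated assumptions: each rating is a record with hypothetical `vacancy`, `group`, and `score` fields, and the toy values are invented for demonstration only. They are not data from the paper, whose own estimator may differ (for example, a regression-based or ratio-based measure).

```python
from collections import defaultdict
from statistics import mean


def score_gaps(records, reference="majority"):
    """Per vacancy, return mean(reference scores) - mean(other group scores).

    A positive gap means the reference group was rated higher for that vacancy.
    Field names (vacancy, group, score) are hypothetical, chosen for illustration.
    """
    # Group scores as cells[vacancy][group] -> list of scores.
    cells = defaultdict(lambda: defaultdict(list))
    for r in records:
        cells[r["vacancy"]][r["group"]].append(r["score"])

    gaps = {}
    for vacancy, groups in cells.items():
        if reference not in groups:
            continue  # no baseline group to compare against for this vacancy
        ref_mean = mean(groups[reference])
        for group, scores in groups.items():
            if group != reference:
                gaps[(vacancy, group)] = ref_mean - mean(scores)
    return gaps


# Toy, illustrative ratings only (not results from the paper).
TOY_RECORDS = [
    {"vacancy": "nurse", "group": "majority", "score": 8},
    {"vacancy": "nurse", "group": "majority", "score": 6},
    {"vacancy": "nurse", "group": "minority", "score": 6},
    {"vacancy": "nurse", "group": "minority", "score": 5},
    {"vacancy": "clerk", "group": "majority", "score": 7},
    {"vacancy": "clerk", "group": "minority", "score": 7},
]

gaps = score_gaps(TOY_RECORDS)
print(gaps)
```

Comparing gaps across vacancies, rather than pooling all ratings, is what lets an audit detect that bias concentrates in particular kinds of jobs.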

Technical Details

Technological frameworks used: Correspondence audit approach from the social sciences
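A correspondence audit crosses job vacancies with otherwise identical CVs that differ only in a demographic signal, such as the applicant’s name, so that any systematic difference in ratings can be attributed to that signal. The minimal sketch below builds such a full factorial design. The factor names and levels are hypothetical and are not the ones used in the paper.

```python
from itertools import product

# Hypothetical audit factors (illustrative only, not the paper's actual levels).
VACANCIES = ["receptionist", "software developer", "nurse", "truck driver"]
ETHNICITIES = ["majority", "minority_a", "minority_b"]
GENDERS = ["female", "male"]


def build_design(vacancies, ethnicities, genders):
    """Return every vacancy x ethnicity x gender cell of a full factorial design.

    In each cell the CV's qualifications are assumed to be held fixed; only the
    demographic signal (e.g. the applicant's name) varies between cells.
    """
    return [
        {"vacancy": v, "ethnicity": e, "gender": g}
        for v, e, g in product(vacancies, ethnicities, genders)
    ]


design = build_design(VACANCIES, ETHNICITIES, GENDERS)
print(len(design))  # 4 * 3 * 2 = 24 cells
```

Each cell would then be submitted to the model being audited and its rating recorded; a full factorial design ensures every demographic combination is tested against every vacancy.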

Models used: ChatGPT language model

Data used: 34,560 simulated vacancy-CV combinations representing diverse ethnic and gender identities

Potential Impact

HR technology companies, recruitment software providers, and organizations that use AI for personnel selection could be affected. They should consider how systemic bias may surface in their tools.

Want to implement this idea in a business?

We have generated a startup concept here: EquiHire.
