Authors: Yi Dong, Ronghui Mu, Gaojie Jin, Yi Qi, Jinwei Hu, Xingyu Zhao, Jie Meng, Wenjie Ruan, Xiaowei Huang

Published on: February 02, 2024

Impact Score: 8.2

arXiv ID: arXiv:2402.01822

Summary

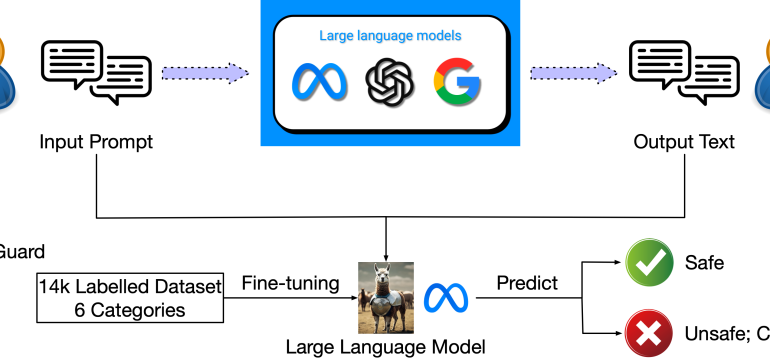

- What is new: This paper introduces a systematic approach to constructing guardrails for large language models (LLMs), advocating socio-technical methods and advanced neural-symbolic implementations.

- Why this is important: As LLMs become part of daily life, they pose risks that need to be mitigated, because those risks can have profound impacts on users and societies.

- What the research proposes: A systematic construction of guardrails through multi-disciplinary collaboration, focusing on socio-technical methods and neural-symbolic implementations.

- Results: The proposed methods aim to offer a comprehensive framework for safer LLM applications, although no specific outcome metrics are reported.

Technical Details

Technological frameworks used: Socio-technical frameworks, neural-symbolic implementations

Models used: Llama Guard, Nvidia NeMo, Guardrails AI

Data used: Previous research evidence
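
As a rough illustration of what "neural-symbolic" can mean for a guardrail, the sketch below combines explicit, auditable rules (the symbolic part) with a score from a learned model (the neural part). It is not taken from the paper or from any of the tools listed above. The names `BLOCK_PATTERNS`, `toy_risk_score`, `check`, and `Verdict` are hypothetical, and `toy_risk_score` is a keyword-counting stand-in for what would be a trained classifier in practice.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Verdict:
    allowed: bool
    reasons: List[str]


# Symbolic part: explicit rules that can be read and audited.
# These two patterns are hypothetical examples, not a complete policy.
BLOCK_PATTERNS = {
    "email_address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def toy_risk_score(text: str) -> float:
    """Stand-in for a learned classifier; returns a score in [0, 1].

    Assumption: a real system would call a trained model here.
    """
    risky = {"attack", "exploit", "weapon"}
    words = re.findall(r"[a-z]+", text.lower())
    hits = sum(word in risky for word in words)
    return min(1.0, hits / 2)


def check(
    text: str,
    scorer: Callable[[str], float] = toy_risk_score,
    threshold: float = 0.5,
) -> Verdict:
    """Block the text if any rule fires or the score reaches the threshold."""
    reasons = [name for name, pat in BLOCK_PATTERNS.items() if pat.search(text)]
    if scorer(text) >= threshold:
        reasons.append("risk_score")
    return Verdict(allowed=not reasons, reasons=reasons)


if __name__ == "__main__":
    for sample in [
        "What is the weather today?",
        "Contact me at a@b.com",
        "how to exploit and attack a server",
    ]:
        print(sample, "->", check(sample))
```

Keeping the rules and the scorer separate means each blocked message carries the reason it was blocked, which is one way to keep the symbolic side inspectable while the neural side is swapped or retrained.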

Potential Impact

This research could disrupt markets related to content moderation, social media platforms, customer service automation, and companies producing or deploying LLMs.

Want to implement this idea in a business?

We have generated a startup concept here: SafeSpeak AI.
