Authors: Monika Henzinger, Jalaj Upadhyay, Sarvagya Upadhyay

Published on: November 09, 2022

Impact Score: 8.38

arXiv ID: arXiv:2211.05006

Summary

- What is new: A novel mechanism for differentially private continual counting, a subroutine of private federated learning, with considerably lower error than the binary mechanism.

- Why this is important: In private federated learning, a concrete (non-asymptotic) bound on the error is needed in order to reduce the privacy parameter.

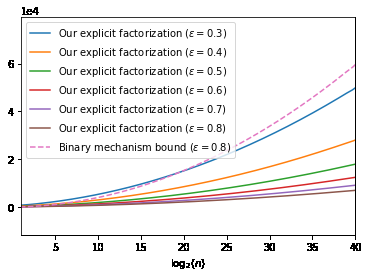

- What the research proposes: A new mechanism that is asymptotically optimal and reduces the mean squared error by a factor of 10 compared to the binary mechanism.

- Results: Demonstrated almost tight constants in error analysis and provided the first tight lower bound on continual counting under approximate differential privacy.
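For context, the binary mechanism used as the baseline above can be sketched as follows. This is a minimal illustration of the standard tree-based counter, not code from the paper: the function name `binary_mechanism` and the `sigma` parameter are assumptions made for this example, and calibrating `sigma` to a privacy budget is deliberately left out.

```python
import random

def binary_mechanism(stream, sigma, rng=None):
    """Tree-based (binary) counter: returns one noisy running count per step.

    sigma is the standard deviation of the Gaussian noise added to each
    dyadic partial sum (an assumption of this sketch; calibrating it to a
    privacy budget is not shown).
    """
    rng = rng or random.Random()
    levels = max(1, len(stream)).bit_length()
    exact = [0.0] * levels   # exact partial sum held at each tree level
    noisy = [0.0] * levels   # noisy copy released for that level
    outputs = []
    for t, x in enumerate(stream, start=1):
        i = (t & -t).bit_length() - 1  # index of the lowest set bit of t
        # Merge all lower levels plus the new item into level i.
        exact[i] = sum(exact[:i]) + x
        for j in range(i):
            exact[j] = 0.0
            noisy[j] = 0.0
        noisy[i] = exact[i] + rng.gauss(0.0, sigma)
        # The count at time t is the sum of the levels at the set bits of t.
        outputs.append(sum(noisy[j] for j in range(levels) if (t >> j) & 1))
    return outputs
```

With `sigma=0.0` the function returns the exact running counts, which is a convenient sanity check. Each output adds up at most one noisy partial sum per set bit of `t`, so the noise in a single release grows with the number of tree levels.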

Technical Details

Technological frameworks used: Matrix mechanism for the counting matrix, with constant time per release.
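To illustrate the general shape of a matrix mechanism for counting, here is a minimal sketch. It assumes the counting matrix M (lower-triangular, all ones) has been factored as M = L·R, and releases L(Rx + z) for Gaussian noise z. The helper names and the `sigma` parameter are hypothetical, and this naive version does not attempt the constant time per release mentioned above.

```python
import random

def counting_matrix(n):
    """Lower-triangular all-ones matrix: row t sums the first t + 1 inputs."""
    return [[1.0 if j <= i else 0.0 for j in range(n)] for i in range(n)]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matvec(a, v):
    """Plain matrix-vector product on nested lists."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]

def matrix_mechanism(left, right, x, sigma, rng=None):
    """Release left @ (right @ x + z) with z ~ N(0, sigma^2 I).

    Assumes left @ right equals the counting matrix; choosing sigma for a
    given privacy budget is outside the scope of this sketch.
    """
    rng = rng or random.Random()
    encoded = matvec(right, x)
    noisy = [e + rng.gauss(0.0, sigma) for e in encoded]
    return matvec(left, noisy)

# Trivial factorization for illustration: L = M, R = I (noise on each input).
x = [1, 0, 1, 1]
print(matrix_mechanism(counting_matrix(4), identity(4), x, sigma=0.0))
```

The choice of factorization is the design lever: under the Gaussian mechanism, the noise scale needed for privacy depends on the largest column norm of R, while L determines how that noise accumulates in each released count.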

Models used: Uses an explicit factorization of the counting matrix and a novel lower bound on a factorization norm.
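One explicit factorization of this kind is the matrix square root of the counting matrix: a lower-triangular Toeplitz matrix whose entries are the power-series coefficients of (1 - x)^(-1/2). The sketch below builds it and checks numerically that its square is the counting matrix. It is offered as an assumption about the kind of factorization meant here; the exact construction should be checked against arXiv:2211.05006. All function names are hypothetical.

```python
def sqrt_counting_coefficients(n):
    """First n coefficients of (1 - x)^(-1/2).

    f[0] = 1 and f[k] = f[k - 1] * (2k - 1) / (2k), giving 1, 1/2, 3/8, ...
    """
    f = [1.0]
    for k in range(1, n):
        f.append(f[-1] * (2 * k - 1) / (2 * k))
    return f

def toeplitz_lower(f):
    """Lower-triangular Toeplitz matrix with f[i - j] at position (i, j)."""
    n = len(f)
    return [[f[i - j] if j <= i else 0.0 for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Plain square-matrix product on nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

n = 6
root = toeplitz_lower(sqrt_counting_coefficients(n))
square = matmul(root, root)
# Every entry on or below the diagonal should be 1 up to rounding error.
max_dev = max(abs(square[i][j] - (1.0 if j <= i else 0.0))
              for i in range(n) for j in range(n))
print(max_dev)
```

Because both factors are the same Toeplitz matrix, each new row is determined by a single coefficient sequence, which is what makes the factorization explicit rather than the output of a numerical optimizer.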

Data used: None specified.

Potential Impact

Could disrupt markets relying on federated learning, like mobile device manufacturers, predictive text applications, and privacy-focused tech companies.

