Authors: Changrong Xiao, Wenxing Ma, Sean Xin Xu, Kunpeng Zhang, Yufang Wang, Qi Fu

Published on: January 12, 2024

Impact Score: 8.52

arXiv ID: arXiv:2401.06431

Summary

- What is new: This study applies advanced Large Language Models (LLMs), namely GPT-4 and a fine-tuned GPT-3.5, to Automated Essay Scoring (AES) and finds that they outperform traditional AES models.

- Why this is important: Second-language learners need immediate, personalized feedback, which is hard to provide when human instructors aren't available.

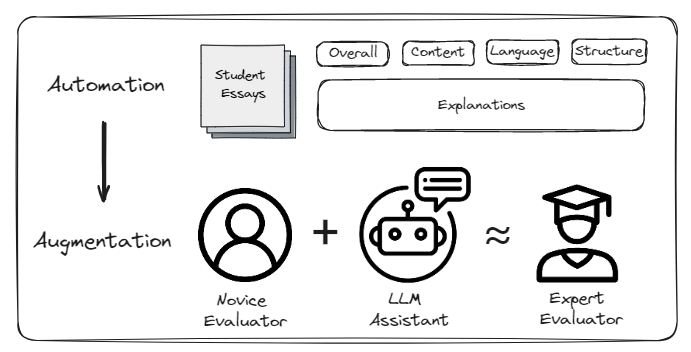

- What the research proposes: Using GPT-4 and a fine-tuned GPT-3.5 for AES, both to produce accurate, consistent automated grades and to help human graders improve their own performance.

- Results: LLMs demonstrated superior accuracy, consistency, generalizability, and interpretability in essay scoring. Additionally, LLM-assisted human graders, both novice and expert, showed improved grading performance.
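The summary reports accuracy and consistency without naming a metric. AES systems are commonly judged by their agreement with human raters using quadratic weighted kappa (QWK), κ = 1 − Σ w_ij O_ij / Σ w_ij E_ij with w_ij = (i − j)² / (N − 1)², where O is the observed score-pair matrix, E the matrix expected under independence, and N the number of score levels. Whether the authors used exactly this metric is an assumption here; the function name below is hypothetical. A minimal, dependency-free sketch:

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score, max_score):
    """Agreement between two integer score lists, penalizing disagreements by squared distance.

    Returns 1.0 for perfect agreement, roughly 0.0 for chance-level agreement,
    and negative values for systematic disagreement.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("score lists must be non-empty and of equal length")
    if max_score <= min_score:
        raise ValueError("max_score must exceed min_score")
    for s in list(rater_a) + list(rater_b):
        if not min_score <= s <= max_score:
            raise ValueError(f"score {s} outside {min_score}-{max_score}")

    k = max_score - min_score + 1  # number of score levels (N in the formula)
    n = len(rater_a)

    # Observed matrix: observed[i][j] counts essays that rater A scored i and rater B scored j.
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_score][b - min_score] += 1

    # Marginal histograms of each rater's scores.
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2  # quadratic disagreement penalty
            expected = hist_a[i] * hist_b[j] / n  # count expected if raters were independent
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected

    if weighted_expected == 0:
        # Both raters gave every essay the same single score: treat as perfect agreement.
        return 1.0
    return 1.0 - weighted_observed / weighted_expected
```

For example, human scores `[1, 2, 3, 4]` against model scores `[1, 2, 4, 3]` on a 1-4 scale give κ ≈ 0.8, while fully reversed scores `[4, 3, 2, 1]` give κ ≈ −1. scikit-learn's `cohen_kappa_score(y1, y2, labels=..., weights="quadratic")` should return the same value when `labels` covers the full score range.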

Technical Details

Technological frameworks used:

Models used: GPT-4, fine-tuned GPT-3.5

Data used: Public and private datasets
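The summary lists a fine-tuned GPT-3.5 and human-scored datasets but not how the two were combined. The sketch below assumes OpenAI's chat fine-tuning format, in which each JSONL line is an object holding a `messages` list of `system`, `user`, and `assistant` turns. The rubric wording, the 1-6 scale, the score-then-rationale reply layout, and the helper names `to_finetune_record` and `parse_score` are all hypothetical, not the authors' setup. `parse_score` shows one way to turn a model's reply back into a validated integer score.

```python
import json
import re

# Hypothetical rubric prompt; the authors' actual prompt is not given in this summary.
RUBRIC = (
    "Score the essay from 1 to 6 for overall writing quality. "
    "Reply with the score on the first line, then a short justification."
)


def to_finetune_record(essay: str, score: int, rationale: str) -> str:
    """Serialize one human-scored essay as a single chat-format JSONL line (hypothetical layout)."""
    record = {
        "messages": [
            {"role": "system", "content": RUBRIC},
            {"role": "user", "content": essay},
            # Target reply: score first, then the justification.
            {"role": "assistant", "content": f"{score}\n{rationale}"},
        ]
    }
    return json.dumps(record, ensure_ascii=False)


def parse_score(reply: str, min_score: int = 1, max_score: int = 6) -> int:
    """Extract the integer score from the first line of a model reply and check its range."""
    stripped = reply.strip()
    first_line = stripped.splitlines()[0] if stripped else ""
    match = re.search(r"-?\d+", first_line)
    if match is None:
        raise ValueError(f"no score found in reply: {reply!r}")
    score = int(match.group())
    if not min_score <= score <= max_score:
        raise ValueError(f"score {score} outside {min_score}-{max_score}")
    return score
```

Writing one `to_finetune_record(...)` line per scored essay produces a training file; at grading time, `parse_score("Score: 3")` returns `3`. Rejecting out-of-range or missing scores, rather than guessing, keeps malformed replies from silently entering the results.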

Potential Impact

Sectors that could benefit include educational technology, language-learning platforms, standardized-testing companies, and EdTech AI developers.

Want to implement this idea in a business?

We have generated a startup concept here: EssayGenius.
